Almost a year and a half ago, the Spring team announced its decision to move most Spring Cloud Netflix components into maintenance mode. This means that no new features have been added to these modules since the Greenwich release train. The team is now starting work on the Ilford release train, which removes popular projects such as Ribbon, Hystrix, and Zuul from Spring Cloud. The only module that will remain in use is the Netflix discovery server, Eureka.

This change is significant for Spring Cloud, because from the beginning it has been known for its integration with Netflix components. Moreover, Spring Cloud Netflix is still the most popular Spring Cloud project on GitHub (~4k stars). Continue reading “A New Era Of Spring Cloud”

Tag: Eureka

The Future of Spring Cloud Microservices After Netflix Era

If somebody asked you about Spring Cloud, the first thing that would come to your mind is probably Netflix OSS support. Support for tools such as Eureka, Zuul, or Ribbon is provided not only by Spring, but also by other popular frameworks used for building microservices architectures, such as Apache Camel, Vert.x, or Micronaut. Currently, Spring Cloud Netflix is the most popular project within Spring Cloud. It has around 3.2k stars on GitHub, while the second most popular has around 1.4k. It is therefore quite surprising that Pivotal has announced that most Spring Cloud Netflix modules are entering maintenance mode. You can read more about it in the post published on the Spring blog by Spencer Gibb: https://spring.io/blog/2018/12/12/spring-cloud-greenwich-rc1-available-now.

Kotlin Microservices with Micronaut, Spring Cloud and JPA

The Micronaut framework provides support for Kotlin built on the Kapt compiler plugin. It also implements the most popular cloud-native patterns, such as distributed configuration, service discovery, and client-side load balancing. These features allow you to include an application built on top of Micronaut in an existing microservices-based system. The most popular example of such an approach may be integration with the Spring Cloud ecosystem. If you have already used Spring Cloud, it is very likely you built your microservices-based architecture using Eureka discovery server and Spring Cloud Config as a configuration server. Beginning with version 1.1, Micronaut supports both of these popular tools from the Spring Cloud project. That’s good news, because in version 1.0 the only supported distributed solution was Consul, and there was no possibility of using Eureka discovery together with a Consul property source (running them together ended with an exception).

Spring Boot Autoscaler

One of the more important reasons we decide to use tools such as Kubernetes, Pivotal Cloud Foundry, or HashiCorp’s Nomad is the availability of auto-scaling for our applications. Of course, those tools provide many other useful mechanisms, but we can also implement auto-scaling ourselves. At first glance it seems difficult, but assuming we use Spring Boot as the framework for building our applications and Jenkins as a CI server, it does not require a lot of work. Today, I’m going to show you how to implement such a solution using the following frameworks/tools:

- Spring Boot

- Spring Boot Actuator

- Spring Cloud Netflix Eureka

- Jenkins CI

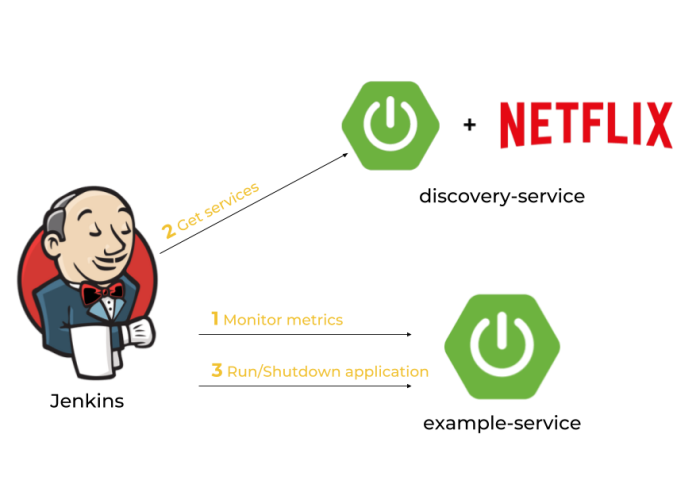

How does it work?

Every Spring Boot application that includes the Spring Boot Actuator library can expose metrics under the endpoint /actuator/metrics. There are many valuable metrics that give you detailed information about application status. Some of them may be especially important for autoscaling: JVM and CPU metrics, the number of running threads, and the number of incoming HTTP requests. A dedicated Jenkins pipeline is responsible for monitoring the application’s metrics by polling the endpoint /actuator/metrics periodically. If any monitored metric is below or above the target range, it starts a new instance or shuts down a running instance of the application using another Actuator endpoint, /actuator/shutdown. Before that, it needs to fetch the current list of running instances of the application in order to get the address of an existing instance selected for shutdown, or the address of the server with the smallest number of running instances for a new instance.

Having discussed the architecture of our system, we may proceed to development. Our application needs to meet some requirements: it has to expose metrics and an endpoint for graceful shutdown, it needs to register in Eureka after startup and deregister on shutdown, and finally it should dynamically allocate its port from the pool of free ports. Thanks to Spring Boot we may easily implement all these mechanisms in five minutes 🙂

Dynamic port allocation

Since it is possible to run many instances of the application on a single machine, we have to guarantee that there won’t be conflicts in port numbers. Fortunately, Spring Boot provides such a mechanism. We just need to set the port number to 0 in the application.yml file using the server.port property. Because our application registers itself in Eureka, it also needs to send a unique instanceId, which by default is generated as a concatenation of the fields spring.cloud.client.hostname, spring.application.name and server.port.

Here’s the current configuration of our sample application. I have changed the template of the instanceId field by replacing the port number with a randomly generated number.

spring:
  application:
    name: example-service
server:
  port: ${PORT:0}
eureka:
  instance:
    instanceId: ${spring.cloud.client.hostname}:${spring.application.name}:${random.int[1,999999]}
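To make the two settings above more concrete, here is a minimal plain-Java sketch of what they amount to: binding to port 0 asks the operating system for any free port, and the instanceId gets a random numeric suffix instead of the port. The class and method names are hypothetical and are not part of the sample application, and the bounds of the random range are an assumption chosen to resemble the template above.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.ServerSocket;
import java.util.concurrent.ThreadLocalRandom;

/** Hypothetical illustration of "port 0" and a random instanceId suffix. */
final class DynamicInstanceSketch {

    /** Binds to port 0 so that the operating system assigns a currently free port. */
    static int findFreePort() {
        try (ServerSocket socket = new ServerSocket(0)) {
            return socket.getLocalPort();
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Builds an id shaped like hostname:appName:random, mirroring the template above. */
    static String instanceId(String hostname, String appName) {
        // Assumption: lower bound inclusive, upper bound exclusive, resembling random.int[1,999999].
        int suffix = ThreadLocalRandom.current().nextInt(1, 999999);
        return hostname + ":" + appName + ":" + suffix;
    }
}
```

Calling DynamicInstanceSketch.instanceId("localhost", "example-service") twice will most likely give two different ids, which is exactly what Eureka needs to tell two instances on the same host apart.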

Enabling Actuator metrics

To enable Spring Boot Actuator we need to add the following dependency to pom.xml.

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-actuator</artifactId>
</dependency>

We also have to enable exposure of Actuator endpoints via the HTTP API by setting the property management.endpoints.web.exposure.include to '*'. Now, the list of all available metric names is available under the context path /actuator/metrics, while detailed information for each metric is available under the path /actuator/metrics/{metricName}.

Graceful shutdown

Besides metrics, Spring Boot Actuator also provides an endpoint for shutting down an application. However, in contrast to other endpoints, this endpoint is not available by default. We have to set the property management.endpoint.shutdown.enabled to true. After that we will be able to stop our application by sending a POST request to the /actuator/shutdown endpoint.

This method of stopping application guarantees that service will unregister itself from Eureka server before shutdown.
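As a rough sketch of what such a shutdown call looks like from plain Java (11 or newer), the snippet below only builds the POST request and does not send it. The class name and the base URL passed in are hypothetical.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/** Hypothetical helper that prepares a call to the Actuator shutdown endpoint. */
final class ShutdownRequestSketch {

    /** Builds (but does not send) a POST request to /actuator/shutdown of the given instance. */
    static HttpRequest shutdownRequest(String instanceBaseUrl) {
        return HttpRequest.newBuilder(URI.create(instanceBaseUrl + "/actuator/shutdown"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.noBody())
                .build();
    }
}
```

To actually stop an instance you would pass the request to HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()). A plain GET would not work here, because the endpoint accepts only POST.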

Enabling Eureka discovery

Eureka is the most popular discovery server used for building microservices-based architecture with Spring Cloud. So, if you already have microservices and want to provide auto-scaling mechanisms for them, Eureka is a natural choice. It stores the IP address and port number of every registered instance of an application. To enable Eureka on the client side you just need to add the following dependency to your pom.xml.

<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-netflix-eureka-client</artifactId>
</dependency>

As I have mentioned before, we also have to guarantee the uniqueness of the instanceId sent to the Eureka server by the client-side application. This has been described in the step “Dynamic port allocation”.

The next step is to create an application with an embedded Eureka server. To achieve this, we first need to add the following dependency to pom.xml.

<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-netflix-eureka-server</artifactId>
</dependency>

The main class should be annotated with @EnableEurekaServer.

@SpringBootApplication
@EnableEurekaServer
public class DiscoveryApp {

    public static void main(String[] args) {
        new SpringApplicationBuilder(DiscoveryApp.class).run(args);
    }

}

By default, client-side applications try to connect to the Eureka server on localhost under port 8761. We only need a single, standalone Eureka node, so we will disable registration and attempts to fetch the list of services from other instances of the server.

spring:
  application:
    name: discovery-service
server:
  port: ${PORT:8761}
eureka:
  instance:
    hostname: localhost
  client:
    registerWithEureka: false
    fetchRegistry: false
    serviceUrl:
      defaultZone: http://localhost:8761/eureka/

The tests of the sample autoscaling system will be performed using Docker containers, so we need to prepare and build an image with the Eureka server. Here’s the Dockerfile with the image definition. It can be built using the command docker build -t piomin/discovery-server:2.0 . (the trailing dot is the build context).

FROM openjdk:8-jre-alpine
ENV APP_FILE discovery-service-1.0-SNAPSHOT.jar
ENV APP_HOME /usr/apps
EXPOSE 8761
COPY target/$APP_FILE $APP_HOME/
WORKDIR $APP_HOME
ENTRYPOINT ["sh", "-c"]
CMD ["exec java -jar $APP_FILE"]

Building Jenkins pipeline for autoscaling

The first step is to prepare the Jenkins pipeline responsible for autoscaling. We will create a Jenkins Declarative Pipeline, which runs every minute. Periodic execution may be configured with the triggers directive, which defines the automated ways in which the pipeline should be re-triggered. Our pipeline will communicate with the Eureka server and with the metrics endpoints exposed by every microservice using Spring Boot Actuator.

The test service name is EXAMPLE-SERVICE, which is the uppercased value of the property spring.application.name defined in the application.yml file. The monitored metric is the number of HTTP listener threads running on the Tomcat container. These threads are responsible for processing incoming HTTP requests.

pipeline {
    agent any
    triggers {
        cron('* * * * *')
    }
    environment {
        SERVICE_NAME = "EXAMPLE-SERVICE"
        METRICS_ENDPOINT = "/actuator/metrics/tomcat.threads.busy?tag=name:http-nio-auto-1"
        SHUTDOWN_ENDPOINT = "/actuator/shutdown"
    }
    stages { ... }
}

Integrating Jenkins pipeline with Eureka

The first stage of our pipeline is responsible for fetching the list of services registered in the service discovery server. Eureka exposes an HTTP API with several endpoints. One of them is GET /eureka/apps/{serviceName}, which returns the list of all instances of the application with the given name. We save the number of running instances and the URL of every single instance. These values will be accessed during the next stages of the pipeline.

Here’s the fragment of the pipeline responsible for fetching the list of running instances of the application. The name of the stage is Calculate. We use the HTTP Request Plugin for HTTP connections.

stage('Calculate') {
    steps {
        script {
            def response = httpRequest "http://192.168.99.100:8761/eureka/apps/${env.SERVICE_NAME}"
            def app = printXml(response.content)
            def index = 0
            env["INSTANCE_COUNT"] = app.instance.size()
            app.instance.each {
                if (it.status == 'UP') {
                    def address = "http://${it.ipAddr}:${it.port}"
                    env["INSTANCE_${index++}"] = address
                }
            }
        }
    }
}

@NonCPS
def printXml(String text) {
    return new XmlSlurper(false, false).parseText(text)
}

Here’s a sample response from Eureka API for our microservice. The response content type is XML.
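For readers who prefer plain Java to Groovy, the sketch below parses a heavily trimmed, hypothetical response and collects the addresses of instances with status UP, which is the same selection the Calculate stage performs. Only the element names ipAddr, port and status are taken from the pipeline code above; the sample values and the class name are made up for illustration, and a real response carries many more fields.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical helper that extracts the addresses of UP instances from an Eureka XML response. */
final class EurekaResponseSketch {

    // Hypothetical, trimmed sample: two instances, only one of them UP.
    static final String SAMPLE =
          "<application><name>EXAMPLE-SERVICE</name>"
        + "<instance><ipAddr>192.168.99.1</ipAddr><status>UP</status>"
        + "<port enabled=\"true\">51191</port></instance>"
        + "<instance><ipAddr>192.168.99.1</ipAddr><status>DOWN</status>"
        + "<port enabled=\"true\">51192</port></instance>"
        + "</application>";

    /** Returns http://ip:port for every instance element whose status is UP. */
    static List<String> upInstanceAddresses(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            NodeList instances = doc.getElementsByTagName("instance");
            List<String> addresses = new ArrayList<>();
            for (int i = 0; i < instances.getLength(); i++) {
                Element instance = (Element) instances.item(i);
                if ("UP".equals(text(instance, "status"))) {
                    addresses.add("http://" + text(instance, "ipAddr") + ":" + text(instance, "port"));
                }
            }
            return addresses;
        } catch (Exception e) {
            throw new IllegalArgumentException("Cannot parse Eureka response", e);
        }
    }

    /** Reads the trimmed text of the first child element with the given tag name. */
    private static String text(Element parent, String tag) {
        return parent.getElementsByTagName(tag).item(0).getTextContent().trim();
    }
}
```

EurekaResponseSketch.upInstanceAddresses(EurekaResponseSketch.SAMPLE) skips the DOWN instance and keeps only the first one.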

Integrating Jenkins pipeline with Spring Boot Actuator metrics

Spring Boot Actuator exposes an endpoint with metrics, which allows you to find a metric by name and optionally by tag. In the pipeline fragment below I’m trying to find an instance with a metric below or above a defined threshold. If there is such an instance, we stop the loop and proceed to the next stage, which performs scaling down or up. The IP addresses of running applications are taken from the pipeline environment variables with the prefix INSTANCE_, which were saved in the previous stage.

stage('Metrics') {
    steps {
        script {
            // env values are strings, so convert before using the count as a loop bound
            def count = env.INSTANCE_COUNT.toInteger()
            for (def i = 0; i < count; i++) {
                def address = env["INSTANCE_${i}"]
                // check for a missing address before appending the endpoint path
                if (address == null)
                    break
                def url = address + env.METRICS_ENDPOINT
                def response = httpRequest url
                def objRes = printJson(response.content)
                env.SCALE_TYPE = returnScaleType(objRes)
                if (env.SCALE_TYPE != "NONE")
                    break
            }
        }
    }
}

// requires "import groovy.json.JsonSlurper" at the top of the Jenkinsfile
@NonCPS
def printJson(String text) {
    return new JsonSlurper().parseText(text)
}

def returnScaleType(objRes) {
    def value = objRes.measurements[0].value
    if (value.toInteger() > 100)
        return "UP"
    else if (value.toInteger() < 20)
        return "DOWN"
    else
        return "NONE"
}
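The decision rule in returnScaleType can be sketched in plain Java as follows. The sample JSON is a hypothetical, trimmed response shaped like the measurements array the pipeline reads, and a regular expression stands in for a real JSON parser purely to keep the example dependency-free. The class and method names are assumptions.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical Java rendering of the scaling decision made in the Metrics stage. */
final class ScaleDecisionSketch {

    // Hypothetical sample shaped like a trimmed Actuator metric response.
    static final String SAMPLE =
        "{\"name\":\"tomcat.threads.busy\",\"measurements\":[{\"statistic\":\"VALUE\",\"value\":120.0}]}";

    private static final Pattern VALUE = Pattern.compile("\"value\"\\s*:\\s*(-?\\d+(?:\\.\\d+)?)");

    /** Extracts the first measurement value; the regex is a stand-in for a JSON parser. */
    static double firstMeasurement(String json) {
        Matcher matcher = VALUE.matcher(json);
        if (!matcher.find()) {
            throw new IllegalArgumentException("No measurement value found");
        }
        return Double.parseDouble(matcher.group(1));
    }

    /** Same thresholds as returnScaleType: above 100 scale up, below 20 scale down. */
    static String scaleType(double value) {
        int threads = (int) value; // truncates, like value.toInteger() in the pipeline
        if (threads > 100) return "UP";
        if (threads < 20) return "DOWN";
        return "NONE";
    }
}
```

Note that both boundaries are exclusive: a value of exactly 100 or exactly 20 leaves the number of instances unchanged.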

Shutdown application instance

In the last stage of our pipeline we shut down a running instance or start a new instance, depending on the result saved in the previous stage. Shutdown may be easily performed by calling the Spring Boot Actuator endpoint. In the following pipeline fragment we pick the instance returned first by Eureka. Then we send a POST request to that IP address.

If we need to scale up our application, we call another pipeline responsible for building the fat JAR and launching it on our machine.

stage('Scaling') {
    steps {
        script {
            if (env.SCALE_TYPE == 'DOWN') {
                def ip = env["INSTANCE_0"] + env.SHUTDOWN_ENDPOINT
                httpRequest url:ip, contentType:'APPLICATION_JSON', httpMode:'POST'
            } else if (env.SCALE_TYPE == 'UP') {
                build job:'spring-boot-run-pipeline'
            }
            currentBuild.description = env.SCALE_TYPE
        }
    }
}

Here’s a full definition of our pipeline spring-boot-run-pipeline responsible for starting new instance of application. It clones the repository with application source code, builds binaries using Maven commands, and finally runs the application using java -jar command passing address of Eureka server as a parameter.

pipeline {
    agent any
    tools {
        maven 'M3'
    }
    stages {
        stage('Checkout') {
            steps {
                git url: 'https://github.com/piomin/sample-spring-boot-autoscaler.git', credentialsId: 'github-piomin', branch: 'master'
            }
        }
        stage('Build') {
            steps {
                dir('example-service') {
                    sh 'mvn clean package'
                }
            }
        }
        stage('Run') {
            steps {
                dir('example-service') {
                    sh 'nohup java -jar -DEUREKA_URL=http://192.168.99.100:8761/eureka target/example-service-1.0-SNAPSHOT.jar 1>/dev/null 2>logs/runlog &'
                }
            }
        }
    }
}

Remote extension

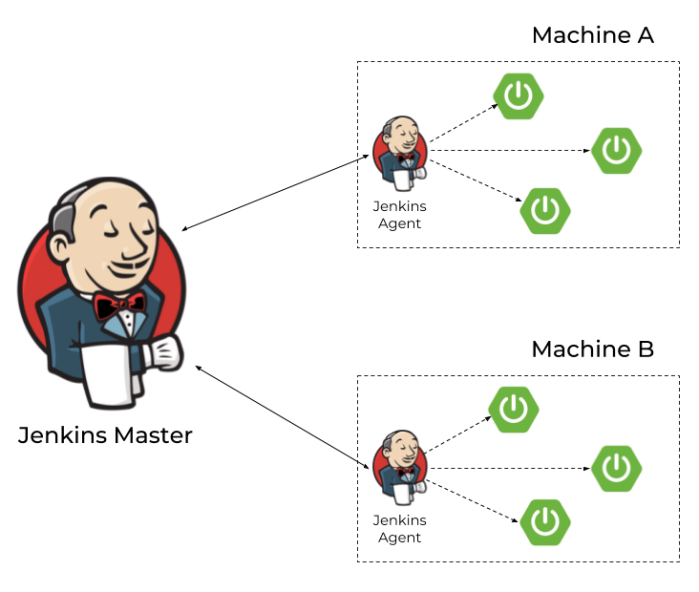

The algorithm discussed in the previous sections will work fine only for microservices launched on a single machine. If we would like to extend it to work with many machines, we will have to modify our architecture as shown in the diagram. Each machine has a Jenkins agent running and communicating with the Jenkins master. If we would like to start a new instance of a microservice on the selected machine, we have to run the pipeline using the agent running on that machine. This agent is responsible only for building the application from source code and launching it on the target machine. The shutdown of an instance is still performed just by calling the HTTP endpoint.

You can find more information about running Jenkins agents and connecting them with the Jenkins master via the JNLP protocol in my article Jenkins nodes on Docker containers. Assuming we have successfully launched some agents on the target machines, we need to parametrize our pipelines in order to be able to select the agent (and therefore the target machine) dynamically.

When we are scaling up our application, we have to pass the agent label to the downstream pipeline.

build job:'spring-boot-run-pipeline', parameters:[string(name: 'agent', value:"slave-1")]

The called pipeline will be run by the agent labelled with the given parameter.

pipeline {
    agent {
        label "${params.agent}"
    }
    stages { ... }
}

If we have more than one agent connected to the master node, we can map their addresses to the labels. Thanks to that, we are able to map the IP address of a microservice instance fetched from Eureka to the target machine with a Jenkins agent.

pipeline {
    agent any
    triggers {
        cron('* * * * *')
    }
    environment {
        SERVICE_NAME = "EXAMPLE-SERVICE"
        METRICS_ENDPOINT = "/actuator/metrics/tomcat.threads.busy?tag=name:http-nio-auto-1"
        SHUTDOWN_ENDPOINT = "/actuator/shutdown"
        // variable names cannot contain dots, so the IP address is written with underscores
        AGENT_192_168_99_102 = "slave-1"
        AGENT_192_168_99_103 = "slave-2"
    }
    stages { ... }
}
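The lookup this mapping enables can be illustrated with a small plain-Java sketch: take the address of an instance as fetched from Eureka, extract the host, and resolve the agent label for that machine. The class and method names are hypothetical, and the map simply repeats the two address-to-label pairs from the environment block above.

```java
import java.net.URI;
import java.util.Map;
import java.util.Optional;

/** Hypothetical lookup from an instance address to the Jenkins agent label of its machine. */
final class AgentLookupSketch {

    // The same two mappings as in the environment block above.
    static final Map<String, String> AGENTS = Map.of(
            "192.168.99.102", "slave-1",
            "192.168.99.103", "slave-2");

    /** Returns the agent label for the machine hosting the given instance, if it is known. */
    static Optional<String> agentFor(String instanceUrl) {
        String host = URI.create(instanceUrl).getHost();
        return Optional.ofNullable(host).map(AGENTS::get);
    }
}
```

An unknown address yields an empty Optional, which is the case where the pipeline would have no agent to delegate to.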

Summary

In this article I have demonstrated how to use Spring Boot Actuator metrics to scale your Spring Boot application up and down. Using basic mechanisms provided by Spring Boot together with Spring Cloud Netflix Eureka and Jenkins, you can implement auto-scaling for your applications without any other third-party tools. The case described in this article assumes using Jenkins agents on the remote machines to launch new instances of the application there, but you may as well use a tool like Ansible for that. If you decide to run Ansible playbooks from Jenkins, you will not have to launch Jenkins agents on the remote machines. The source code with sample applications is available on GitHub: https://github.com/piomin/sample-spring-boot-autoscaler.git.

Secure Discovery with Spring Cloud Netflix Eureka

Building a standard discovery mechanism based on Spring Cloud Netflix Eureka is rather easy. The same solution built over secure SSL communication between discovery client and server may be a slightly more advanced challenge. I haven’t found any complete example of such an application on the web. Let’s try to implement it, beginning with the server-side application.

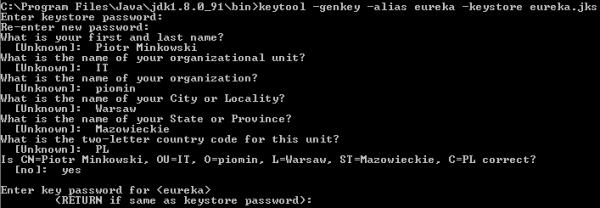

1. Generate certificates

If you have developed Java applications for some years, you have probably heard about keytool. This tool is available in your ${JAVA_HOME}\bin directory and is designed for managing keys and certificates. We begin by generating a keystore for the server-side Spring Boot application. The keytool command generates a certificate stored inside a JKS keystore file named eureka.jks.

2. Setting up a secure discovery server

Since the Eureka server is embedded in a Spring Boot application, we need to secure it using standard Spring Boot properties. I placed the generated keystore file eureka.jks on the application’s classpath. Now, the only thing that has to be done is to prepare some configuration settings inside application.yml that point to the keystore file location, type, and access password.

server:
  port: 8761
  ssl:
    enabled: true
    key-store: classpath:eureka.jks
    key-store-password: 123456
    trust-store: classpath:eureka.jks
    trust-store-password: 123456
    key-alias: eureka

3. Setting up two-way SSL authentication

We will complicate our example a little. A standard SSL configuration assumes that only the client verifies the server certificate. We will force client’s certificate authentication on the server-side. It can be achieved by setting the property server.ssl.client-auth to need.

server:
  ssl:
    client-auth: need

That’s not all, because we also have to add the client’s certificate to the list of trusted certificates on the server side. So, first let’s generate the client’s keystore using the same keytool command as for the server’s keystore.

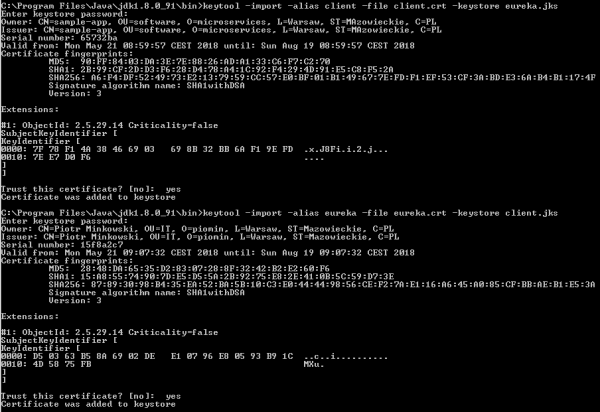

Now, we need to export certificates from the generated keystores for both the client and server sides.

Finally, we import the client’s certificate into the server’s keystore and the server’s certificate into the client’s keystore.

4. Running secure Eureka server

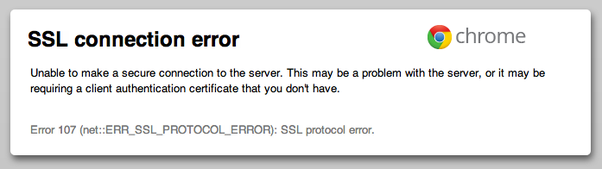

The sample applications are available on GitHub in repository sample-secure-eureka-discovery (https://github.com/piomin/sample-secure-eureka-discovery.git). After running discovery-service application, Eureka is available under address https://localhost:8761. If you try to visit its web dashboard you get the following exception in your web browser. It means Eureka server is secured.

Well, the Eureka dashboard is sometimes a useful tool, so let’s import the client’s keystore into our web browser to be able to access it. We have to convert the client’s keystore from JKS to PKCS12 format. Here’s the command that performs this operation.

$ keytool -importkeystore -srckeystore client.jks -destkeystore client.p12 -srcstoretype JKS -deststoretype PKCS12 -srcstorepass 123456 -deststorepass 123456 -srcalias client -destalias client -srckeypass 123456 -destkeypass 123456 -noprompt

5. Client’s application configuration

When implementing a secure connection on the client side, we generally need to do the same as in the previous step: import a keystore. However, it is not a very simple thing to do, because Spring Cloud does not provide any configuration property that allows you to pass the location of an SSL keystore to a discovery client. It is worth mentioning that the Eureka client uses a Jersey client to communicate with the server-side application. It may be a little surprising that it is not Spring RestTemplate, but we should remember that Spring Cloud Eureka is built on top of the Netflix OSS Eureka client, which does not use Spring libraries.

HTTP basic authentication is automatically added to your Eureka client if you include security credentials in the connection URL, for example http://piotrm:12345@localhost:8761/eureka. For more advanced configuration, like passing an SSL keystore to the HTTP client, we need to provide a @Bean of type DiscoveryClientOptionalArgs.
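To illustrate what credentials in the URL mean at the HTTP level, here is a minimal plain-Java sketch that turns the user-info part of such a URL into a Basic Authorization header value. It shows the general HTTP basic authentication scheme only; it is not the Eureka client’s actual code, and the class and method names are hypothetical.

```java
import java.net.URI;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

/** Hypothetical helper showing how URL credentials map to an HTTP Basic header. */
final class BasicAuthSketch {

    /** Derives the Authorization header value from the user-info part of the URL. */
    static String authorizationHeader(String url) {
        String userInfo = URI.create(url).getUserInfo(); // "user:password" or null
        if (userInfo == null) {
            throw new IllegalArgumentException("URL carries no credentials");
        }
        String encoded = Base64.getEncoder().encodeToString(userInfo.getBytes(StandardCharsets.UTF_8));
        return "Basic " + encoded;
    }
}
```

Because Base64 is an encoding and not encryption, such credentials are readable by anyone who can observe the traffic, which is one more reason to run the connection over SSL.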

The following fragment of code shows how to enable SSL connection for discovery client. First, we set location of keystore and truststore files using javax.net.ssl.* Java system property. Then, we provide custom implementation of Jersey client based on Java SSL settings, and set it for DiscoveryClientOptionalArgs bean.

@Bean
public DiscoveryClient.DiscoveryClientOptionalArgs discoveryClientOptionalArgs() throws NoSuchAlgorithmException {
    DiscoveryClient.DiscoveryClientOptionalArgs args = new DiscoveryClient.DiscoveryClientOptionalArgs();
    System.setProperty("javax.net.ssl.keyStore", "src/main/resources/client.jks");
    System.setProperty("javax.net.ssl.keyStorePassword", "123456");
    System.setProperty("javax.net.ssl.trustStore", "src/main/resources/client.jks");
    System.setProperty("javax.net.ssl.trustStorePassword", "123456");
    EurekaJerseyClientBuilder builder = new EurekaJerseyClientBuilder();
    builder.withClientName("account-client");
    builder.withSystemSSLConfiguration();
    builder.withMaxTotalConnections(10);
    builder.withMaxConnectionsPerHost(10);
    args.setEurekaJerseyClient(builder.build());
    return args;
}
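The bean above relies on JVM-wide system properties. For comparison only, the following JDK-only sketch shows the two roles those properties play in two-way SSL: the key store holds the identity our side presents, and the trust store holds the certificates we accept from the peer. This is a different technique from the one used in the article (it builds an explicit SSLContext and does not touch the Eureka or Jersey API), and the class and method names are hypothetical.

```java
import java.security.GeneralSecurityException;
import java.security.KeyStore;
import javax.net.ssl.KeyManagerFactory;
import javax.net.ssl.SSLContext;
import javax.net.ssl.TrustManagerFactory;

/** Hypothetical helper that builds an SSLContext for mutual (two-way) TLS from loaded keystores. */
final class MutualTlsContextSketch {

    static SSLContext contextFrom(KeyStore keyStore, char[] keyPassword, KeyStore trustStore) {
        try {
            // Key managers supply our own certificate and private key during the handshake.
            KeyManagerFactory keyManagers =
                    KeyManagerFactory.getInstance(KeyManagerFactory.getDefaultAlgorithm());
            keyManagers.init(keyStore, keyPassword);

            // Trust managers decide which peer certificates we are willing to accept.
            TrustManagerFactory trustManagers =
                    TrustManagerFactory.getInstance(TrustManagerFactory.getDefaultAlgorithm());
            trustManagers.init(trustStore);

            SSLContext context = SSLContext.getInstance("TLS");
            context.init(keyManagers.getKeyManagers(), trustManagers.getTrustManagers(), null);
            return context;
        } catch (GeneralSecurityException e) {
            throw new IllegalStateException("Cannot build SSL context", e);
        }
    }
}
```

In this article both roles are served by the same file, client.jks, because the server’s certificate was imported into the client’s keystore, so the same KeyStore instance could be passed for both parameters.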

6. Enabling HTTPS on the client side

The configuration provided in the previous step applies only to communication between the discovery client and the Eureka server. What if we would also like to secure the HTTP endpoints exposed by the client-side application? The first step is pretty much the same as for the discovery server: we need to generate a keystore and set it using Spring Boot properties inside application.yml.

server:
  port: ${PORT:8090}
  ssl:
    enabled: true
    key-store: classpath:client.jks
    key-store-password: 123456
    key-alias: client

During registration we need to “inform” the Eureka server that our application’s endpoints are secured. To achieve this, we should set the property eureka.instance.securePortEnabled to true, and also disable the non-secure port, which is enabled by default, with the nonSecurePortEnabled property.

eureka:
  instance:
    nonSecurePortEnabled: false
    securePortEnabled: true
    securePort: ${server.port}
    statusPageUrl: https://localhost:${server.port}/info
    healthCheckUrl: https://localhost:${server.port}/health
    homePageUrl: https://localhost:${server.port}
  client:
    securePortEnabled: true
    serviceUrl:
      defaultZone: https://localhost:8761/eureka/

7. Running client’s application

Finally, we can run the client-side application. After launching, the application should be visible in the Eureka Dashboard.

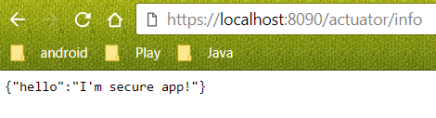

All the client application’s endpoints are registered in Eureka under the HTTPS protocol. I have also overridden the default implementation of the Actuator endpoint /info, as shown in the code fragment below.

@Component
public class SecureInfoContributor implements InfoContributor {

    @Override
    public void contribute(Builder builder) {
        builder.withDetail("hello", "I'm secure app!");
    }

}

Now, we can try to visit /info endpoint one more time. You should see the same information as below.

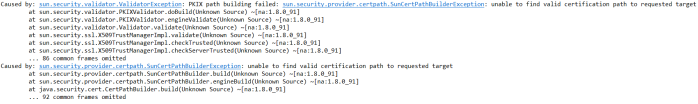

Alternatively, if you configure the client side with a certificate that is not trusted by the server side, you will see the following exception while starting your client application.

Conclusion

Securing the connection between microservices and the Eureka server is only the first step in securing the whole system. We need to think about a secure connection between microservices and the config server, and also between all microservices during inter-service communication with @LoadBalanced RestTemplate or the OpenFeign client. You can find examples of such implementations and many more in my book “Mastering Spring Cloud” (https://www.packtpub.com/application-development/mastering-spring-cloud).

Mastering Spring Cloud

Let me share with you the result of my last couple months of work – the book published on 26th April by Packt. The book Mastering Spring Cloud is strictly linked to the topics frequently published in this blog – it describes how to build microservices using Spring Cloud framework. I tried to create this book in well-known style of writing from this blog, where I focus on giving you the practical samples of working code without unnecessary small-talk and scribbles 🙂 If you like my style of writing, and in addition you are interested in Spring Cloud framework and microservices, this book is just for you 🙂

The book consists of fifteen chapters, in which I guide you from the basic to the most advanced examples illustrating use cases for almost all projects that are part of Spring Cloud. When writing blog posts I do not always have time to go into all the details related to Spring Cloud. I’m trying to describe a lot of different, interesting trends and solutions in the area of Java development. The book describes many details related to the most important areas of Spring Cloud, such as service discovery, distributed configuration, inter-service communication, security, logging, testing, and continuous delivery. It is available on the http://www.packtpub.com site: https://www.packtpub.com/application-development/mastering-spring-cloud. A detailed description of all the topics covered in the book is available on that site.

Personally, I particularly recommend reading the following more advanced subjects described in the book:

- Peer-to-peer replication between multiple instances of Eureka servers, and using zoning mechanism in inter-service communication

- Automatically reloading configuration after changes with Spring Cloud Config push notifications mechanism based on Spring Cloud Bus

- Advanced configuration of inter-service communication with Ribbon client-side load balancer and Feign client

- Enabling SSL secure communication between microservices and basic elements of microservices-based architecture like service discovery or configuration server

- Building messaging microservices based on the publish/subscribe communication model, including consumer grouping, partitioning and scaling with Spring Cloud Stream and message brokers (Apache Kafka, RabbitMQ)

- Setting up continuous delivery for Spring Cloud microservices with Jenkins and Docker

- Using Docker for running Spring Cloud microservices on Kubernetes platform simulated locally by Minikube

- Deploying Spring Cloud microservices on cloud platforms like Pivotal Web Services (Pivotal Cloud Foundry hosted cloud solution) and Heroku

Those examples and many others are available together with this book. At the end, a short description taken from packtpub.com site:

Developing, deploying, and operating cloud applications should be as easy as local applications. This should be the governing principle behind any cloud platform, library, or tool. Spring Cloud–an open-source library–makes it easy to develop JVM applications for the cloud. In this book, you will be introduced to Spring Cloud and will master its features from the application developer’s point of view.

Quick Guide to Microservices with Spring Boot 2.0, Eureka and Spring Cloud

There are many articles on my blog about microservices with Spring Boot and Spring Cloud. The main purpose of this article is to provide a brief summary of the most important components provided by these frameworks that help you create microservices, and in fact to explain what Spring Cloud offers for a microservices architecture. The topics covered in this article are:

- Using Spring Boot 2 in cloud-native development

- Providing service discovery for all microservices with Spring Cloud Netflix Eureka

- Distributed configuration with Spring Cloud Config

- API Gateway pattern using a new project inside Spring Cloud: Spring Cloud Gateway

- Correlating logs with Spring Cloud Sleuth

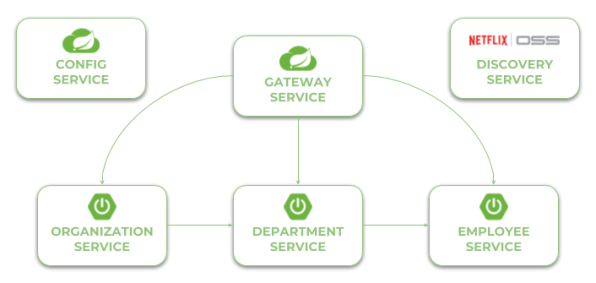

Before we proceed to the source code, let’s take a look at the following diagram. It illustrates the architecture of our sample system. We have three independent microservices, which register themselves in service discovery, fetch properties from the configuration service, and communicate with each other. The whole system is hidden behind an API gateway.

Currently, the newest version of Spring Cloud is Finchley.M9. This version of spring-cloud-dependencies should be declared as a BOM for dependency management.

<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-dependencies</artifactId>
            <version>Finchley.M9</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

Now, let’s consider the further steps to be taken in order to create a working microservices-based system using Spring Cloud. We will begin with the configuration server.

The source code of sample applications presented in this article is available on GitHub in repository https://github.com/piomin/sample-spring-microservices-new.git.

Step 1. Building configuration server with Spring Cloud Config

To enable the Spring Cloud Config feature for an application, first add spring-cloud-config-server to your project dependencies.

<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-config-server</artifactId>
</dependency>

Then, to run the embedded configuration server during application boot, use the @EnableConfigServer annotation.

@SpringBootApplication
@EnableConfigServer
public class ConfigApplication {

    public static void main(String[] args) {
        new SpringApplicationBuilder(ConfigApplication.class).run(args);
    }

}

By default, Spring Cloud Config Server stores the configuration data inside a Git repository. This is a very good choice in production mode, but for the purpose of this sample a file system backend will be enough. It is really easy to start with the config server, because we can place all the properties on the classpath. Spring Cloud Config by default searches for property sources inside the following locations: classpath:/, classpath:/config, file:./, file:./config.

We place all the property sources inside src/main/resources/config. The YAML filename will be the same as the name of the service. For example, the YAML file for discovery-service will be located here: src/main/resources/config/discovery-service.yml.

And two last important things. If you would like to start the config server with the file system backend, you have to activate the Spring Boot profile native. This may be achieved by setting the parameter --spring.profiles.active=native during application boot. I have also changed the default config server port (8888) to 8088 by setting the property server.port in the bootstrap.yml file.

Step 2. Building service discovery with Spring Cloud Netflix Eureka

One more point about the configuration server: all other applications, including discovery-service, now need to add the spring-cloud-starter-config dependency in order to enable the config client. We also have to include the spring-cloud-starter-netflix-eureka-server dependency.

<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-netflix-eureka-server</artifactId>
</dependency>

Then you should enable the embedded discovery server during application boot by placing the @EnableEurekaServer annotation on the main class.

@SpringBootApplication

@EnableEurekaServer

public class DiscoveryApplication {

public static void main(String[] args) {

new SpringApplicationBuilder(DiscoveryApplication.class).run(args);

}

}

The application has to fetch its property source from the configuration server. The minimal configuration required on the client side is an application name and the config server’s connection settings.

    spring:
      application:
        name: discovery-service
      cloud:
        config:
          uri: http://localhost:8088

As I have already mentioned, the configuration file discovery-service.yml should be placed inside the config-service module. A few words about the configuration shown below: we have changed the Eureka port from its default value (8761) to 8061. For a standalone Eureka instance we also have to disable registration and registry fetching.

    server:
      port: 8061
    eureka:
      instance:
        hostname: localhost
      client:
        registerWithEureka: false
        fetchRegistry: false
        serviceUrl:
          defaultZone: http://${eureka.instance.hostname}:${server.port}/eureka/
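The defaultZone value above is built from two property placeholders. As a rough illustration of how such ${...} references expand, here is a plain-Java sketch. It is not Spring's actual resolver, and the class and method names are hypothetical.

```java
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper, for illustration only: expands ${key} references
// from a flat property map. Spring's real resolver is more capable
// (default values, nested placeholders, several property sources).
final class PlaceholderSketch {

    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}]+)\\}");

    static String resolve(String value, Map<String, String> properties) {
        Matcher matcher = PLACEHOLDER.matcher(value);
        StringBuffer result = new StringBuffer();
        while (matcher.find()) {
            String key = matcher.group(1);
            String replacement = properties.get(key);
            if (replacement == null) {
                throw new IllegalArgumentException("Unresolved placeholder: " + key);
            }
            // quoteReplacement keeps '$' and '\' in property values literal
            matcher.appendReplacement(result, Matcher.quoteReplacement(replacement));
        }
        matcher.appendTail(result);
        return result.toString();
    }

    public static void main(String[] args) {
        Map<String, String> properties = Map.of(
                "eureka.instance.hostname", "localhost",
                "server.port", "8061");
        System.out.println(resolve(
                "http://${eureka.instance.hostname}:${server.port}/eureka/", properties));
        // prints http://localhost:8061/eureka/
    }
}
```

With the values from discovery-service.yml, the standalone Eureka server therefore points at its own address.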

Now, when you start the application with the embedded Eureka server, you should see the following logs.

Once you have successfully started the application, you can visit the Eureka Dashboard at http://localhost:8061/.

Step 3. Building microservice using Spring Boot and Spring Cloud

Our microservice has to perform some operations during boot. It needs to fetch configuration from config-service, register itself in discovery-service, expose an HTTP API and automatically generate API documentation. To enable all these mechanisms we need to include some dependencies in pom.xml. To enable the config client we include the starter spring-cloud-starter-config. The discovery client is enabled after including spring-cloud-starter-netflix-eureka-client and annotating the main class with @EnableDiscoveryClient. To make the Spring Boot application generate API documentation we include the springfox-swagger2 dependency and add the @EnableSwagger2 annotation.

Here is the full list of dependencies defined for my sample microservice.

<dependency> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-starter-netflix-eureka-client</artifactId> </dependency> <dependency> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-starter-config</artifactId> </dependency> <dependency> <groupId>org.springframework.boot</groupId> <artifactId>spring-boot-starter-web</artifactId> </dependency> <dependency> <groupId>io.springfox</groupId> <artifactId>springfox-swagger2</artifactId> <version>2.8.0</version> </dependency>

And here is the main class of the application, which enables the discovery client and Swagger2 for the microservice.

@SpringBootApplication

@EnableDiscoveryClient

@EnableSwagger2

public class EmployeeApplication {

public static void main(String[] args) {

SpringApplication.run(EmployeeApplication.class, args);

}

@Bean

public Docket swaggerApi() {

return new Docket(DocumentationType.SWAGGER_2)

.select()

.apis(RequestHandlerSelectors.basePackage("pl.piomin.services.employee.controller"))

.paths(PathSelectors.any())

.build()

.apiInfo(new ApiInfoBuilder().version("1.0").title("Employee API").description("Documentation Employee API v1.0").build());

}

...

}

The application has to fetch its configuration from a remote server, so we only need to provide a bootstrap.yml file with the service name and server URL. In fact, this is an example of the Config First Bootstrap approach, where an application first connects to a config server and takes the discovery server address from a remote property source. There is also Discovery First Bootstrap, where the config server address is fetched from a discovery server.

    spring:
      application:
        name: employee-service
      cloud:
        config:
          uri: http://localhost:8088

There are not many configuration settings. Here’s the application’s configuration file stored on the remote server. It stores only the HTTP port and the Eureka URL. However, I also placed the file employee-service-instance2.yml on the remote config server. It sets a different HTTP port for the application, so you can easily run two instances of the same service locally based on remote properties. You can run the second instance of employee-service on port 9090 by passing the argument spring.profiles.active=instance2 during application startup. With default settings the microservice starts on port 8090.

    server:
      port: 9090
    eureka:
      client:
        serviceUrl:
          defaultZone: http://localhost:8061/eureka/
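The relation between the service name, the active profile and the file picked up from the config server can be sketched in plain Java. This is only an illustration of the {application}-{profile}.yml naming used in this article; the class is hypothetical, and shared files such as application.yml are left out for brevity.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: lists the YAML files consulted for an application,
// most specific first, following the {application}-{profile}.yml convention.
final class ConfigFileNames {

    static List<String> candidates(String application, String profile) {
        List<String> files = new ArrayList<>();
        if (profile != null && !profile.isEmpty()) {
            // profile-specific settings take precedence over the defaults
            files.add(application + "-" + profile + ".yml");
        }
        files.add(application + ".yml");
        return files;
    }

    public static void main(String[] args) {
        System.out.println(candidates("employee-service", "instance2"));
        // prints [employee-service-instance2.yml, employee-service.yml]
        System.out.println(candidates("employee-service", null));
        // prints [employee-service.yml]
    }
}
```

So starting employee-service with the instance2 profile only needs to override server.port; everything else still comes from employee-service.yml.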

Here’s the implementation of the REST controller class. It provides endpoints for adding a new employee and for searching for employees using different filters.

@RestController

public class EmployeeController {

private static final Logger LOGGER = LoggerFactory.getLogger(EmployeeController.class);

@Autowired

EmployeeRepository repository;

@PostMapping

public Employee add(@RequestBody Employee employee) {

LOGGER.info("Employee add: {}", employee);

return repository.add(employee);

}

@GetMapping("/{id}")

public Employee findById(@PathVariable("id") Long id) {

LOGGER.info("Employee find: id={}", id);

return repository.findById(id);

}

@GetMapping

public List<Employee> findAll() {

LOGGER.info("Employee find");

return repository.findAll();

}

@GetMapping("/department/{departmentId}")

public List<Employee> findByDepartment(@PathVariable("departmentId") Long departmentId) {

LOGGER.info("Employee find: departmentId={}", departmentId);

return repository.findByDepartment(departmentId);

}

@GetMapping("/organization/{organizationId}")

public List<Employee> findByOrganization(@PathVariable("organizationId") Long organizationId) {

LOGGER.info("Employee find: organizationId={}", organizationId);

return repository.findByOrganization(organizationId);

}

}

Step 4. Communication between microservices with Spring Cloud Open Feign

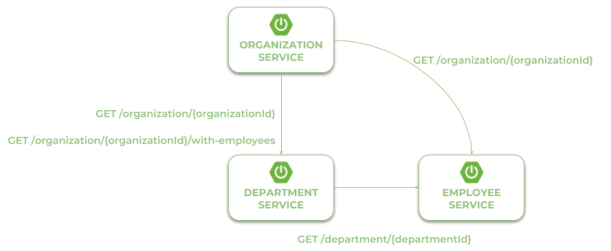

Our first microservice has been created and started. Now we will add other microservices that communicate with each other. The diagram illustrates the communication flow between three sample microservices: organization-service, department-service and employee-service. The organization-service microservice collects the list of departments with (GET /organization/{organizationId}/with-employees) or without employees (GET /organization/{organizationId}) from department-service, and the list of employees, not divided by department, directly from employee-service. The department-service microservice is able to collect the list of employees assigned to a particular department.

In the scenario described above both organization-service and department-service have to locate other microservices and communicate with them. That’s why we need to include an additional dependency in those modules: spring-cloud-starter-openfeign. Spring Cloud Open Feign is a declarative REST client that uses the Ribbon client-side load balancer to communicate with other microservices.

<dependency> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-starter-openfeign</artifactId> </dependency>

An alternative to Open Feign is Spring RestTemplate with @LoadBalanced. However, Feign provides a more elegant way of defining a client, so I prefer it over RestTemplate. After including the required dependency we should also enable Feign clients using the @EnableFeignClients annotation.

@SpringBootApplication

@EnableDiscoveryClient

@EnableFeignClients

@EnableSwagger2

public class OrganizationApplication {

public static void main(String[] args) {

SpringApplication.run(OrganizationApplication.class, args);

}

...

}

Now we need to define the client interfaces. Because organization-service communicates with two other microservices, we create two interfaces, one per microservice. Every client interface should be annotated with @FeignClient. One field of the annotation is required: name. This name should be the same as the name of the target service registered in service discovery. Here’s the interface of the client that calls the endpoint GET /organization/{organizationId} exposed by employee-service.

@FeignClient(name = "employee-service")

public interface EmployeeClient {

@GetMapping("/organization/{organizationId}")

List<Employee> findByOrganization(@PathVariable("organizationId") Long organizationId);

}
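To give a feeling for what "declarative" means here, the following plain-Java sketch backs an annotated interface with a dynamic proxy. It is not how Feign is implemented internally: the @Client and @Get annotations are hypothetical stand-ins for @FeignClient and @GetMapping, and the proxy only returns the request line it would send instead of performing an HTTP call.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Proxy;
import java.util.regex.Matcher;

// Hypothetical stand-ins for @FeignClient and @GetMapping.
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.TYPE)
@interface Client { String name(); }

@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface Get { String value(); }

@Client(name = "employee-service")
interface EmployeeClientSketch {
    @Get("/organization/{organizationId}")
    String findByOrganization(Long organizationId);
}

final class DeclarativeClientSketch {

    // Creates a proxy whose methods describe the request they would issue.
    @SuppressWarnings("unchecked")
    static <T> T create(Class<T> type) {
        String service = type.getAnnotation(Client.class).name();
        return (T) Proxy.newProxyInstance(
                type.getClassLoader(),
                new Class<?>[] {type},
                (proxy, method, arguments) -> {
                    Get mapping = method.getAnnotation(Get.class);
                    if (mapping == null) {
                        throw new UnsupportedOperationException(method.getName());
                    }
                    // substitute the single {variable} with the first argument
                    String path = mapping.value().replaceFirst("\\{[^}]+\\}",
                            Matcher.quoteReplacement(String.valueOf(arguments[0])));
                    return "GET http://" + service + path;
                });
    }

    public static void main(String[] args) {
        EmployeeClientSketch client = create(EmployeeClientSketch.class);
        System.out.println(client.findByOrganization(1L));
        // prints GET http://employee-service/organization/1
    }
}
```

Note that the host part is the service name, not a physical address. In the real setup, resolving that name to an instance is the job of the discovery client and the load balancer.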

The second client interface in organization-service calls two endpoints of department-service. The first, GET /organization/{organizationId}, returns the organization with only the list of available departments, while the second, GET /organization/{organizationId}/with-employees, returns the same data plus the list of employees assigned to every department.

@FeignClient(name = "department-service")

public interface DepartmentClient {

@GetMapping("/organization/{organizationId}")

public List<Department> findByOrganization(@PathVariable("organizationId") Long organizationId);

@GetMapping("/organization/{organizationId}/with-employees")

public List<Department> findByOrganizationWithEmployees(@PathVariable("organizationId") Long organizationId);

}

Finally, we have to inject the Feign client beans into the REST controller. Now we can call the methods defined in DepartmentClient and EmployeeClient, which is equivalent to calling the REST endpoints.

@RestController

public class OrganizationController {

private static final Logger LOGGER = LoggerFactory.getLogger(OrganizationController.class);

@Autowired

OrganizationRepository repository;

@Autowired

DepartmentClient departmentClient;

@Autowired

EmployeeClient employeeClient;

...

@GetMapping("/{id}")

public Organization findById(@PathVariable("id") Long id) {

LOGGER.info("Organization find: id={}", id);

return repository.findById(id);

}

@GetMapping("/{id}/with-departments")

public Organization findByIdWithDepartments(@PathVariable("id") Long id) {

LOGGER.info("Organization find: id={}", id);

Organization organization = repository.findById(id);

organization.setDepartments(departmentClient.findByOrganization(organization.getId()));

return organization;

}

@GetMapping("/{id}/with-departments-and-employees")

public Organization findByIdWithDepartmentsAndEmployees(@PathVariable("id") Long id) {

LOGGER.info("Organization find: id={}", id);

Organization organization = repository.findById(id);

organization.setDepartments(departmentClient.findByOrganizationWithEmployees(organization.getId()));

return organization;

}

@GetMapping("/{id}/with-employees")

public Organization findByIdWithEmployees(@PathVariable("id") Long id) {

LOGGER.info("Organization find: id={}", id);

Organization organization = repository.findById(id);

organization.setEmployees(employeeClient.findByOrganization(organization.getId()));

return organization;

}

}

Step 5. Building API gateway using Spring Cloud Gateway

Spring Cloud Gateway is a relatively new Spring Cloud project. It is built on top of Spring Framework 5, Project Reactor and Spring Boot 2.0. It requires the Netty runtime provided by Spring Boot and Spring WebFlux. It is a really nice alternative to Spring Cloud Netflix Zuul, which until now has been the only Spring Cloud project providing an API gateway for microservices.

The API gateway is implemented in the gateway-service module. First, we include the starter spring-cloud-starter-gateway in the project dependencies.

<dependency> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-starter-gateway</artifactId> </dependency>

We also need the discovery client enabled, because gateway-service integrates with Eureka in order to route requests to the downstream services. The gateway will also expose the API specification of all the endpoints exposed by our sample microservices. That’s why we enabled Swagger2 on the gateway as well.

@SpringBootApplication

@EnableDiscoveryClient

@EnableSwagger2

public class GatewayApplication {

public static void main(String[] args) {

SpringApplication.run(GatewayApplication.class, args);

}

}

Spring Cloud Gateway provides three basic components used for configuration: routes, predicates and filters. A route is the basic building block of the gateway. It contains a destination URI and a list of defined predicates and filters. A predicate is responsible for matching on anything from the incoming HTTP request, such as headers or parameters. A filter may modify the request and the response before and after sending it to downstream services. All these components may be set using configuration properties. We will create the file gateway-service.yml, with the routes defined for our sample microservices, and place it on the configuration server.

But first, we should enable integration with the discovery server for the routes by setting the property spring.cloud.gateway.discovery.locator.enabled to true. Then we may proceed to defining the route rules. We use the Path Route Predicate Factory for matching incoming requests, and the RewritePath GatewayFilter Factory for modifying the requested path to adapt it to the format exposed by downstream services. The uri parameter specifies the name of the target service registered in the discovery server. Let’s take a look at the following route definitions. For example, in order to make organization-service available on the gateway under the path /organization/**, we define the predicate Path=/organization/** and then strip the prefix /organization from the path, because the target service is exposed under the path /**. The address of the target service is fetched from Eureka based on the uri value lb://organization-service.

    spring:
      cloud:
        gateway:
          discovery:
            locator:
              enabled: true
          routes:
          - id: employee-service
            uri: lb://employee-service
            predicates:
            - Path=/employee/**
            filters:
            - RewritePath=/employee/(?<path>.*), /$\{path}
          - id: department-service
            uri: lb://department-service
            predicates:
            - Path=/department/**
            filters:
            - RewritePath=/department/(?<path>.*), /$\{path}
          - id: organization-service
            uri: lb://organization-service
            predicates:
            - Path=/organization/**
            filters:
            - RewritePath=/organization/(?<path>.*), /$\{path}
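The effect of the RewritePath filter can be tried out with nothing more than java.util.regex. The sketch below assumes the filter boils down to a regular-expression replacement with a named group called path. The class is hypothetical, and the matches method is only a rough regex approximation of the Ant-style Path=/employee/** predicate.

```java
import java.util.regex.Pattern;

// Hypothetical illustration of the employee-service route: a named group
// captures everything after the /employee prefix, and the replacement
// /${path} re-emits it at the root.
final class RewritePathSketch {

    private static final Pattern EMPLOYEE = Pattern.compile("/employee/(?<path>.*)");

    // Rough stand-in for the Path=/employee/** predicate.
    static boolean matches(String requestPath) {
        return EMPLOYEE.matcher(requestPath).matches();
    }

    // In Java replacement strings, ${path} refers to the named group.
    static String rewrite(String requestPath) {
        return EMPLOYEE.matcher(requestPath).replaceAll("/${path}");
    }

    public static void main(String[] args) {
        System.out.println(matches("/employee/department/1"));
        // prints true
        System.out.println(rewrite("/employee/department/1"));
        // prints /department/1
        System.out.println(matches("/organization/1"));
        // prints false
    }
}
```

In the YAML file the replacement is written as /$\{path} so that ${path} is not treated as a property placeholder.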

Step 6. Enabling API specification on gateway using Swagger2

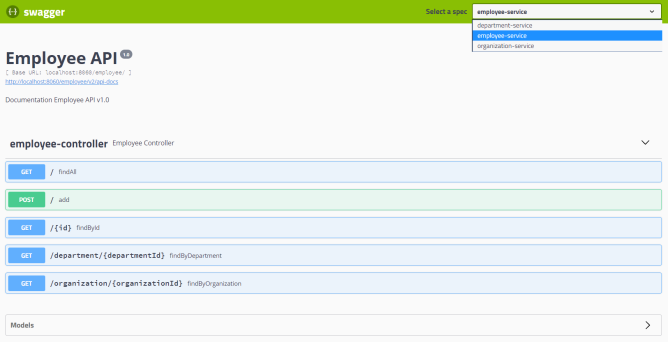

Every Spring Boot microservice annotated with @EnableSwagger2 exposes its Swagger API documentation under the path /v2/api-docs. However, we would like to have that documentation in a single place: on the API gateway. To achieve this we need to provide a bean implementing the SwaggerResourcesProvider interface inside the gateway-service module. That bean is responsible for defining the list of Swagger resource locations that should be displayed by the application. Here’s the implementation of SwaggerResourcesProvider that takes the required locations from service discovery based on the Spring Cloud Gateway configuration properties.

Unfortunately, SpringFox Swagger still does not provide support for Spring WebFlux. This means that if you include the SpringFox Swagger dependencies in the project, the application will fail to start. I hope WebFlux support will be available soon, but for now we have to use Spring Cloud Netflix Zuul as the gateway if we would like to run embedded Swagger2 on it.

I created the module proxy-service, an alternative API gateway based on Netflix Zuul, alongside gateway-service, which is based on Spring Cloud Gateway. Here’s the bean with the SwaggerResourcesProvider implementation available inside proxy-service. It uses the ZuulProperties bean to dynamically load the route definitions.

@Configuration

public class ProxyApi {

@Autowired

ZuulProperties properties;

@Primary

@Bean

public SwaggerResourcesProvider swaggerResourcesProvider() {

return () -> {

List<SwaggerResource> resources = new ArrayList<>();

properties.getRoutes().values().stream()

.forEach(route -> resources.add(createResource(route.getServiceId(), route.getId(), "2.0")));

return resources;

};

}

private SwaggerResource createResource(String name, String location, String version) {

SwaggerResource swaggerResource = new SwaggerResource();

swaggerResource.setName(name);

swaggerResource.setLocation("/" + location + "/v2/api-docs");

swaggerResource.setSwaggerVersion(version);

return swaggerResource;

}

}

Here’s the Swagger UI for our sample microservices system, available at http://localhost:8060/swagger-ui.html.

Step 7. Running applications

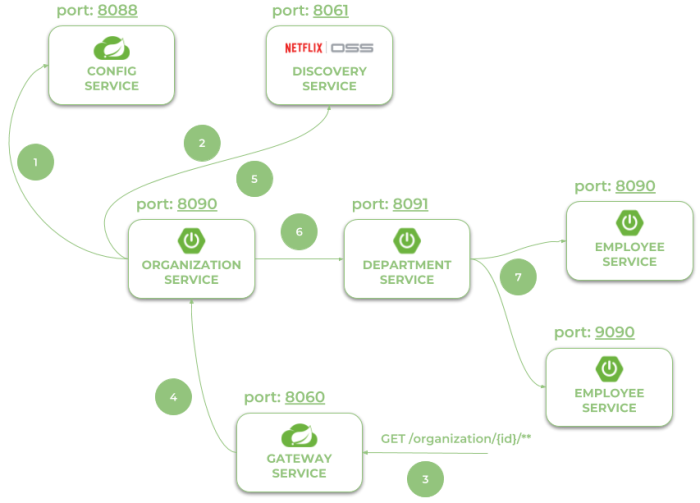

Let’s take a look at the architecture of our system, shown in the following diagram. We will discuss it from the organization-service point of view. After starting, organization-service connects to config-service available at localhost:8088 (1). Based on the remote configuration settings it is able to register itself in Eureka (2). When an endpoint of organization-service is invoked by an external client via the gateway (3), available at localhost:8060, the request is forwarded to an instance of organization-service based on entries from service discovery (4). Then organization-service looks up the address of department-service in Eureka (5) and calls its endpoint (6). Finally, department-service calls an endpoint of employee-service. The request is load balanced between the two available instances of employee-service by Ribbon (7).
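The last step, choosing between the two employee-service instances, can be pictured with a simple round-robin chooser. This is only a sketch under the assumption of a plain round-robin strategy; it does not reproduce Ribbon's actual rules, and the class name is hypothetical. The two addresses are the local ports used for employee-service in this article.

```java
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical client-side load balancer: cycles through the known
// instances of a service in order.
final class RoundRobinSketch {

    private final List<String> instances;
    private final AtomicInteger counter = new AtomicInteger();

    RoundRobinSketch(List<String> instances) {
        if (instances.isEmpty()) {
            throw new IllegalArgumentException("at least one instance is required");
        }
        this.instances = List.copyOf(instances);
    }

    String next() {
        // floorMod keeps the index valid even after the counter overflows
        int index = Math.floorMod(counter.getAndIncrement(), instances.size());
        return instances.get(index);
    }

    public static void main(String[] args) {
        RoundRobinSketch employeeService =
                new RoundRobinSketch(List.of("localhost:8090", "localhost:9090"));
        System.out.println(employeeService.next()); // prints localhost:8090
        System.out.println(employeeService.next()); // prints localhost:9090
        System.out.println(employeeService.next()); // prints localhost:8090
    }
}
```

In the real system the instance list is not hard-coded; it comes from the registry fetched from Eureka.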

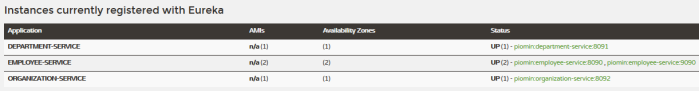

Let’s take a look at the Eureka Dashboard available at http://localhost:8061. There are four instances of microservices registered there: a single instance each of organization-service and department-service, and two instances of employee-service.

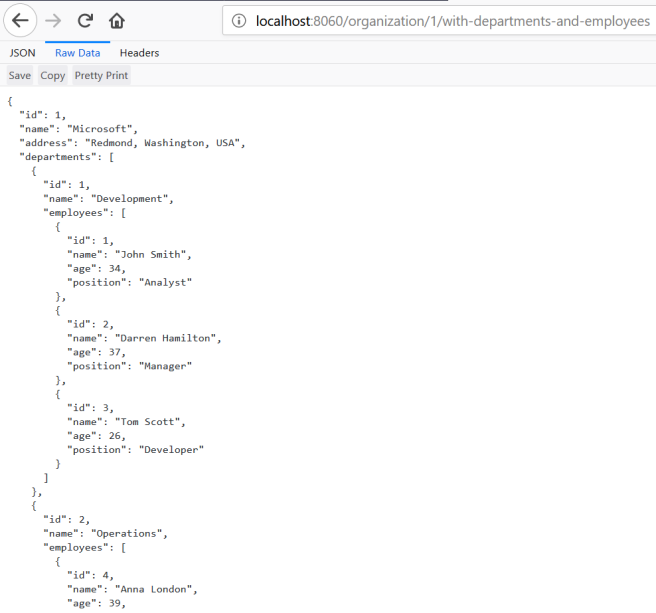

Now, let’s call the endpoint http://localhost:8060/organization/1/with-departments-and-employees.

Step 8. Correlating logs between independent microservices using Spring Cloud Sleuth

Correlating logs between different microservices using Spring Cloud Sleuth is very easy. In fact, the only thing you have to do is add the starter spring-cloud-starter-sleuth to the dependencies of every microservice and the gateway.

<dependency> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-starter-sleuth</artifactId> </dependency>

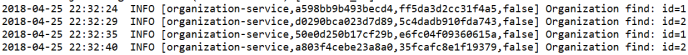

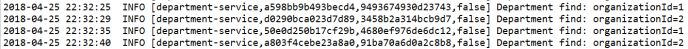

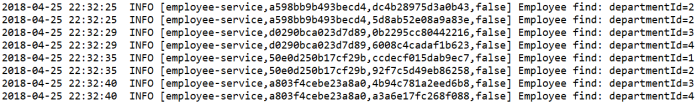

For clarity we will change the default log format a little, to: %d{yyyy-MM-dd HH:mm:ss} ${LOG_LEVEL_PATTERN:-%5p} %m%n. Here are the logs generated by our three sample microservices. There are four entries inside the square brackets [] generated by Spring Cloud Sleuth. The most important for us is the second entry, the traceId, which is set once per incoming HTTP request at the edge of the system.
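The idea behind the traceId can be sketched without Sleuth: one identifier is created at the edge and kept for the whole request, while every hop gets its own span identifier. The class below is hypothetical, and the bracket layout (application name, trace id, span id, export flag) is my assumption about the four entries mentioned above.

```java
import java.util.concurrent.ThreadLocalRandom;

// Hypothetical trace context: the trace id stays constant across services,
// the span id changes with every hop.
final class TraceContextSketch {

    final String traceId;
    final String spanId;

    private TraceContextSketch(String traceId, String spanId) {
        this.traceId = traceId;
        this.spanId = spanId;
    }

    // Called once, at the edge of the system (for example on the gateway).
    static TraceContextSketch newTrace() {
        String id = randomId();
        return new TraceContextSketch(id, id);
    }

    // Called for every downstream call; keeps the trace id, changes the span id.
    TraceContextSketch nextSpan() {
        return new TraceContextSketch(traceId, randomId());
    }

    // Assumed layout of the bracketed log entries.
    String logPrefix(String applicationName) {
        return "[" + applicationName + "," + traceId + "," + spanId + ",false]";
    }

    private static String randomId() {
        // 64-bit identifier rendered as 16 hexadecimal digits
        return String.format("%016x", ThreadLocalRandom.current().nextLong());
    }

    public static void main(String[] args) {
        TraceContextSketch atGateway = newTrace();
        TraceContextSketch atOrganization = atGateway.nextSpan();
        System.out.println(atGateway.logPrefix("gateway-service"));
        System.out.println(atOrganization.logPrefix("organization-service"));
    }
}
```

Filtering the logs of all services by the second entry then yields every line that belongs to one incoming request.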

Spring Cloud Microservices at Pivotal Platform

Imagine you have multiple microservices running on different machines as multiple instances. It seems natural to think about tools that help you monitor and manage all of them. If we add that our microservices are built with the Spring Cloud framework, it seems obvious that we should look at the Pivotal platform. Here is a figure of the platform’s architecture, downloaded from Pivotal’s main site.

Although Pivotal Platform can run applications written in many languages, it has the best support for Spring Cloud Services and Netflix OSS tools, as you can see in the figure above. We can take advantage of what Pivotal offers in three ways.

Pivotal Cloud Foundry – a solution that can be run on a public IaaS or a private cloud, such as AWS, Google Cloud Platform, Microsoft Azure, VMware vSphere or OpenStack.

Pivotal Web Services – a hosted cloud-native platform available at the pivotal.io site.

PCF Dev – an instance that can be run locally as a single virtual machine. It lets you develop apps in an offline environment with basic services installed, such as Spring Cloud Services (SCS), MySQL and Redis databases, and the RabbitMQ broker. If you want to run it locally with SCS you need more than 6GB of free RAM.

The available Spring Cloud Services are Circuit Breaker (Hystrix), Service Registry (Eureka) and a standard Spring configuration server backed by Git.

That’s all I wanted to say about the theory. Let’s move on to practice. The Pivotal website has detailed materials on how to set up the platform and how to create and deploy a simple microservice based on Spring Cloud. In this article I will try to present the essence of those descriptions, based on one of my standard examples from previous posts. As always, the sample source code is available on GitHub. If you are interested in a detailed description of the sample application, microservices and Spring Cloud, read my previous articles:

Part 1: Creating microservice using Spring Cloud, Eureka and Zuul

Part 3: Creating Microservices: Circuit Breaker, Fallback and Load Balancing with Spring Cloud

If you have a lot of free RAM you can install PCF Dev on your local workstation. You need to have VirtualBox installed. Then download and install the Cloud Foundry Command Line Interface (CF CLI) and PCF Dev. All of this is described here. Finally, you can run the command below and take a short coffee break while the virtual machine is downloaded and started.

cf dev start -s scs

For those who do not have enough RAM (like me) there is the Pivotal Web Services platform. It is available here. Before using it you have to register on the Pivotal site. The rest of the article is identical for both options.

Compared with the previous examples of Spring Cloud based microservices, we need to make some changes. There is one additional dependency in every microservice’s pom.xml.

<properties>

...

<spring-cloud-services.version>1.4.1.RELEASE</spring-cloud-services.version>

<spring-cloud.version>Dalston.RELEASE</spring-cloud.version>

</properties>

<dependencies>

<dependency>

<groupId>io.pivotal.spring.cloud</groupId>

<artifactId>spring-cloud-services-starter-service-registry</artifactId>

</dependency>

...

</dependencies>

<dependencyManagement>

<dependencies>

<dependency>

<groupId>org.springframework.cloud</groupId>

<artifactId>spring-cloud-dependencies</artifactId>

<version>${spring-cloud.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

<dependency>

<groupId>io.pivotal.spring.cloud</groupId>

<artifactId>spring-cloud-services-dependencies</artifactId>

<version>${spring-cloud-services.version}</version>

<type>pom</type>

<scope>import</scope>

</dependency>

</dependencies>

</dependencyManagement>

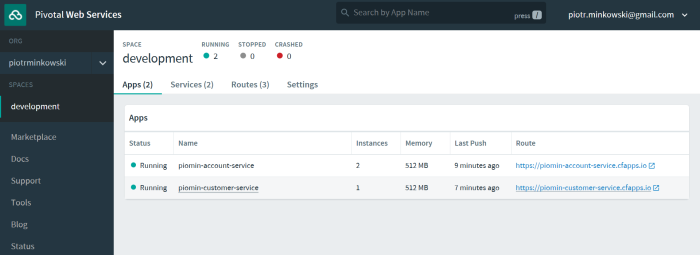

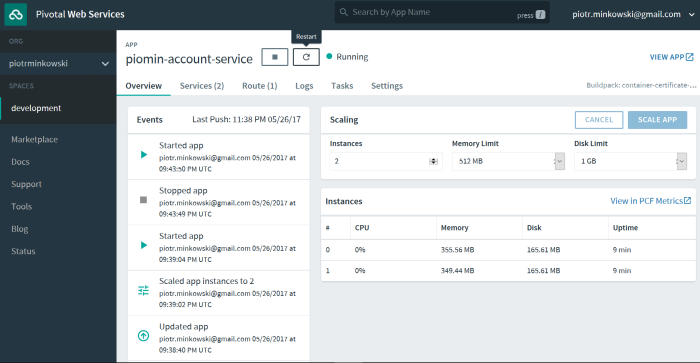

We also use the Maven Cloud Foundry plugin cf-maven-plugin to deploy the application on the Pivotal platform. Here is a sample for account-service. We run two instances of that microservice with a maximum of 512MB of memory. Our application name is piomin-account-service.

<plugin> <groupId>org.cloudfoundry</groupId> <artifactId>cf-maven-plugin</artifactId> <version>1.1.3</version> <configuration> <target>http://api.run.pivotal.io</target> <org>piotrminkowski</org> <space>development</space> <appname>piomin-account-service</appname> <memory>512</memory> <instances>2</instances> <server>cloud-foundry-credentials</server> </configuration> </plugin>

Don’t forget to add the credentials configuration to the Maven settings.xml file.

<server> <id>cloud-foundry-credentials</id> <username>piotr.minkowski@gmail.com</username> <password>***</password> </server>

Now, when building the sample application, we need to append the cf:push command.

mvn clean install cf:push

Here is the circuit breaker implementation inside customer-service.

@Service

public class AccountService {

@Autowired

private AccountClient client;

@HystrixCommand(fallbackMethod = "getEmptyList")

public List<Account> getAccounts(Integer customerId) {

return client.getAccounts(customerId);

}

List<Account> getEmptyList(Integer customerId) {

return new ArrayList<>();

}

}

There is a randomly generated delay on the account service side, so the circuit breaker should be activated for about 25% of calls.

@RequestMapping("/accounts/customer/{customer}")

public List<Account> findByCustomer(@PathVariable("customer") Integer customerId) {

logger.info(String.format("Account.findByCustomer(%s)", customerId));

Random r = new Random();

int rr = r.nextInt(4);

if (rr == 1) {

try {

Thread.sleep(2000);

} catch (InterruptedException e) {

e.printStackTrace();

}

}

return accounts.stream().filter(it -> it.getCustomerId().intValue() == customerId.intValue())

.collect(Collectors.toList());

}
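What happens on the caller's side when the delayed branch is hit can be sketched in plain Java as a call with a timeout and a fallback. This covers only the timeout-plus-fallback part of what Hystrix does (it does not track failure rates or open a circuit), and the class, method names and timeout values are assumptions chosen for illustration.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Supplier;

// Hypothetical helper: runs a call asynchronously and returns the fallback
// value when the call fails or does not finish within the timeout.
final class FallbackSketch {

    static <T> T callWithFallback(Supplier<T> call, long timeoutMillis, Supplier<T> fallback) {
        CompletableFuture<T> future = CompletableFuture.supplyAsync(call);
        try {
            return future.get(timeoutMillis, TimeUnit.MILLISECONDS);
        } catch (TimeoutException | ExecutionException e) {
            future.cancel(true);
            return fallback.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return fallback.get();
        }
    }

    // Simulates the slow branch of the account endpoint.
    static String slowCall() {
        try {
            Thread.sleep(500);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return "accounts";
    }

    public static void main(String[] args) {
        System.out.println(callWithFallback(() -> "accounts", 100, () -> "empty list"));
        // prints accounts
        System.out.println(callWithFallback(FallbackSketch::slowCall, 100, () -> "empty list"));
        // prints empty list
    }
}
```

Since r.nextInt(4) returns 1 in one case out of four, roughly every fourth call takes the slow path and ends in the fallback, provided the configured timeout is shorter than the two-second sleep.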

After successfully deploying the application using the Maven cf:push command we can go to the Pivotal Web Services console available at https://console.run.pivotal.io/. Here are our two deployed applications: two instances of piomin-account-service and one instance of piomin-customer-service.

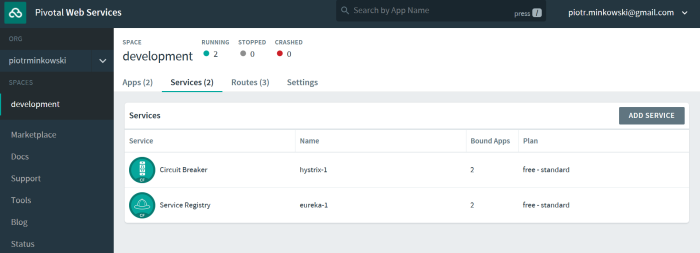

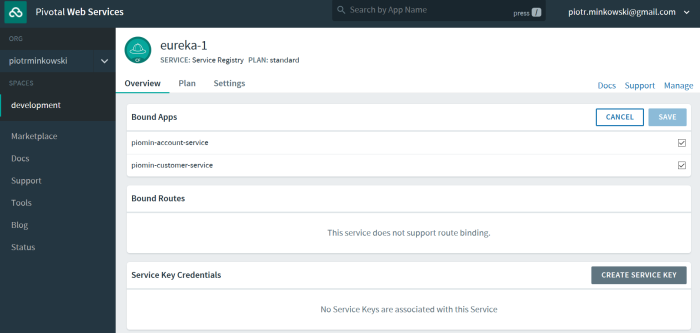

I have also activated Circuit Breaker and Service Registry from the Marketplace.

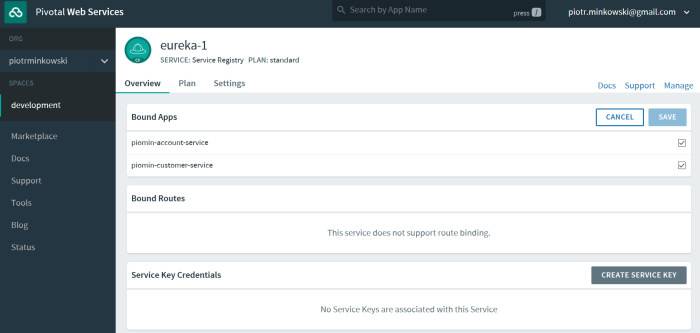

Every application needs to be bound to a service. To do that, select the service, then expand the Bound Apps section and select the checkbox next to each service name.

After this step the applications need to be restarted. This can also be done from the web dashboard inside each service.

Finally, all services are registered in Eureka and we can perform some tests using customer endpoint https://piomin-customer-service.cfapps.io/customers/{id}.

Final words

With the Pivotal solution we can easily deploy, scale and monitor our microservices. Deployment and scaling can be done using the Maven plugin or via the web dashboard. Pivotal also offers services prepared especially for microservices, such as a service registry, circuit breaker and configuration server. Pivotal competes with solutions like Kubernetes, which is based on Docker containerization (more about these tools here). It is especially useful if you are building microservices based on the Spring Boot and Spring Cloud frameworks.