Preface

Serverless is now one of the hottest trends in the IT world. A more accurate name for it is Function as a Service (FaaS). Have you ever tried to expose your APIs in the cloud? Before serverless, I had to create a Linux virtual machine on the cloud provider’s infrastructure, and then deploy and run an application implemented in, for example, Node.js or Java. With serverless, you do not have to type a single Linux command.

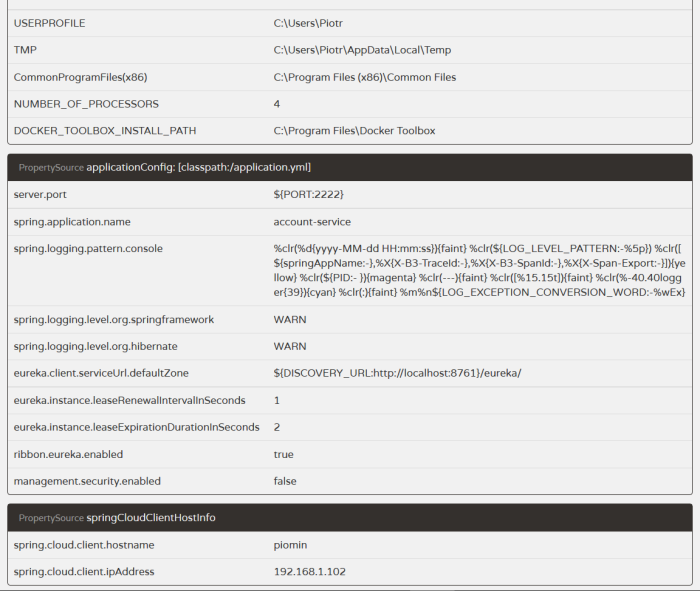

How is serverless different from another very popular topic, microservices? To illustrate the difference, serverless functions are often referred to as nanoservices. For example, if we would like to create a microservice that provides an API for CRUD operations on a database table, that API would have several endpoints: searching (GET /{id}), updating (PUT), removing (DELETE), inserting (POST), and maybe a few more for searching by different input criteria. In a serverless architecture, all of those endpoints would be independent functions, created and deployed separately. While a microservice can be built on on-premise infrastructure, for example with Spring Boot, serverless is closely tied to cloud infrastructure.
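To make the nanoservices idea a bit more tangible, here is a minimal plain-Java sketch. It is not AWS code: the route strings, the in-memory table and the class name are my assumptions for illustration only. Each operation is its own small function, and a route table in front of them plays the role of the gateway.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Function;

// Illustration only: each "endpoint" is its own small function registered under
// a route key. In a real deployment every function would be packaged and
// deployed separately; one map is used here just to keep the sketch runnable.
class NanoservicesSketch {

    // Hypothetical in-memory "table": account id -> account number.
    static final Map<String, String> TABLE = new LinkedHashMap<>();

    // One function per operation; none of them knows about the others.
    static final Function<String, String> GET_ACCOUNT =
            id -> TABLE.getOrDefault(id, "not found");
    static final Function<String, String> DELETE_ACCOUNT =
            id -> TABLE.remove(id) != null ? "deleted" : "not found";

    // Route table standing in for the gateway placed in front of the functions.
    static final Map<String, Function<String, String>> ROUTES = new LinkedHashMap<>();

    static {
        ROUTES.put("GET /account/{id}", GET_ACCOUNT);
        ROUTES.put("DELETE /account/{id}", DELETE_ACCOUNT);
    }

    // Looks up the function mapped to the route and calls it with the id.
    static String call(String route, String id) {
        Function<String, String> fn = ROUTES.get(route);
        if (fn == null) {
            throw new IllegalArgumentException("No function mapped to " + route);
        }
        return fn.apply(id);
    }

    public static void main(String[] args) {
        TABLE.put("1", "1234567890");
        System.out.println(call("GET /account/{id}", "1"));
        System.out.println(call("DELETE /account/{id}", "1"));
        System.out.println(call("GET /account/{id}", "1"));
    }
}
```

In a microservice all of these operations would live in one deployable unit; here, removing or replacing one function does not touch the others.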

Implementing a custom function with the cloud provider’s tools is really quick and easy. I’ll try to show it using sample functions deployed on AWS with AWS Lambda. The sample application source code is available on GitHub.

How it works

Here’s the description of AWS Lambda from the Amazon site.

AWS Lambda lets you run code without provisioning or managing servers. You pay only for the compute time you consume – there is no charge when your code is not running. With Lambda, you can run code for virtually any type of application or backend service – all with zero administration. Just upload your code and Lambda takes care of everything required to run and scale your code with high availability. You can set up your code to automatically trigger from other AWS services or call it directly from any web or mobile app.

AWS Lambda is a compute platform for many application scenarios. It supports applications written in Node.js, Java, C# and Python. The platform also offers other services, such as DynamoDB (NoSQL database), Kinesis (streaming service), CloudWatch (monitoring and logs), Redshift (data warehouse), S3 (cloud storage) and API Gateway. Events coming from those services can trigger your Lambda function. You can also interact with those services using the AWS SDK.

Preparation

Enough theory; most of us prefer concrete examples 🙂 First of all, we need to set up an AWS account. AWS has a web management console available here, and there is also a command line client called AWS CLI, which can be downloaded here. There are also some other tools we can use to deploy our functions to AWS; I will tell you about them later. To be able to use them, including the command line client, we need to generate an access key. Go to the web console and select My Security Credentials on your profile, then select Continue to Security Credentials and expand Access Keys. Create your new access key and save it to disk. It has two fields: Access Key ID and Secret Access Key. If you would like to use AWS CLI, first type aws configure and then provide those keys, the default region and the output format (for example JSON or text).

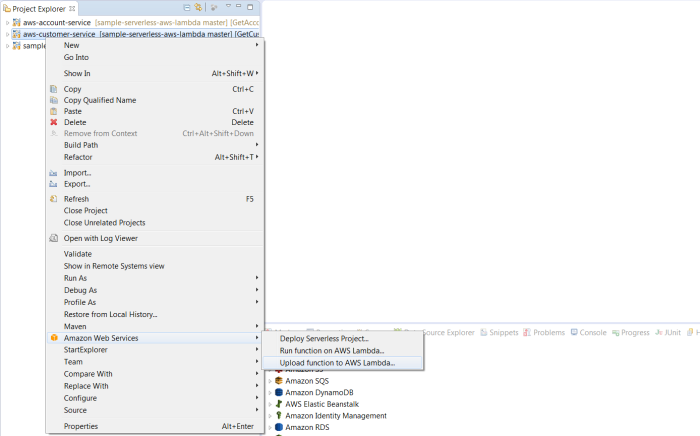

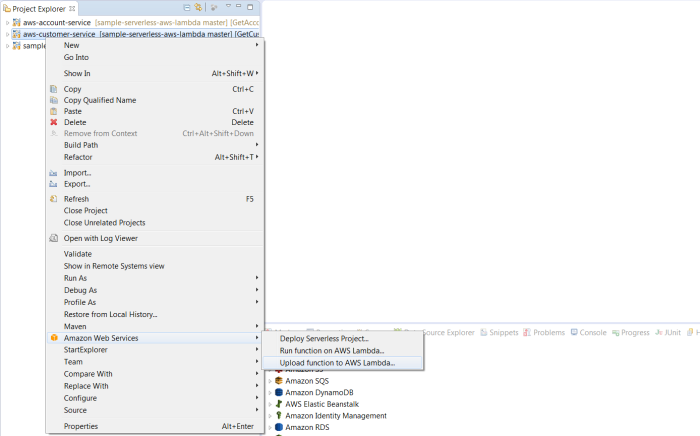

You can use AWS CLI or even the web console to deploy your Lambda function to the cloud. However, I will show you other (in my opinion better :)) solutions. If you are using Eclipse for development, the best option is to download the AWS Toolkit plugin. After installing the Eclipse plugin you need to provide the Access Key ID and Secret Access Key. With it, I’m able to upload my function to AWS Lambda or even create or modify a table in Amazon DynamoDB. You get the AWS Management perspective, where you can see all your AWS resources including Lambda functions, DynamoDB tables, identity management and other services like S3, SNS or SQS. You can create a special AWS Java Project or work with a standard Maven project. Just right-click the project to display its menu, then select Amazon Web Services and Upload function to AWS Lambda…

After selecting Upload function to AWS Lambda… you should see the window shown below. You can choose the region for your deployment (us-east-1 by default), the IAM role and, most importantly, the name of your Lambda function. We can create a new function or update an existing one.

Another interesting option for uploading functions to AWS Lambda is a Maven plugin. With lambda-maven-plugin we can define security credentials and all definitions of our functions in JSON format. Here’s the plugin declaration in pom.xml. The plugin can be invoked during the Maven project build with mvn clean install lambda:deploy-lambda. Dependencies should be packaged into the output JAR file; that’s why maven-shade-plugin is used during the build.

    <plugin>
        <groupId>com.github.seanroy</groupId>
        <artifactId>lambda-maven-plugin</artifactId>
        <version>2.2.1</version>
        <configuration>
            <accessKey>${aws.accessKey}</accessKey>
            <secretKey>${aws.secretKey}</secretKey>
            <functionCode>${project.build.directory}/${project.build.finalName}.jar</functionCode>
            <version>${project.version}</version>
            <lambdaRoleArn>arn:aws:iam::436521214155:role/lambda_basic_execution</lambdaRoleArn>
            <s3Bucket>lambda-function-bucket-us-east-1-1498055423860</s3Bucket>
            <publish>true</publish>
            <forceUpdate>true</forceUpdate>
            <lambdaFunctionsJSON>
                [
                    {
                        "functionName": "PostAccountFunction",
                        "description": "POST account",
                        "handler": "pl.piomin.services.aws.account.add.PostAccount",
                        "timeout": 30,
                        "memorySize": 256,
                        "keepAlive": 10
                    },
                    {
                        "functionName": "GetAccountFunction",
                        "description": "GET account",
                        "handler": "pl.piomin.services.aws.account.find.GetAccount",
                        "timeout": 30,
                        "memorySize": 256,
                        "keepAlive": 30
                    },
                    {
                        "functionName": "GetAccountsByCustomerIdFunction",
                        "description": "GET accountsCustomerId",
                        "handler": "pl.piomin.services.aws.account.find.GetAccountsByCustomerId",
                        "timeout": 30,
                        "memorySize": 256,
                        "keepAlive": 30
                    }
                ]
            </lambdaFunctionsJSON>
        </configuration>
    </plugin>

Lambda functions implementation

I implemented the sample AWS Lambda functions in Java. Here’s the list of dependencies inside pom.xml.

    <dependencies>
        <dependency>
            <groupId>com.amazonaws</groupId>
            <artifactId>aws-lambda-java-events</artifactId>
            <version>1.3.0</version>
        </dependency>
        <dependency>
            <groupId>com.amazonaws</groupId>
            <artifactId>aws-lambda-java-core</artifactId>
            <version>1.1.0</version>
        </dependency>
        <dependency>
            <groupId>com.amazonaws</groupId>
            <artifactId>aws-lambda-java-log4j</artifactId>
            <version>1.0.0</version>
        </dependency>
        <dependency>
            <groupId>com.amazonaws</groupId>
            <artifactId>aws-java-sdk-s3</artifactId>
            <version>1.11.152</version>
        </dependency>
        <dependency>
            <groupId>com.amazonaws</groupId>
            <artifactId>aws-java-sdk-lambda</artifactId>
            <version>1.11.152</version>
        </dependency>
    </dependencies>

Every function connects to Amazon DynamoDB. There are two tables created for this sample: account and customer. One customer can have more than one account, and this relationship is implemented through the customerId field in the account table. The AWS library for DynamoDB provides an object mapping mechanism similar to ORM. Here’s the Account entity definition. Using annotations we can declare the table name, hash key, index and table attributes.

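    import java.io.Serializable;

    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBAttribute;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBAutoGeneratedKey;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBHashKey;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBIndexHashKey;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBTable;

    // Entity mapped to the "account" table.
    @DynamoDBTable(tableName = "account")
    public class Account implements Serializable {

        private static final long serialVersionUID = 8331074361667921244L;

        private String id;
        private String number;
        private String customerId;

        public Account() {
        }

        public Account(String id, String number, String customerId) {
            this.id = id;
            this.number = number;
            this.customerId = customerId;
        }

        // Hash key of the table; generated automatically when the entity is saved without an id.
        @DynamoDBHashKey(attributeName = "id")
        @DynamoDBAutoGeneratedKey
        public String getId() {
            return id;
        }

        public void setId(String id) {
            this.id = id;
        }

        @DynamoDBAttribute(attributeName = "number")
        public String getNumber() {
            return number;
        }

        public void setNumber(String number) {
            this.number = number;
        }

        // Hash key of the Customer-Index global secondary index, used to find accounts by customer.
        @DynamoDBIndexHashKey(attributeName = "customerId", globalSecondaryIndexName = "Customer-Index")
        public String getCustomerId() {
            return customerId;
        }

        public void setCustomerId(String customerId) {
            this.customerId = customerId;
        }

    }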
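If the roles of the hash key and the Customer-Index are not obvious, the following plain-Java sketch may help. It is only an in-memory analogy and not DynamoDB code: the class and method names are made up for illustration. The primary map is keyed by id (the hash key), and a second map keyed by customerId does the job of the global secondary index.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.UUID;

// In-memory analogy of the "account" table: a primary map keyed by id (the hash
// key) and a second map keyed by customerId (the role of Customer-Index).
class AccountTableSketch {

    static final class Row {
        final String id;
        final String number;
        final String customerId;

        Row(String id, String number, String customerId) {
            this.id = id;
            this.number = number;
            this.customerId = customerId;
        }
    }

    private final Map<String, Row> byId = new HashMap<>();
    private final Map<String, List<Row>> byCustomerId = new HashMap<>();

    // Mimics saving with an auto-generated key: a missing id becomes a random UUID.
    Row save(String id, String number, String customerId) {
        String key = (id == null) ? UUID.randomUUID().toString() : id;
        Row row = new Row(key, number, customerId);
        Row previous = byId.put(key, row);
        if (previous != null) {
            // Keep the secondary map consistent when a row is overwritten.
            byCustomerId.get(previous.customerId).remove(previous);
        }
        byCustomerId.computeIfAbsent(customerId, k -> new ArrayList<>()).add(row);
        return row;
    }

    // Lookup by hash key: at most one row.
    Row findById(String id) {
        return byId.get(id);
    }

    // Lookup through the "index": any number of rows for one customer.
    List<Row> findByCustomerId(String customerId) {
        return new ArrayList<>(byCustomerId.getOrDefault(customerId, Collections.emptyList()));
    }

    public static void main(String[] args) {
        AccountTableSketch table = new AccountTableSketch();
        table.save(null, "1234567890", "customer-1");
        table.save(null, "0987654321", "customer-1");
        System.out.println(table.findByCustomerId("customer-1").size());
    }
}
```

Without the second map, finding all accounts of a customer would mean scanning every row, which is the reason the entity declares an index on customerId.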

The sample application contains five Lambda functions:

PostAccountFunction – receives an Account object from the request and inserts it into the table

GetAccountFunction – finds an account by its hash key, the id attribute

GetAccountsByCustomerIdFunction – finds the list of accounts for the given customerId

PostCustomerFunction – receives a Customer object from the request and inserts it into the table

GetCustomerFunction – finds a customer by its hash key, the id attribute

Every AWS Lambda function handler needs to implement the RequestHandler interface with a single method, handleRequest. Here’s the PostAccount handler class. It connects to DynamoDB using the Amazon client and creates the DynamoDBMapper object mapper, which saves the input entity in the database.

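    import com.amazonaws.regions.Region;
    import com.amazonaws.regions.Regions;
    import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapper;
    import com.amazonaws.services.lambda.runtime.Context;
    import com.amazonaws.services.lambda.runtime.LambdaLogger;
    import com.amazonaws.services.lambda.runtime.RequestHandler;

    public class PostAccount implements RequestHandler<Account, Account> {

        private DynamoDBMapper mapper;

        public PostAccount() {
            // Create the DynamoDB client and the object mapper once, in the constructor.
            AmazonDynamoDBClient client = new AmazonDynamoDBClient();
            client.setRegion(Region.getRegion(Regions.US_EAST_1));
            mapper = new DynamoDBMapper(client);
        }

        @Override
        public Account handleRequest(Account a, Context ctx) {
            LambdaLogger logger = ctx.getLogger();
            // save() also fills in the auto-generated id on the passed entity.
            mapper.save(a);
            logger.log("Account: " + a.getId());
            return a;
        }

    }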
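One detail in the handler above is worth a short illustration: the client and the mapper are created in the constructor, not inside handleRequest. As far as I understand the Lambda execution model, a handler instance can be reused for several invocations, so setup done in the constructor does not have to be repeated on every call. The plain-Java sketch below shows only that idea. The Handler interface, FakeClient and SaveHandler are local stand-ins I made up for illustration, not AWS classes, and the logger parameter replaces the Lambda Context.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

class HandlerLifecycleSketch {

    // Local stand-in with the same shape as a request handler: input in, output out.
    interface Handler<I, O> {
        O handleRequest(I input, Consumer<String> logger);
    }

    // Counts how many times the (pretend) expensive client has been created.
    static int clientsCreated = 0;

    // Stand-in for a database client; it only remembers what was saved.
    static final class FakeClient {
        final List<String> saved = new ArrayList<>();

        FakeClient() {
            clientsCreated++;
        }

        void save(String item) {
            saved.add(item);
        }
    }

    static final class SaveHandler implements Handler<String, String> {
        final FakeClient client;

        SaveHandler() {
            // Setup happens once per handler instance, not once per request.
            client = new FakeClient();
        }

        @Override
        public String handleRequest(String input, Consumer<String> logger) {
            client.save(input);
            logger.accept("Saved: " + input);
            return input;
        }
    }

    public static void main(String[] args) {
        SaveHandler handler = new SaveHandler();
        handler.handleRequest("account-1", System.out::println);
        handler.handleRequest("account-2", System.out::println);
        System.out.println("Clients created: " + clientsCreated);
    }
}
```

The same structure also makes the handler logic easy to exercise locally, because the invocation itself needs nothing more than an input object and something to log to.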

The GetCustomer function not only interacts with DynamoDB, but also invokes the GetAccountsByCustomerId function. This may not be the best example of the need to call another function, because it could retrieve the data directly from the account table. But I wanted to separate the data layer from the function logic and just show how invoking another function works in AWS Lambda.

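    import java.util.List;

    import com.amazonaws.regions.Region;
    import com.amazonaws.regions.Regions;
    import com.amazonaws.services.dynamodbv2.AmazonDynamoDBClient;
    import com.amazonaws.services.dynamodbv2.datamodeling.DynamoDBMapper;
    import com.amazonaws.services.lambda.AWSLambdaClientBuilder;
    import com.amazonaws.services.lambda.invoke.LambdaInvokerFactory;
    import com.amazonaws.services.lambda.runtime.Context;
    import com.amazonaws.services.lambda.runtime.LambdaLogger;
    import com.amazonaws.services.lambda.runtime.RequestHandler;

    public class GetCustomer implements RequestHandler<Customer, Customer> {

        private DynamoDBMapper mapper;
        private AccountService accountService;

        public GetCustomer() {
            AmazonDynamoDBClient client = new AmazonDynamoDBClient();
            client.setRegion(Region.getRegion(Regions.US_EAST_1));
            mapper = new DynamoDBMapper(client);
            // Build a proxy that invokes another Lambda function through the AccountService interface.
            accountService = LambdaInvokerFactory.builder()
                    .lambdaClient(AWSLambdaClientBuilder.defaultClient())
                    .build(AccountService.class);
        }

        @Override
        public Customer handleRequest(Customer customer, Context ctx) {
            LambdaLogger logger = ctx.getLogger();
            logger.log("Customer: " + customer.getId());
            customer = mapper.load(Customer.class, customer.getId());
            // Only customerId is set; the Account class shown above has no single-argument constructor.
            List<Account> aa = accountService.getAccountsByCustomerId(new Account(null, null, customer.getId()));
            customer.setAccounts(aa);
            return customer;
        }

    }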

AccountService is an interface. It uses the @LambdaFunction annotation to declare the name of the function invoked in the cloud.

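    import java.util.List;

    import com.amazonaws.services.lambda.invoke.LambdaFunction;

    public interface AccountService {

        // Name of the deployed Lambda function that this method invokes.
        @LambdaFunction(functionName = "GetAccountsByCustomerIdFunction")
        List<Account> getAccountsByCustomerId(Account account);

    }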
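It may look surprising that an interface without any implementation can be called at all. The sketch below shows one way such an annotation-driven invoker could work, using a standard Java dynamic proxy. To be clear, this is not the SDK’s implementation: the @RemoteFunction annotation, the AccountLookup interface and the local DEPLOYED registry are hypothetical stand-ins, and the "remote" functions are ordinary lambdas in the same JVM.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.Proxy;
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Function;

class InvokerProxySketch {

    // Hypothetical annotation standing in for @LambdaFunction.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface RemoteFunction {
        String functionName();
    }

    // Same shape as AccountService, with plain strings to keep the sketch small.
    interface AccountLookup {
        @RemoteFunction(functionName = "GetAccountsByCustomerIdFunction")
        List<String> getAccountsByCustomerId(String customerId);
    }

    // Local registry standing in for the functions deployed in the cloud.
    static final Map<String, Function<Object, Object>> DEPLOYED = new HashMap<>();

    // Builds an implementation of the interface at runtime: every call is
    // forwarded to the function named in the annotation of the called method.
    static <T> T build(Class<T> type) {
        Object proxy = Proxy.newProxyInstance(
                type.getClassLoader(),
                new Class<?>[] {type},
                (instance, method, arguments) -> {
                    RemoteFunction target = method.getAnnotation(RemoteFunction.class);
                    if (target == null) {
                        throw new UnsupportedOperationException(method.getName());
                    }
                    Function<Object, Object> fn = DEPLOYED.get(target.functionName());
                    if (fn == null) {
                        throw new IllegalStateException("Unknown function " + target.functionName());
                    }
                    return fn.apply(arguments[0]);
                });
        return type.cast(proxy);
    }

    public static void main(String[] args) {
        DEPLOYED.put("GetAccountsByCustomerIdFunction",
                customerId -> Arrays.asList(customerId + "-acc-1", customerId + "-acc-2"));
        AccountLookup lookup = build(AccountLookup.class);
        System.out.println(lookup.getAccountsByCustomerId("c1"));
    }
}
```

The caller only sees a typed Java method, while the function name stays in one place, the annotation. A real invoker would additionally serialize the argument, call the Lambda service and deserialize the response.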

API Configuration

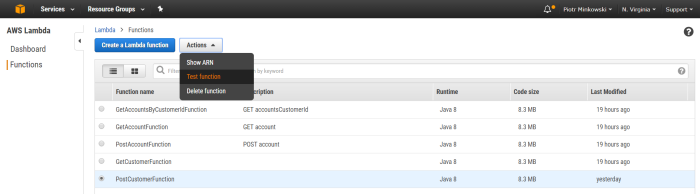

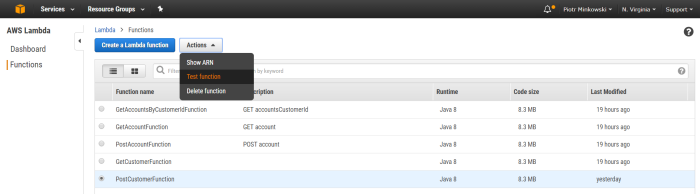

I assume that you have already uploaded your Lambda functions. Now you can go to the AWS web console and see the full list of them in the AWS Lambda section. Every function can be tested by selecting it in the functions list and calling the Test function action.

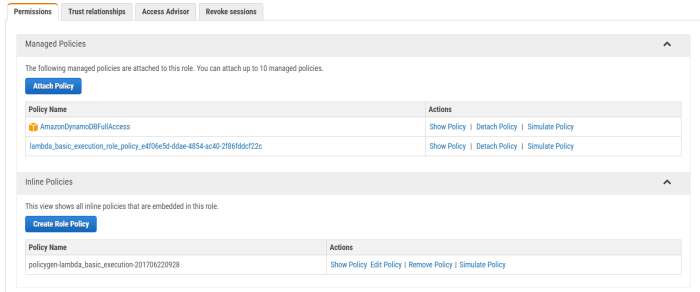

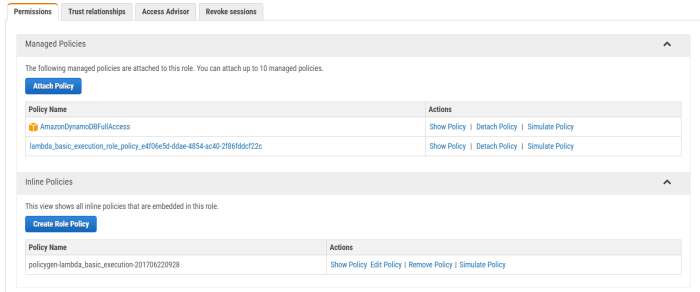

If you didn’t configure role permissions, you probably got an error while trying to call your Lambda function. I attached the AmazonDynamoDBFullAccess policy to the main lambda_basic_execution role to allow the Amazon DynamoDB connection. Then I created a new inline policy to enable invoking GetAccountsByCustomerIdFunction from another Lambda function, as you can see in the figure below. If you retry your tests now, everything should work fine.

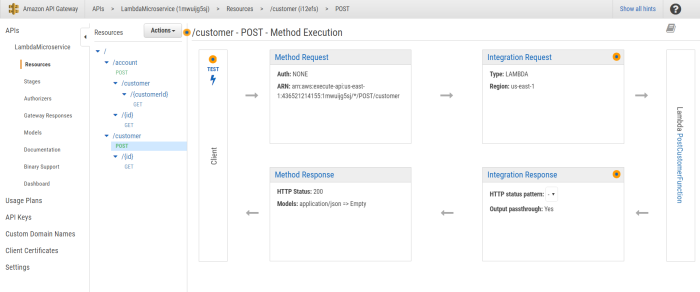

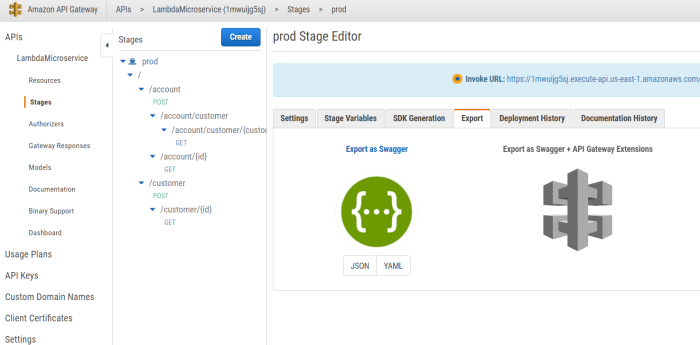

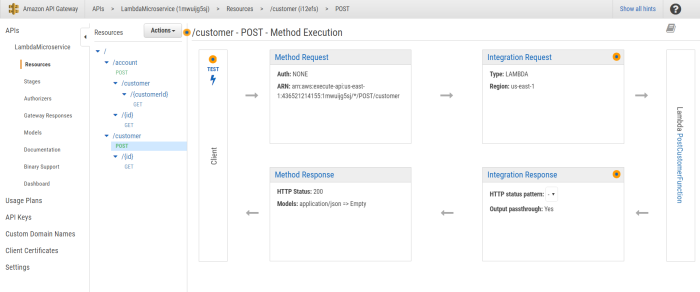

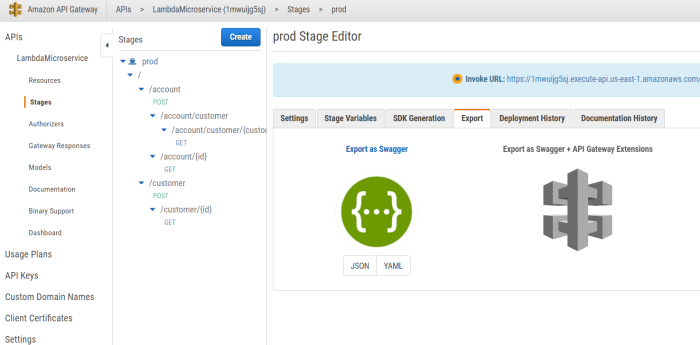

Well, now we are able to test our functions from the AWS Lambda web test console. But our main goal is to invoke them from an outside client, for example a REST client. Fortunately, there is a component called API Gateway, which can be configured to proxy HTTP requests to our Lambda functions. Here’s a figure with our API configuration; for example, POST /customer is mapped to PostCustomerFunction, GET /customer/{id} is mapped to GetCustomerFunction, etc.

You can configure model definitions and set them as input or output types for the API.

    {
        "title": "Account",
        "type": "object",
        "properties": {
            "id": {
                "type": "string"
            },
            "number": {
                "type": "string"
            },
            "customerId": {
                "type": "string"
            }
        }
    }

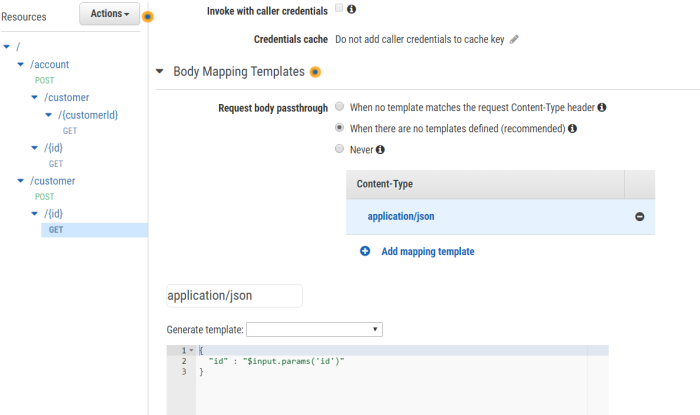

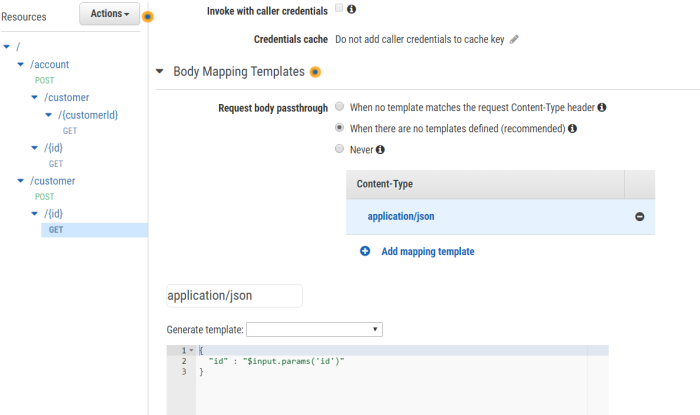

For GET requests the configuration is a little more complicated. We have to map the path parameter into the JSON object that is the input of the Lambda function. Select the Integration Request element and then go to the Body Mapping Templates section.
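In API Gateway this mapping is written as a template, typically something of the form { "id": "$input.params('id')" }. To show what the mapping effectively does, here is a small plain-Java sketch. It only mimics the effect and is not API Gateway code: the class and method names are my own, and a real template is evaluated by the gateway, not by your function.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class PathMappingSketch {

    // Extracts named values from a path, given a template such as "/customer/{id}".
    static Map<String, String> pathParams(String template, String path) {
        String[] templateParts = template.split("/");
        String[] pathParts = path.split("/");
        if (templateParts.length != pathParts.length) {
            throw new IllegalArgumentException("Path does not match template");
        }
        Map<String, String> params = new LinkedHashMap<>();
        for (int i = 0; i < templateParts.length; i++) {
            String part = templateParts[i];
            if (part.startsWith("{") && part.endsWith("}")) {
                // "{id}" in the template captures the value at the same position.
                params.put(part.substring(1, part.length() - 1), pathParts[i]);
            } else if (!part.equals(pathParts[i])) {
                throw new IllegalArgumentException("Path does not match template");
            }
        }
        return params;
    }

    // Builds the JSON body handed to the function from the captured parameters.
    static String toJsonBody(Map<String, String> params) {
        StringBuilder json = new StringBuilder("{");
        for (Map.Entry<String, String> entry : params.entrySet()) {
            if (json.length() > 1) {
                json.append(',');
            }
            json.append('"').append(escape(entry.getKey())).append("\":\"")
                .append(escape(entry.getValue())).append('"');
        }
        return json.append('}').toString();
    }

    // Minimal escaping of backslashes and quotes for string values.
    static String escape(String value) {
        return value.replace("\\", "\\\\").replace("\"", "\\\"");
    }

    public static void main(String[] args) {
        Map<String, String> params = pathParams("/customer/{id}", "/customer/123");
        System.out.println(toJsonBody(params));
    }
}
```

The resulting JSON is what gets deserialized into the handler’s input type, so for GET /customer/{id} the GetCustomer handler receives a Customer object with only the id set.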

Our API can also be exported as a Swagger JSON definition. If you are not familiar with it, take a look at my previous article, Microservices API Documentation with Swagger2.

Final words

In this article I described step by step how to create an API based on the AWS Lambda serverless solution. I showed the obvious advantages of this approach, such as no need to manage servers yourself, the ability to easily deploy applications in the cloud, and configuration and monitoring fully based on the tools provided by the AWS web console. You can easily extend my sample with other services, for example Kinesis to enable data stream processing. In my opinion, serverless is the perfect solution for exposing simple APIs in the cloud.