I have already provided a quick introduction to building Spring Boot applications with Ethereum and web3j in one of my recent articles, Introduction to Blockchain with Java using Ethereum, web3j and Spring Boot. That article attracted a lot of interest, so I decided to describe some more advanced aspects of Ethereum and web3j. Today I'm going to show how you can implement Ethereum smart contracts in your application. First, let's define what exactly a smart contract is.

A smart contract is just a program executed on the EVM (Ethereum Virtual Machine). Each contract contains a collection of code (functions) and data. It has an address in the Ethereum blockchain, and it can interact with other contracts, make decisions, store data, and send ether to others. Ethereum smart contracts are usually written in Solidity, a statically typed, high-level language. Every contract needs to be compiled. After that, you can generate source code for your application based on the compiled binaries; the web3j library provides tools dedicated to that. Before we proceed to the source code, let's discuss the architecture of our sample system.

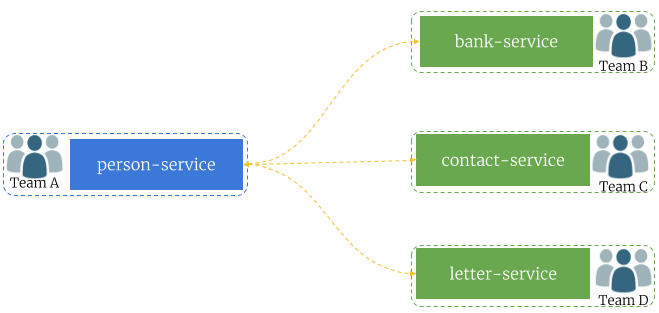

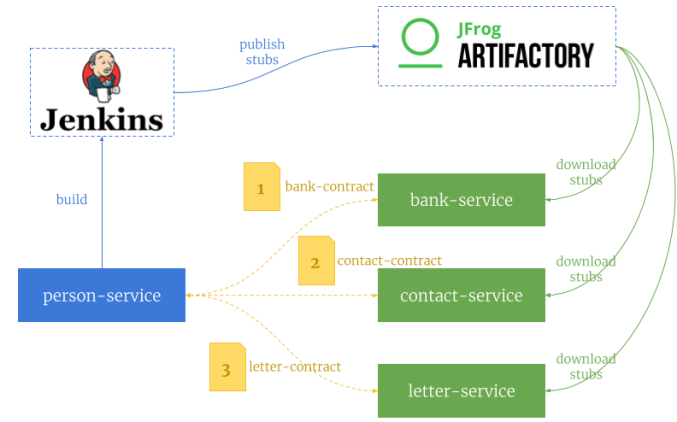

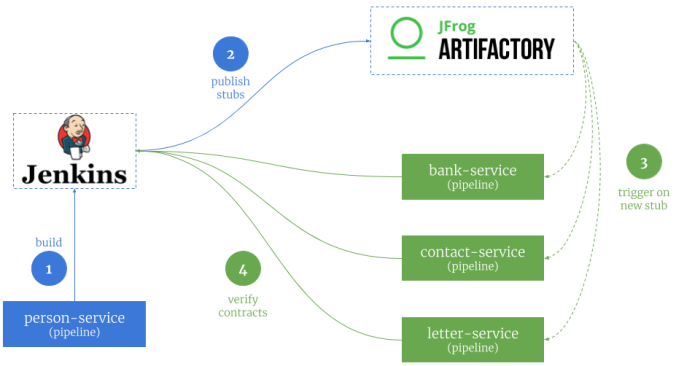

The system consists of two independent applications: contract-service and transaction-service. Most of the business logic is performed by contract-service. It provides methods for creating smart wallets, deploying smart contracts on Ethereum and calling the contracts' functions. The transaction-service application is responsible only for performing transactions between a third party and the owner of the contracts. It gets the owner's account by calling an endpoint exposed by contract-service. Meanwhile, contract-service observes the transactions performed on the Ethereum node. If a transaction is addressed to the contract owner's account, the application calls the function responsible for transferring funds to the contract receiver's account on all contracts signed by this owner. Here's the diagram that illustrates the process described above.

1. Building a smart contract with Solidity

The most popular language for creating smart contracts in Ethereum is Solidity. Solidity is a contract-oriented, high-level language for implementing smart contracts. It was influenced by C++, Python and JavaScript and is designed to target the Ethereum Virtual Machine (EVM). It is statically typed and supports inheritance, libraries and complex user-defined types, among other features. For more information about the language, refer to the Solidity documentation available at http://solidity.readthedocs.io/.

Our main goal in this article is just to build a simple contract, compile it and generate the required source code. That's why I don't want to go into the details of implementing contracts in Solidity. Here's the implementation of a contract responsible for calculating a fee on an incoming transaction. Based on this calculation, it withdraws funds from the sender's account and deposits them on the receiver's account. The contract is signed between two users, each of whom has their own smart wallet secured by their credentials. Understanding this simple contract is very important, so let's analyze it line by line.

Each contract is described by the percentage of a transaction that goes to the receiver's account (1) and by the receiver's account address (2). The first two lines of the contract declare variables storing these parameters: fee of Solidity type uint, and receiver of type address. Both values are initialized inside the contract's constructor (5). Parameter fee indicates the percentage of the transaction amount that is withdrawn from the sender's account and deposited on the receiver's account. The line mapping (address => uint) public balances maps account addresses to unsigned integer balances (3). We have also defined the event Sent, which is emitted after every transaction within the contract (4). Function getReceiverBalance returns the receiver's account balance (6). Finally, there is the function sendTrx(...) that can be called by an external client (7). It is responsible for performing the withdrawal and deposit operations based on the contract's percentage fee and the transaction amount, so it requires a little more attention. First, it needs the payable modifier to be able to accept ether sent along with the call. The transaction amount, in Wei, can then be read from the msg.value parameter, and the fee is calculated as msg.value * fee / 100. Then we call the function send on the receiver address variable with the calculated amount, and record this value in the balances mapping under the receiver's address. Finally, we emit the Sent event, which can be received by the client application.

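```solidity
pragma solidity ^0.4.22; // the constructor keyword requires Solidity 0.4.22 or later

contract TransactionFee {

    // (1) percentage of each transaction forwarded to the receiver
    uint public fee;

    // (2) address of the account receiving the fee
    address public receiver;

    // (3) amounts recorded per address
    mapping (address => uint) public balances;

    // (4) emitted after every transfer attempt
    event Sent(address from, address to, uint amount, bool sent);

    // (5)
    constructor(address _receiver, uint _fee) public {
        receiver = _receiver;
        fee = _fee;
    }

    // (6)
    function getReceiverBalance() public view returns(uint) {
        return receiver.balance;
    }

    // (7) payable, so it can accept ether sent with the call (msg.value)
    function sendTrx() public payable {
        uint value = msg.value * fee / 100;
        bool sent = receiver.send(value);
        balances[receiver] += (value);
        emit Sent(msg.sender, receiver, value, sent);
    }
}
```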
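To make the arithmetic in sendTrx() concrete, here is a small Java sketch that mirrors msg.value * fee / 100 using BigInteger. It assumes Solidity 0.4.x semantics, where uint is a 256-bit unsigned integer, multiplication silently wraps around modulo 2^256, and division truncates. The class and method names (FeeMath, receiverShare) are hypothetical and are not part of the sample repository.

```java
import java.math.BigInteger;

/** Hypothetical helper mirroring the fee calculation in TransactionFee.sendTrx(). */
final class FeeMath {

    // uint in Solidity is uint256; arithmetic in 0.4.x wraps modulo 2^256
    private static final BigInteger TWO_POW_256 = BigInteger.ONE.shiftLeft(256);
    private static final BigInteger HUNDRED = BigInteger.valueOf(100);

    private FeeMath() {
    }

    /** Computes msg.value * fee / 100 the way the EVM would for uint256 operands. */
    static BigInteger receiverShare(BigInteger msgValueWei, BigInteger feePercent) {
        if (msgValueWei.signum() < 0 || feePercent.signum() < 0
                || msgValueWei.bitLength() > 256 || feePercent.bitLength() > 256) {
            throw new IllegalArgumentException("not a valid uint256 value");
        }
        // Overflow is not checked in Solidity 0.4.x: the product is reduced modulo 2^256
        BigInteger product = msgValueWei.multiply(feePercent).mod(TWO_POW_256);
        // Integer division discards the remainder, so fractions of a Wei are lost
        return product.divide(HUNDRED);
    }

    public static void main(String[] args) {
        BigInteger amount = BigInteger.valueOf(1_000_000);
        System.out.println(receiverShare(amount, BigInteger.valueOf(10))); // 100000
        System.out.println(receiverShare(amount, BigInteger.valueOf(5)));  // 50000
        System.out.println(receiverShare(BigInteger.valueOf(999), BigInteger.valueOf(10))); // 99
    }
}
```

Because of the truncation, the receiver gets nothing whenever msg.value * fee is below 100 Wei, for example msg.value = 9 with fee = 10.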

Once we have created a contract, we have to compile it and generate source code that can be used inside our application to deploy the contract and call its functions. For a quick check, you can use the online Solidity compiler (Remix) available at https://remix.ethereum.org.

2. Compiling contract and generating source code

The Solidity project provides up-to-date Docker builds of its compiler. Released versions are tagged stable, while unstable changes from the development branch are tagged nightly. However, the Docker image contains only the compiler executable, so we have to mount a volume containing the input file with the Solidity contract. Assuming that the file is available under directory /home/docker on our Docker machine, we can compile it using the following command. It creates two files: a binary .bin file, which is the contract bytecode that the EVM can execute, and an application binary interface .abi file, which describes the contract's public functions and events. Note that the contract's pragma restricts it to the 0.4.x compiler line, so if the stable tag points to a newer release, you may need to use an image tagged with a matching 0.4.x version instead.

```shell
$ docker run --rm -v /home/docker:/build ethereum/solc:stable /build/TransactionFee.sol --bin --abi --optimize -o /build
```

The compilation output files are written to /build inside the container and persisted in the /home/docker directory on the host. The container is removed after the compilation (--rm), since it is no longer needed. We can then generate source code from the compiled contract using the command-line tool shipped with the web3j library, available under directory ${WEB3J_HOME}/bin. When generating source code with web3j, we pass the locations of the .bin and .abi files, and then set the target package name and output directory.

```shell
$ web3j solidity generate /build/transactionfee.bin /build/transactionfee.abi -p pl.piomin.services.contract.model -o src/main/java/
```

The web3j tool generates a Java wrapper class for the contract (here Transactionfee) inside the given package. Here are the most important fragments of the generated source file.

```java
public class Transactionfee extends Contract {

    // Compiled contract bytecode (truncated here)
    private static final String BINARY = "608060405234801561...";

    public static final String FUNC_GETRECEIVERBALANCE = "getReceiverBalance";
    public static final String FUNC_BALANCES = "balances";
    public static final String FUNC_SENDTRX = "sendTrx";
    public static final String FUNC_FEE = "fee";
    public static final String FUNC_RECEIVER = "receiver";

    // ...

    protected Transactionfee(String contractAddress, Web3j web3j, TransactionManager transactionManager, BigInteger gasPrice, BigInteger gasLimit) {
        super(BINARY, contractAddress, web3j, transactionManager, gasPrice, gasLimit);
    }

    // View function: executed as a call, the result is returned without sending a transaction
    public RemoteCall<BigInteger> getReceiverBalance() {
        final Function function = new Function(FUNC_GETRECEIVERBALANCE,
                Arrays.<Type>asList(),
                Arrays.<TypeReference<?>>asList(new TypeReference<Uint256>() {}));
        return executeRemoteCallSingleValueReturn(function, BigInteger.class);
    }

    // Getter generated for the public balances mapping
    public RemoteCall<BigInteger> balances(String param0) {
        final Function function = new Function(FUNC_BALANCES,
                Arrays.<Type>asList(new org.web3j.abi.datatypes.Address(param0)),
                Arrays.<TypeReference<?>>asList(new TypeReference<Uint256>() {}));
        return executeRemoteCallSingleValueReturn(function, BigInteger.class);
    }

    // Payable function: sent as a transaction carrying weiValue as msg.value
    public RemoteCall<TransactionReceipt> sendTrx(BigInteger weiValue) {
        final Function function = new Function(
                FUNC_SENDTRX,
                Arrays.<Type>asList(),
                Collections.<TypeReference<?>>emptyList());
        return executeRemoteCallTransaction(function, weiValue);
    }

    public RemoteCall<BigInteger> fee() {
        final Function function = new Function(FUNC_FEE,
                Arrays.<Type>asList(),
                Arrays.<TypeReference<?>>asList(new TypeReference<Uint256>() {}));
        return executeRemoteCallSingleValueReturn(function, BigInteger.class);
    }

    public RemoteCall<String> receiver() {
        final Function function = new Function(FUNC_RECEIVER,
                Arrays.<Type>asList(),
                Arrays.<TypeReference<?>>asList(new TypeReference<Address>() {}));
        return executeRemoteCallSingleValueReturn(function, String.class);
    }

    // Deploys a new contract instance; the constructor arguments (_receiver, _fee) are ABI-encoded
    public static RemoteCall<Transactionfee> deploy(Web3j web3j, Credentials credentials, BigInteger gasPrice, BigInteger gasLimit, String _receiver, BigInteger _fee) {
        String encodedConstructor = FunctionEncoder.encodeConstructor(Arrays.<Type>asList(new org.web3j.abi.datatypes.Address(_receiver),
                new org.web3j.abi.datatypes.generated.Uint256(_fee)));
        return deployRemoteCall(Transactionfee.class, web3j, credentials, gasPrice, gasLimit, BINARY, encodedConstructor);
    }

    public static RemoteCall<Transactionfee> deploy(Web3j web3j, TransactionManager transactionManager, BigInteger gasPrice, BigInteger gasLimit, String _receiver, BigInteger _fee) {
        String encodedConstructor = FunctionEncoder.encodeConstructor(Arrays.<Type>asList(new org.web3j.abi.datatypes.Address(_receiver),
                new org.web3j.abi.datatypes.generated.Uint256(_fee)));
        return deployRemoteCall(Transactionfee.class, web3j, transactionManager, gasPrice, gasLimit, BINARY, encodedConstructor);
    }

    // ...

    // Streams Sent events emitted by this contract between the given blocks
    public Observable<SentEventResponse> sentEventObservable(DefaultBlockParameter startBlock, DefaultBlockParameter endBlock) {
        EthFilter filter = new EthFilter(startBlock, endBlock, getContractAddress());
        filter.addSingleTopic(EventEncoder.encode(SENT_EVENT));
        return sentEventObservable(filter);
    }

    // Creates a wrapper for an already deployed contract
    public static Transactionfee load(String contractAddress, Web3j web3j, Credentials credentials, BigInteger gasPrice, BigInteger gasLimit) {
        return new Transactionfee(contractAddress, web3j, credentials, gasPrice, gasLimit);
    }

    public static Transactionfee load(String contractAddress, Web3j web3j, TransactionManager transactionManager, BigInteger gasPrice, BigInteger gasLimit) {
        return new Transactionfee(contractAddress, web3j, transactionManager, gasPrice, gasLimit);
    }

    public static class SentEventResponse {
        public Log log;
        public String from;
        public String to;
        public BigInteger amount;
        public Boolean sent;
    }
}
```
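The deploy(...) methods above append the ABI-encoded constructor arguments to the contract bytecode via FunctionEncoder.encodeConstructor. As a rough illustration of what that encoding looks like for this contract, the following sketch hand-encodes (address _receiver, uint _fee) according to the ABI rules for static types: each argument occupies one 32-byte word (64 hex characters), left-padded with zeros. ConstructorArgsEncoder is a simplified, hypothetical helper, not web3j code, and it only handles these two types.

```java
import java.math.BigInteger;

/** Hypothetical, simplified ABI encoder for the (address, uint256) constructor of TransactionFee. */
final class ConstructorArgsEncoder {

    private ConstructorArgsEncoder() {
    }

    /** Encodes a uint256 as one 32-byte big-endian word, i.e. 64 lowercase hex characters. */
    static String encodeUint256(BigInteger value) {
        if (value.signum() < 0 || value.bitLength() > 256) {
            throw new IllegalArgumentException("not a uint256: " + value);
        }
        String hex = value.toString(16);
        return "0".repeat(64 - hex.length()) + hex;
    }

    /** An address is a 160-bit value, so it is encoded like a uint: left-padded to 32 bytes. */
    static String encodeAddress(String address) {
        String hex = address.startsWith("0x") || address.startsWith("0X") ? address.substring(2) : address;
        if (!hex.matches("[0-9a-fA-F]{40}")) {
            throw new IllegalArgumentException("not a 20-byte address: " + address);
        }
        return encodeUint256(new BigInteger(hex, 16));
    }

    /** Static arguments are simply concatenated in declaration order: _receiver, then _fee. */
    static String encodeConstructorArgs(String receiver, BigInteger fee) {
        return encodeAddress(receiver) + encodeUint256(fee);
    }

    public static void main(String[] args) {
        String encoded = encodeConstructorArgs("0x864ef9931c2690efcc6a773760237c4b09f40e65", BigInteger.TEN);
        System.out.println(encoded.length()); // 128
        System.out.println(encoded);
    }
}
```

For the receiver 0x864ef9931c2690efcc6a773760237c4b09f40e65 and a fee of 10, the result is 24 zeros followed by the 40 address digits, then 63 zeros and a final `a`.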

3. Deploying contract

Once we have generated the Java class representing the contract, we can proceed to application development. We will begin with contract-service. First, we create a smart wallet whose credentials hold sufficient funds for signing contracts as the owner. The following fragment of code is responsible for that and is invoked just after the application starts. You can also see here the implementation of the HTTP GET method responsible for returning the owner's account address.

```java
@PostConstruct
public void init() throws IOException, CipherException, NoSuchAlgorithmException, NoSuchProviderException, InvalidAlgorithmParameterException {
    // Create a password-protected wallet file and load the owner's credentials from it
    String file = WalletUtils.generateLightNewWalletFile("piot123", null);
    credentials = WalletUtils.loadCredentials("piot123", file);
    LOGGER.info("Credentials created: file={}, address={}", file, credentials.getAddress());
    // Fund the new owner account from the node's coinbase account
    EthCoinbase coinbase = web3j.ethCoinbase().send();
    EthGetTransactionCount transactionCount = web3j.ethGetTransactionCount(coinbase.getAddress(), DefaultBlockParameterName.LATEST).send();
    // Gas price 20 Gwei, gas limit 21,000 (a plain ether transfer), value 0.025 ether
    Transaction transaction = Transaction.createEtherTransaction(coinbase.getAddress(), transactionCount.getTransactionCount(), BigInteger.valueOf(20_000_000_000L), BigInteger.valueOf(21_000), credentials.getAddress(), BigInteger.valueOf(25_000_000_000_000_000L));
    // The node signs this transaction, so the coinbase account must be unlocked (as in Geth's dev mode)
    web3j.ethSendTransaction(transaction).send();
    EthGetBalance balance = web3j.ethGetBalance(credentials.getAddress(), DefaultBlockParameterName.LATEST).send();
    LOGGER.info("Balance: {}", balance.getBalance().longValue());
}

@GetMapping("/owner")
public String getOwnerAccount() {
    return credentials.getAddress();
}
```
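The amounts in init() are expressed in Wei. The following sketch converts them into more readable units: a gas price of 20,000,000,000 Wei is 20 Gwei, and with the 21,000 gas that a plain ether transfer costs, the fee for funding the owner is at most 420,000,000,000,000 Wei (0.00042 ether); the 25,000,000,000,000,000 Wei sent to the owner is 0.025 ether. WeiMath and its methods are hypothetical names used only for illustration.

```java
import java.math.BigDecimal;
import java.math.BigInteger;

/** Hypothetical helper for reading the Wei amounts used when funding the owner account. */
final class WeiMath {

    private WeiMath() {
    }

    /** Upper bound of the transaction fee: gasPrice * gasLimit, in Wei. */
    static BigInteger maxFeeWei(BigInteger gasPriceWei, BigInteger gasLimit) {
        return gasPriceWei.multiply(gasLimit);
    }

    /** 1 ether = 10^18 Wei; moving the decimal point keeps the conversion exact. */
    static BigDecimal toEther(BigInteger wei) {
        return new BigDecimal(wei).movePointLeft(18);
    }

    /** 1 Gwei = 10^9 Wei. */
    static BigDecimal toGwei(BigInteger wei) {
        return new BigDecimal(wei).movePointLeft(9);
    }

    public static void main(String[] args) {
        BigInteger gasPrice = BigInteger.valueOf(20_000_000_000L);
        BigInteger gasLimit = BigInteger.valueOf(21_000);
        BigInteger value = BigInteger.valueOf(25_000_000_000_000_000L);
        System.out.println(toGwei(gasPrice).stripTrailingZeros().toPlainString());                       // 20
        System.out.println(toEther(maxFeeWei(gasPrice, gasLimit)).stripTrailingZeros().toPlainString()); // 0.00042
        System.out.println(toEther(value).stripTrailingZeros().toPlainString());                         // 0.025
    }
}
```

One thing worth keeping in mind: BigInteger.longValue(), used when logging balances, keeps only the low-order 64 bits, so a balance above Long.MAX_VALUE Wei (about 9.22 ether) would be logged incorrectly. Logging the BigInteger itself, or a converted value as above, avoids that.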

The contract-service application exposes endpoints that can be called by an external client or by the second application in our sample system, transaction-service. The following implementation of the POST /contract method performs two actions. First, it creates a new smart wallet with credentials for the contract receiver. Then it deploys a new smart contract signed with the owner's credentials, passing the address of the newly created wallet as the receiver. To sign a new contract, you have to call the deploy method of the class generated from the Solidity definition, Transactionfee. It is responsible for deploying a new instance of the contract on the Ethereum node.

```java
// Addresses of all contracts deployed by the owner
private List<String> contracts = new ArrayList<>();

@PostMapping
public Contract createContract(@RequestBody Contract newContract) throws Exception {
    // New wallet for the contract receiver
    String file = WalletUtils.generateLightNewWalletFile("piot123", null);
    Credentials receiverCredentials = WalletUtils.loadCredentials("piot123", file);
    LOGGER.info("Credentials created: file={}, address={}", file, receiverCredentials.getAddress());
    // Deploy the contract signed by the owner, with the new wallet as receiver and the requested fee
    Transactionfee contract = Transactionfee.deploy(web3j, credentials, GAS_PRICE, GAS_LIMIT, receiverCredentials.getAddress(), BigInteger.valueOf(newContract.getFee())).send();
    newContract.setReceiver(receiverCredentials.getAddress());
    newContract.setAddress(contract.getContractAddress());
    contracts.add(contract.getContractAddress());
    LOGGER.info("New contract deployed: address={}", contract.getContractAddress());
    Optional<TransactionReceipt> tr = contract.getTransactionReceipt();
    if (tr.isPresent()) {
        LOGGER.info("Transaction receipt: from={}, to={}, gas={}", tr.get().getFrom(), tr.get().getTo(), tr.get().getGasUsed().intValue());
    }
    return newContract;
}
```
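One design detail worth noting: the contracts list is written by HTTP request threads in createContract(...) and iterated in processContracts(...), which is triggered from the web3j transaction subscription. A plain ArrayList is not safe for that kind of concurrent use. The following sketch shows one possible alternative, assuming a copy-on-write list is acceptable because contracts are deployed far less often than they are iterated. ContractRegistry is a hypothetical class, not part of the sample application.

```java
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

/** Hypothetical thread-safe store for deployed contract addresses. */
final class ContractRegistry {

    // Every write copies the backing array, while iteration works on an immutable snapshot.
    // Writes are rare (one per deployed contract) and reads are frequent, which suits this structure.
    private final CopyOnWriteArrayList<String> addresses = new CopyOnWriteArrayList<>();

    /** Adds the address atomically unless it is already present; returns true if it was added. */
    boolean register(String contractAddress) {
        return addresses.addIfAbsent(contractAddress);
    }

    /** Iterates over a snapshot, so concurrent registrations never cause ConcurrentModificationException. */
    void forEachContract(Consumer<String> action) {
        addresses.forEach(action);
    }

    int size() {
        return addresses.size();
    }

    public static void main(String[] args) {
        ContractRegistry registry = new ContractRegistry();
        registry.register("0xa6205a746ae0858fa22d6451b794cc977faa507c");
        registry.register("0x9c64d6b0fc01ee055e114a528fb5ad853843cde3");
        // Registering while iterating is safe: the loop only sees the two original entries
        registry.forEachContract(address -> registry.register(address + "-copy"));
        System.out.println(registry.size()); // 4
    }
}
```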

Every contract deployed on Ethereum has its own unique address, and the application stores the address of every contract it creates. Using those addresses, it can later load all the existing contracts. The following method executes the sendTrx function on each of the stored contracts.

```java
public void processContracts(long transactionAmount) {
    contracts.forEach(it -> {
        // Load a wrapper for an already deployed contract; the owner's credentials sign the calls
        Transactionfee contract = Transactionfee.load(it, web3j, credentials, GAS_PRICE, GAS_LIMIT);
        try {
            // Send the transaction amount (in Wei) as msg.value to the payable sendTrx() function
            TransactionReceipt tr = contract.sendTrx(BigInteger.valueOf(transactionAmount)).send();
            LOGGER.info("Transaction receipt: from={}, to={}, gas={}", tr.getFrom(), tr.getTo(), tr.getGasUsed().intValue());
            LOGGER.info("Get receiver: {}", contract.getReceiverBalance().send().longValue());
            // Print all logs (including Sent events) emitted by this contract
            EthFilter filter = new EthFilter(DefaultBlockParameterName.EARLIEST, DefaultBlockParameterName.LATEST, contract.getContractAddress());
            web3j.ethLogObservable(filter).subscribe(log -> {
                LOGGER.info("Log: {}", log.getData());
            });
        } catch (Exception e) {
            // A failure on one contract does not stop processing of the others
            LOGGER.error("Error during contract execution", e);
        }
    });
}
```
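Note that processContracts(...) opens a new log subscription, starting from the EARLIEST block, every time it runs. After a few processed transactions, each contract therefore has several subscribers, and each new one replays the whole log history. A minimal way to avoid this, sketched below under the assumption that one subscription per contract address is enough, is to remember which addresses have already been subscribed. LogSubscriptionGuard is a hypothetical helper; in the application, the Runnable would wrap the existing web3j.ethLogObservable(filter).subscribe(...) call.

```java
import java.util.Set;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical guard ensuring that each contract's logs are subscribed to only once. */
final class LogSubscriptionGuard {

    // Concurrent set: add() returns true only for the first caller with a given address
    private final Set<String> subscribed = ConcurrentHashMap.newKeySet();

    /** Runs subscribeAction the first time an address is seen; returns whether it ran. */
    boolean subscribeOnce(String contractAddress, Runnable subscribeAction) {
        if (subscribed.add(contractAddress)) {
            subscribeAction.run();
            return true;
        }
        return false;
    }

    public static void main(String[] args) {
        LogSubscriptionGuard guard = new LogSubscriptionGuard();
        int[] subscriptions = {0};
        // Simulates processContracts() running three times for the same contract
        for (int i = 0; i < 3; i++) {
            guard.subscribeOnce("0xa6205a746ae0858fa22d6451b794cc977faa507c", () -> subscriptions[0]++);
        }
        System.out.println(subscriptions[0]); // 1
    }
}
```

If the subscribe action throws, the address stays marked as subscribed; a more robust version might remove it from the set in that case.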

The contract-service application listens for transactions arriving at the Ethereum node, including those sent by transaction-service. If the target account of a transaction is the contract owner's account, the transaction is processed.

```java
@Autowired
Web3j web3j;

@Autowired
ContractService service;

@PostConstruct
public void listen() {
    // Emits every new transaction as it is included in a block on the node
    web3j.transactionObservable().subscribe(tx -> {
        // 'to' is null for contract-creation transactions, hence the null check
        if (tx.getTo() != null && tx.getTo().equals(service.getOwnerAccount())) {
            LOGGER.info("New tx: id={}, block={}, from={}, to={}, value={}", tx.getHash(), tx.getBlockHash(), tx.getFrom(), tx.getTo(), tx.getValue().intValue());
            // Forward the received amount to all contracts signed by the owner
            service.processContracts(tx.getValue().longValue());
        } else {
            LOGGER.info("Not matched: id={}, to={}", tx.getHash(), tx.getTo());
        }
    });
}
```
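The listener compares addresses with String.equals. That works as long as both sides use the same letter case, which is typically true here, since web3j credentials and the node's JSON-RPC responses use lowercase hex. Ethereum addresses may, however, also appear in the mixed-case EIP-55 checksum format, which represents the same account. The following sketch, assuming you want the comparison to be case-insensitive, normalizes both sides before comparing. OwnerTransactionFilter is a hypothetical name, and the address in main() is an arbitrary example.

```java
import java.util.Locale;
import java.util.Objects;

/** Hypothetical predicate deciding whether a transaction is addressed to the contract owner. */
final class OwnerTransactionFilter {

    private final String ownerAddress;

    OwnerTransactionFilter(String ownerAddress) {
        this.ownerAddress = normalize(Objects.requireNonNull(ownerAddress, "ownerAddress"));
    }

    /** Lowercases the hex digits and ensures a single 0x prefix, so checksummed and plain forms compare equal. */
    static String normalize(String address) {
        String hex = address.startsWith("0x") || address.startsWith("0X") ? address.substring(2) : address;
        return "0x" + hex.toLowerCase(Locale.ROOT);
    }

    /** Contract-creation transactions have no recipient, so a null 'to' never matches. */
    boolean isForOwner(String to) {
        return to != null && normalize(to).equals(ownerAddress);
    }

    public static void main(String[] args) {
        OwnerTransactionFilter filter = new OwnerTransactionFilter("0xabcdef0123456789abcdef0123456789abcdef01");
        System.out.println(filter.isForOwner("0xAbCdEf0123456789aBcDeF0123456789AbCdEf01")); // true
        System.out.println(filter.isForOwner(null)); // false
    }
}
```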

Here's the source code from transaction-service responsible for transferring funds from a third-party account to the contract owner's account.

```java
@Value("${contract-service.url}")
String url;

@Autowired
Web3j web3j;

@Autowired
RestTemplate template;

Credentials credentials;

@PostMapping
public String performTransaction(@RequestBody TransactionRequest request) throws Exception {
    EthAccounts accounts = web3j.ethAccounts().send();
    // Ask contract-service for the owner's account address
    String owner = template.getForObject(url, String.class);
    // The sender is one of the node's accounts, selected by index (eth.accounts[fromId])
    String from = accounts.getAccounts().get(request.getFromId());
    EthGetTransactionCount transactionCount = web3j.ethGetTransactionCount(from, DefaultBlockParameterName.LATEST).send();
    Transaction transaction = Transaction.createEtherTransaction(from, transactionCount.getTransactionCount(), GAS_PRICE, GAS_LIMIT, owner, BigInteger.valueOf(request.getAmount()));
    // The node signs the transaction, so the sender account must be unlocked on the node
    EthSendTransaction response = web3j.ethSendTransaction(transaction).send();
    if (response.getError() != null) {
        LOGGER.error("Transaction error: {}", response.getError().getMessage());
        return "ERR";
    }
    LOGGER.info("Transaction: {}", response.getResult());
    // A receipt exists only after the transaction is mined, so it may still be empty here
    EthGetTransactionReceipt receipt = web3j.ethGetTransactionReceipt(response.getTransactionHash()).send();
    if (receipt.getTransactionReceipt().isPresent()) {
        TransactionReceipt r = receipt.getTransactionReceipt().get();
        LOGGER.info("Tx receipt: from={}, to={}, gas={}, cumulativeGas={}", r.getFrom(), r.getTo(), r.getGasUsed().intValue(), r.getCumulativeGasUsed().intValue());
    }
    EthGetBalance balance = web3j.ethGetBalance(from, DefaultBlockParameterName.LATEST).send();
    LOGGER.info("Balance: address={}, amount={}", from, balance.getBalance().longValue());
    balance = web3j.ethGetBalance(owner, DefaultBlockParameterName.LATEST).send();
    LOGGER.info("Balance: address={}, amount={}", owner, balance.getBalance().longValue());
    return response.getTransactionHash();
}
```
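In performTransaction(...), the receipt is requested immediately after the transaction is sent. A receipt exists only once the transaction has been mined, so on a node that does not mine instantly, getTransactionReceipt() may still return an empty Optional at that point. The sketch below shows a generic polling loop, assuming a fixed number of attempts and a fixed interval are acceptable. ReceiptPoller is hypothetical; in transaction-service the supplier would wrap the ethGetTransactionReceipt(...).send() call and convert its checked IOException, since a Supplier cannot throw checked exceptions. web3j also ships its own receipt processors (for example PollingTransactionReceiptProcessor), which may be preferable in real code.

```java
import java.time.Duration;
import java.util.Optional;
import java.util.function.Supplier;

/** Hypothetical helper that polls until a value (e.g. a transaction receipt) becomes available. */
final class ReceiptPoller {

    private ReceiptPoller() {
    }

    /**
     * Calls fetch up to maxAttempts times, sleeping for interval between attempts.
     * Returns the first non-empty result, or Optional.empty() if none arrives or the thread is interrupted.
     */
    static <T> Optional<T> poll(Supplier<Optional<T>> fetch, int maxAttempts, Duration interval) {
        for (int attempt = 1; attempt <= maxAttempts; attempt++) {
            Optional<T> result = fetch.get();
            if (result.isPresent()) {
                return result;
            }
            if (attempt < maxAttempts) {
                try {
                    Thread.sleep(interval.toMillis());
                } catch (InterruptedException e) {
                    // Restore the interrupt flag and give up instead of swallowing the interruption
                    Thread.currentThread().interrupt();
                    return Optional.empty();
                }
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        int[] calls = {0};
        // Simulated node: the "receipt" appears on the third request
        Optional<String> receipt = poll(
                () -> ++calls[0] < 3 ? Optional.<String>empty() : Optional.of("receipt"),
                10, Duration.ofMillis(100));
        System.out.println(receipt.orElse("none") + " after " + calls[0] + " calls"); // receipt after 3 calls
    }
}
```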

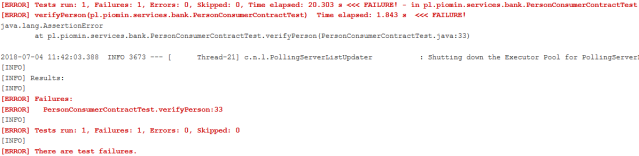

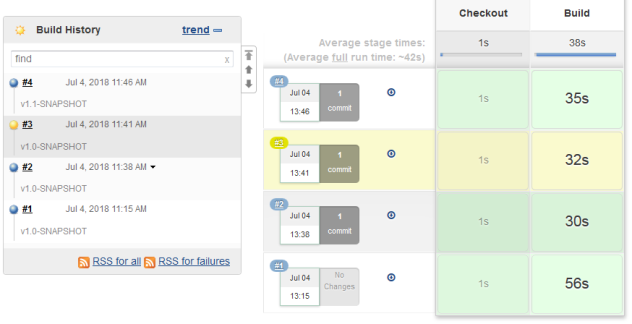

4. Test scenario

To run the test scenario, we need to launch:

- Ethereum node in development mode on a Docker container
- Ethereum Geth console client on a Docker container
- An instance of the contract-service application, available by default on port 8090
- An instance of the transaction-service application, available by default on port 8091

Instructions on how to run the Ethereum node and the Geth client using Docker containers are available in my previous article about blockchain, Introduction to Blockchain with Java using Ethereum, web3j and Spring Boot.

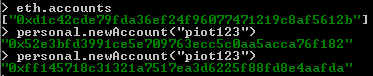

Before starting the sample applications, we should create at least one test account on the Ethereum node. To do that, we execute the personal.newAccount Geth command as shown below.

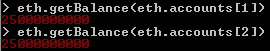

After startup, the transaction-service application transfers some funds from the coinbase account to all other existing accounts.

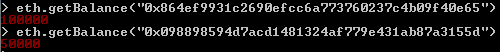

The next step is to create some contracts using the owner account created automatically by contract-service on startup. You should call the POST /contract method with the fee parameter, which specifies the percentage of the transaction amount transferred from the contract owner's account to the contract receiver's account. Using the following commands, I deployed two contracts with fees of 10% and 5%. It means that 10% and 5% of each transaction sent to the owner's account by a third-party user is transferred to the accounts generated by the POST method. The address of the account created by the POST method is returned in the receiver field of the response.

```shell
$ curl -X POST -H "Content-Type: application/json" -d '{"fee":10}' http://localhost:8090/contract
{"fee": 10,"receiver": "0x864ef9931c2690efcc6a773760237c4b09f40e65","address": "0xa6205a746ae0858fa22d6451b794cc977faa507c"}

$ curl -X POST -H "Content-Type: application/json" -d '{"fee":5}' http://localhost:8090/contract
{"fee": 5,"receiver": "0x098898594d7acd1481324af779e431ab87a3155d","address": "0x9c64d6b0fc01ee055e114a528fb5ad853843cde3"}
```

If the contracts have been successfully deployed, the last thing to do is to send a transaction by calling the POST /transaction endpoint exposed by transaction-service. The owner account is automatically retrieved from contract-service. You have to set the transaction amount and the source account index (that is, eth.accounts[index]).

```shell
$ curl -X POST -H "Content-Type: application/json" -d '{"amount":1000000,"fromId":1}' http://localhost:8091/transaction
```

OK, that's it. Now the transaction is received by contract-service, which executes the sendTrx(...) function on all defined contracts. As a result, 10% and 5% of the transaction amount go to the contract receivers.
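To see where the funds end up in this scenario, it helps to follow the code: processContracts(...) passes the full transaction amount as msg.value to sendTrx() on every contract, and each contract forwards only its percentage to the receiver. The sketch below computes that split, assuming every send() succeeds and ignoring gas costs. Since TransactionFee has no withdrawal function, the remainder stays in the contract's balance. FundsFlow is a hypothetical helper, and it uses long arithmetic, which is sufficient for the small amounts used here.

```java
import java.util.List;

/** Hypothetical model of the funds moved by processContracts() for one incoming transaction. */
final class FundsFlow {

    private FundsFlow() {
    }

    /** Wei forwarded to the receiver by sendTrx(): amount * fee / 100, truncated like uint division. */
    static long toReceiver(long amountWei, long feePercent) {
        return amountWei * feePercent / 100;
    }

    /** Wei left in the contract after forwarding the receiver's share (assuming send() succeeded). */
    static long keptByContract(long amountWei, long feePercent) {
        return amountWei - toReceiver(amountWei, feePercent);
    }

    /** The owner sends the full amount to every contract, so the outflow (without gas) grows with their count. */
    static long ownerOutflow(long amountWei, List<Long> fees) {
        return amountWei * fees.size();
    }

    public static void main(String[] args) {
        long amount = 1_000_000L;           // amount from the POST /transaction example
        List<Long> fees = List.of(10L, 5L); // the two deployed contracts
        for (long fee : fees) {
            System.out.printf("fee %d%%: receiver +%d Wei, contract keeps %d Wei%n",
                    fee, toReceiver(amount, fee), keptByContract(amount, fee));
        }
        System.out.println("owner sends " + ownerOutflow(amount, fees) + " Wei plus gas");
    }
}
```

With the amount from the request above (1,000,000 Wei), the receivers get 100,000 and 50,000 Wei, the two contracts keep 900,000 and 950,000 Wei, and the owner's account pays 2,000,000 Wei plus gas.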

Sample applications source code is available in repository sample-spring-blockchain-contract (https://github.com/piomin/sample-spring-blockchain-contract.git). Enjoy! 🙂