Spring Cloud and Kubernetes are popular products applicable to many different use cases. However, when it comes to microservices architecture they are sometimes described as competing solutions. Both implement popular microservices patterns such as service discovery, distributed configuration, load balancing and circuit breaking. Of course, they do it differently. Continue reading “Microservices With Spring Cloud Kubernetes”

Tag: Ribbon

The Future of Spring Cloud Microservices After Netflix Era

If somebody asked you about Spring Cloud, the first thing that came to mind would probably be Netflix OSS support. Support for tools such as Eureka, Zuul or Ribbon is provided not only by Spring, but also by other popular frameworks used for building microservices architecture, such as Apache Camel, Vert.x or Micronaut. Currently, Spring Cloud Netflix is the most popular project within Spring Cloud. It has around 3.2k stars on GitHub, while the second most popular has around 1.4k. Therefore, it is quite surprising that Pivotal has announced that most Spring Cloud Netflix modules are entering maintenance mode. You can read more about it in the post published on the Spring blog by Spencer Gibb: https://spring.io/blog/2018/12/12/spring-cloud-greenwich-rc1-available-now.

Quick Guide to Microservices with Kubernetes, Spring Boot 2.0 and Docker

Here’s the next article in the “Quick Guide to…” series. This time we will discuss and run examples of Spring Boot microservices on Kubernetes. The structure of this article is quite similar to that of Quick Guide to Microservices with Spring Boot 2.0, Eureka and Spring Cloud, as both describe the same aspects of application development. I’m going to focus on showing you the differences and similarities between developing for Spring Cloud and for Kubernetes. The topics covered in this article are:

- Using Spring Boot 2.0 in cloud-native development

- Providing service discovery for all microservices using Spring Cloud Kubernetes project

- Injecting configuration settings into application pods using Kubernetes Config Maps and Secrets

- Building application images using Docker and deploying them on Kubernetes using YAML configuration files

- Using Spring Cloud Kubernetes together with Zuul proxy to expose a single Swagger API documentation for all microservices

Spring Cloud and Kubernetes may be treated as competing solutions when you build a microservices environment. Components such as Eureka, Spring Cloud Config or Zuul provided by Spring Cloud may be replaced by built-in Kubernetes objects like services, config maps, secrets or ingresses. But even if you decide to use Kubernetes components instead of Spring Cloud, you can still take advantage of some interesting features provided throughout the whole Spring Cloud project.

One really interesting project that helps us in development is Spring Cloud Kubernetes (https://github.com/spring-cloud-incubator/spring-cloud-kubernetes). Although it is still in the incubation stage, it is definitely worth dedicating some time to. It integrates Spring Cloud with Kubernetes. I’ll show you how to use its discovery client implementation, inter-service communication with the Ribbon client, and Zipkin discovery using Spring Cloud Kubernetes.

Before we proceed to the source code, let’s take a look at the following diagram. It illustrates the architecture of our sample system. It is quite similar to the architecture presented in the already mentioned article about microservices on Spring Cloud. There are three independent applications (employee-service, department-service, organization-service), which communicate with each other through a REST API. These Spring Boot microservices use some built-in mechanisms provided by Kubernetes: config maps and secrets for distributed configuration, etcd for service discovery, and ingresses for the API gateway.

Let’s proceed to the implementation. Currently, the newest stable version of Spring Cloud is Finchley.RELEASE. This version of spring-cloud-dependencies should be declared as a BOM for dependency management.

<dependencyManagement>
    <dependencies>
        <dependency>
            <groupId>org.springframework.cloud</groupId>
            <artifactId>spring-cloud-dependencies</artifactId>
            <version>Finchley.RELEASE</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

Spring Cloud Kubernetes is not released under Spring Cloud Release Trains. So, we need to explicitly define its version. Because we use Spring Boot 2.0 we have to include the newest SNAPSHOT version of spring-cloud-kubernetes artifacts, which is 0.3.0.BUILD-SNAPSHOT.

The source code of sample applications presented in this article is available on GitHub in repository https://github.com/piomin/sample-spring-microservices-kubernetes.git.

Prerequisites

In order to be able to deploy and test our sample microservices we need to prepare a development environment. We can do that in the following steps:

- You need at least a single-node cluster instance of Kubernetes (Minikube) or OpenShift (Minishift) running on your local machine. You should start it and expose the embedded Docker client provided by both of them. Detailed instructions for Minishift can be found here: Quick guide to deploying Java apps on OpenShift. You can also use that description to run Minikube: just replace the word ‘minishift’ with ‘minikube’. In fact, it does not matter whether you choose Kubernetes or OpenShift, since the rest of this tutorial applies to both of them.

- Spring Cloud Kubernetes requires access to the Kubernetes API in order to retrieve the list of addresses of the pods running for a single service. If you use Kubernetes, you should just execute the following command:

$ kubectl create clusterrolebinding admin --clusterrole=cluster-admin --serviceaccount=default:default

If you deploy your microservices on Minishift you should first enable admin-user addon, then login as a cluster admin, and grant required permissions.

$ minishift addons enable admin-user
$ oc login -u system:admin
$ oc policy add-role-to-user cluster-reader system:serviceaccount:myproject:default

- All our sample microservices use MongoDB as a backend store, so you should first run an instance of this database on your node. With Minishift it is quite simple, as you can use a predefined template just by selecting the Mongo service in the Catalog list. With Kubernetes the task is more difficult: you have to prepare the deployment configuration files yourself and apply them to the cluster. All the configuration files are available in the kubernetes directory inside the sample Git repository. To apply the following YAML definition to the cluster, execute the command kubectl apply -f kubernetes\mongo-deployment.yaml. After that, the Mongo database will be available under the name mongodb inside the Kubernetes cluster.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: mongodb
  labels:
    app: mongodb
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mongodb
  template:
    metadata:
      labels:
        app: mongodb
    spec:
      containers:
      - name: mongodb
        image: mongo:latest
        ports:
        - containerPort: 27017
        env:
        - name: MONGO_INITDB_DATABASE
          valueFrom:
            configMapKeyRef:
              name: mongodb
              key: database-name
        - name: MONGO_INITDB_ROOT_USERNAME
          valueFrom:
            secretKeyRef:
              name: mongodb
              key: database-user
        - name: MONGO_INITDB_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mongodb
              key: database-password
---
apiVersion: v1
kind: Service
metadata:
  name: mongodb
  labels:
    app: mongodb
spec:
  ports:
  - port: 27017
    protocol: TCP
  selector:
    app: mongodb

1. Inject configuration with Config Maps and Secrets

When using Spring Cloud, the most obvious choice for implementing distributed configuration in your system is Spring Cloud Config. With Kubernetes you can use a Config Map. It holds key-value pairs of configuration data that can be consumed in pods. It is used for storing and sharing non-sensitive, unencrypted configuration information. To use sensitive information in your clusters, you must use Secrets. The use of both these Kubernetes objects can be demonstrated well with the example of MongoDB connection settings. Inside a Spring Boot application we can easily inject them using environment variables. Here’s a fragment of the application.yml file with the URI configuration.

spring:
  data:
    mongodb:
      uri: mongodb://${MONGO_USERNAME}:${MONGO_PASSWORD}@mongodb/${MONGO_DATABASE}
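To make the substitution concrete, here is a minimal sketch of how the `${...}` placeholders in that URI could be filled from environment variables. It is a simplified stand-in, not Spring Boot's actual resolver; the class name `PlaceholderSketch`, the method `resolve` and the sample values are assumptions made for illustration only.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical helper: a simplified stand-in for Spring's placeholder resolution.
class PlaceholderSketch {

    // Matches ${NAME} where NAME consists of letters, digits or underscores.
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([A-Za-z0-9_]+)\\}");

    // Replaces every ${NAME} in the template with the value found in env.
    public static String resolve(String template, Map<String, String> env) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuffer result = new StringBuffer();
        while (matcher.find()) {
            String value = env.get(matcher.group(1));
            if (value == null) {
                throw new IllegalArgumentException("Missing variable: " + matcher.group(1));
            }
            // quoteReplacement keeps '$' and '\' in values from being read as group references.
            matcher.appendReplacement(result, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(result);
        return result.toString();
    }

    public static void main(String[] args) {
        // Inside a pod, System.getenv() would supply these; the values here are made up.
        Map<String, String> env = new HashMap<>();
        env.put("MONGO_USERNAME", "user");
        env.put("MONGO_PASSWORD", "secret");
        env.put("MONGO_DATABASE", "microservices");
        System.out.println(resolve(
                "mongodb://${MONGO_USERNAME}:${MONGO_PASSWORD}@mongodb/${MONGO_DATABASE}", env));
    }
}
```

The sketch fails fast on a missing variable, which is a design choice of this example rather than a statement about how Spring behaves.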

While the username and password are sensitive fields, the database name is not, so we can place it inside a config map.

apiVersion: v1
kind: ConfigMap
metadata:
  name: mongodb
data:
  database-name: microservices

Of course, username and password are defined as secrets.

apiVersion: v1
kind: Secret
metadata:
  name: mongodb
type: Opaque
data:
  database-password: MTIzNDU2
  database-user: cGlvdHI=
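Note that values under data in a Secret are Base64-encoded, not encrypted. The short sketch below decodes the two values from the definition above with the JDK's own Base64 class; the class name `SecretDecodeSketch` and its methods are hypothetical helpers introduced only for this illustration.

```java
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical helper showing that Secret values are plain Base64.
class SecretDecodeSketch {

    // Decodes a Base64 string taken from the "data" section of a Secret.
    public static String decode(String encoded) {
        return new String(Base64.getDecoder().decode(encoded), StandardCharsets.UTF_8);
    }

    // Encodes a plain value the way it has to be stored under "data".
    public static String encode(String plain) {
        return Base64.getEncoder().encodeToString(plain.getBytes(StandardCharsets.UTF_8));
    }

    public static void main(String[] args) {
        System.out.println(decode("cGlvdHI="));   // prints "piotr"
        System.out.println(decode("MTIzNDU2"));   // prints "123456"
    }
}
```

Anyone who can read the Secret can recover the values this way, so access to Secrets still has to be restricted at the cluster level.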

To apply the configuration to Kubernetes cluster we run the following commands.

$ kubectl apply -f kubernetes/mongodb-configmap.yaml
$ kubectl apply -f kubernetes/mongodb-secret.yaml

After that we should inject the configuration properties into the application’s pods. When defining the container configuration inside the Deployment YAML file, we have to include references to the config map and secret entries as environment variables, as shown below.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: employee
  labels:
    app: employee
spec:
  replicas: 1
  selector:
    matchLabels:
      app: employee
  template:
    metadata:
      labels:
        app: employee
    spec:
      containers:
      - name: employee
        image: piomin/employee:1.0
        ports:
        - containerPort: 8080
        env:
        - name: MONGO_DATABASE
          valueFrom:
            configMapKeyRef:
              name: mongodb
              key: database-name
        - name: MONGO_USERNAME
          valueFrom:
            secretKeyRef:
              name: mongodb
              key: database-user
        - name: MONGO_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mongodb
              key: database-password

2. Building service discovery with Kubernetes

We usually run microservices on Kubernetes using Docker containers. One or more containers are grouped into pods, which are the smallest deployable units created and managed in Kubernetes. A good practice is to run only one container inside a single pod. If you would like to scale up your microservice, you just have to increase the number of running pods. All running pods that belong to a single microservice are logically grouped by a Kubernetes Service. This service may be visible outside the cluster, and is able to load balance incoming requests between all running pods. The following service definition groups all pods whose label app equals employee.

apiVersion: v1
kind: Service
metadata:
  name: employee
  labels:
    app: employee
spec:
  ports:
  - port: 8080
    protocol: TCP
  selector:
    app: employee

A Service can be used for accessing an application from outside the Kubernetes cluster or for inter-service communication inside a cluster. However, communication between microservices can be implemented more conveniently with Spring Cloud Kubernetes. First we need to include the following dependency in the project pom.xml.

<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-kubernetes</artifactId>
    <version>0.3.0.BUILD-SNAPSHOT</version>
</dependency>

Then we should enable the discovery client for the application, just as we have always done for discovery with Spring Cloud Netflix Eureka. This allows you to query Kubernetes endpoints (services) by name. This discovery feature is also used by the Spring Cloud Kubernetes Ribbon and Zipkin projects to fetch, respectively, the list of pods defined for a microservice to be load balanced, or the Zipkin servers available to receive traces and spans.

@SpringBootApplication
@EnableDiscoveryClient
@EnableMongoRepositories
@EnableSwagger2
public class EmployeeApplication {

    public static void main(String[] args) {
        SpringApplication.run(EmployeeApplication.class, args);
    }

    // ...

}

The last important thing in this section is to guarantee that Spring application name would be exactly the same as Kubernetes service name for the application. For application employee-service it is employee.

spring:
  application:
    name: employee

3. Building microservice using Docker and deploying on Kubernetes

There is nothing unusual in our sample microservices. We have included some standard Spring dependencies for building REST-based microservices, integrating with MongoDB and generating API documentation using Swagger2.

<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-actuator</artifactId>
</dependency>
<dependency>
    <groupId>io.springfox</groupId>
    <artifactId>springfox-swagger2</artifactId>
    <version>2.9.2</version>
</dependency>
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-mongodb</artifactId>
</dependency>

In order to integrate with MongoDB we should create interface that extends standard Spring Data CrudRepository.

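// Type parameters: the entity class and the type of its @Id field
public interface EmployeeRepository extends CrudRepository<Employee, String> {

    List<Employee> findByDepartmentId(Long departmentId);

    List<Employee> findByOrganizationId(Long organizationId);

}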

Entity class should be annotated with Mongo @Document and a primary key field with @Id.

@Document(collection = "employee")
public class Employee {

    @Id
    private String id;
    private Long organizationId;
    private Long departmentId;
    private String name;
    private int age;
    private String position;

    // ...

}

The repository bean has been injected to the controller class. Here’s the full implementation of our REST API inside employee-service.

@RestController
public class EmployeeController {

    private static final Logger LOGGER = LoggerFactory.getLogger(EmployeeController.class);

    @Autowired
    EmployeeRepository repository;

    @PostMapping("/")
    public Employee add(@RequestBody Employee employee) {
        LOGGER.info("Employee add: {}", employee);
        return repository.save(employee);
    }

    @GetMapping("/{id}")
    public Employee findById(@PathVariable("id") String id) {
        LOGGER.info("Employee find: id={}", id);
        return repository.findById(id).get();
    }

    @GetMapping("/")
    public Iterable<Employee> findAll() {
        LOGGER.info("Employee find");
        return repository.findAll();
    }

    @GetMapping("/department/{departmentId}")
    public List<Employee> findByDepartment(@PathVariable("departmentId") Long departmentId) {
        LOGGER.info("Employee find: departmentId={}", departmentId);
        return repository.findByDepartmentId(departmentId);
    }

    @GetMapping("/organization/{organizationId}")
    public List<Employee> findByOrganization(@PathVariable("organizationId") Long organizationId) {
        LOGGER.info("Employee find: organizationId={}", organizationId);
        return repository.findByOrganizationId(organizationId);
    }

}

In order to run our microservices on Kubernetes we should first build the whole Maven project with mvn clean install command. Each microservice has Dockerfile placed in the root directory. Here’s Dockerfile definition for employee-service.

FROM openjdk:8-jre-alpine
ENV APP_FILE employee-service-1.0-SNAPSHOT.jar
ENV APP_HOME /usr/apps
EXPOSE 8080
COPY target/$APP_FILE $APP_HOME/
WORKDIR $APP_HOME
ENTRYPOINT ["sh", "-c"]
CMD ["exec java -jar $APP_FILE"]

Let’s build Docker images for all three sample microservices.

$ cd employee-service
$ docker build -t piomin/employee:1.0 .
$ cd ../department-service
$ docker build -t piomin/department:1.0 .
$ cd ../organization-service
$ docker build -t piomin/organization:1.0 .

The last step is to deploy the Docker containers with the applications on Kubernetes. To do that, just execute kubectl apply on the YAML configuration files. The sample deployment file for employee-service was shown in step 1. All required deployment files are available inside the project repository in the kubernetes directory.

$ kubectl apply -f kubernetes\employee-deployment.yaml
$ kubectl apply -f kubernetes\department-deployment.yaml
$ kubectl apply -f kubernetes\organization-deployment.yaml

4. Communication between microservices with Spring Cloud Kubernetes Ribbon

All the microservices are deployed on Kubernetes. Now it’s worth discussing some aspects related to inter-service communication. The employee-service application, in contrast to the other microservices, does not invoke any other microservice. Let’s take a look at the other microservices, which call the API exposed by employee-service and communicate with each other (organization-service calls the department-service API).

First we need to include some additional dependencies in the project. We use Spring Cloud Ribbon and OpenFeign. Alternatively, you can also use a Spring @LoadBalanced RestTemplate.

<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-netflix-ribbon</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-kubernetes-ribbon</artifactId>
    <version>0.3.0.BUILD-SNAPSHOT</version>
</dependency>
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-openfeign</artifactId>
</dependency>

Here’s the main class of department-service. It enables the Feign client using the @EnableFeignClients annotation. It works the same as with discovery based on Spring Cloud Netflix Eureka. OpenFeign uses Ribbon for client-side load balancing. Spring Cloud Kubernetes Ribbon provides some beans that force Ribbon to communicate with the Kubernetes API through the Fabric8 KubernetesClient.

@SpringBootApplication
@EnableDiscoveryClient
@EnableFeignClients
@EnableMongoRepositories
@EnableSwagger2
public class DepartmentApplication {

    public static void main(String[] args) {
        SpringApplication.run(DepartmentApplication.class, args);
    }

    // ...

}
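The idea behind client-side load balancing can be sketched in a few lines: the client holds the list of pod addresses for a service and picks one for each request. The class below is a simplified round-robin chooser written for illustration. It is not Ribbon's actual implementation, and the class name `RoundRobinSketch` and the pod addresses are made up.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical, simplified client-side load balancer (not Ribbon's code).
class RoundRobinSketch {

    private final List<String> servers;
    private final AtomicInteger counter = new AtomicInteger();

    public RoundRobinSketch(List<String> servers) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("No servers available");
        }
        this.servers = new ArrayList<>(servers);
    }

    // Returns the next address in turn; floorMod keeps the index valid after int overflow.
    public String choose() {
        int index = Math.floorMod(counter.getAndIncrement(), servers.size());
        return servers.get(index);
    }

    public static void main(String[] args) {
        // Made-up pod addresses standing in for the list fetched from the Kubernetes API.
        RoundRobinSketch balancer = new RoundRobinSketch(
                Arrays.asList("172.17.0.5:8080", "172.17.0.6:8080"));
        for (int i = 0; i < 4; i++) {
            System.out.println(balancer.choose());
        }
    }
}
```

In the real setup the server list is not static: it is refreshed from the Kubernetes API as pods come and go.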

Here’s the implementation of the Feign client for calling the method exposed by employee-service.

@FeignClient(name = "employee")
public interface EmployeeClient {

    @GetMapping("/department/{departmentId}")
    List<Employee> findByDepartment(@PathVariable("departmentId") String departmentId);

}

Finally, we have to inject the Feign client bean into the REST controller. Now we can call the method defined inside EmployeeClient, which is equivalent to calling the REST endpoint.

@RestController
public class DepartmentController {

    private static final Logger LOGGER = LoggerFactory.getLogger(DepartmentController.class);

    @Autowired
    DepartmentRepository repository;
    @Autowired
    EmployeeClient employeeClient;

    // ...

    @GetMapping("/organization/{organizationId}/with-employees")
    public List<Department> findByOrganizationWithEmployees(@PathVariable("organizationId") Long organizationId) {
        LOGGER.info("Department find: organizationId={}", organizationId);
        List<Department> departments = repository.findByOrganizationId(organizationId);
        // One remote call to employee-service per department
        departments.forEach(d -> d.setEmployees(employeeClient.findByDepartment(d.getId())));
        return departments;
    }

}

5. Building API gateway using Kubernetes Ingress

An Ingress is a collection of rules that allow incoming requests to reach the downstream services. In our microservices architecture the ingress plays the role of an API gateway. To create it we should first prepare a YAML descriptor file. The descriptor should contain the hostname under which the gateway will be available and the mapping rules to the downstream services.

apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: gateway-ingress
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  backend:
    serviceName: default-http-backend
    servicePort: 80
  rules:
  - host: microservices.info
    http:
      paths:
      - path: /employee
        backend:
          serviceName: employee
          servicePort: 8080
      - path: /department
        backend:
          serviceName: department
          servicePort: 8080
      - path: /organization
        backend:
          serviceName: organization
          servicePort: 8080

You have to execute the following command to apply the configuration visible above to the Kubernetes cluster.

$ kubectl apply -f kubernetes\ingress.yaml

To test this solution locally we have to add the mapping between the IP address and the hostname set in the ingress definition to the hosts file, as shown below. After that we can access the services through the ingress using the defined hostname, for example: http://microservices.info/employee.

192.168.99.100 microservices.info

You can check the details of created ingress just by executing command kubectl describe ing gateway-ingress.

6. Enabling API specification on gateway using Swagger2

Ok, what if we would like to expose single swagger documentation for all microservices deployed on Kubernetes? Well, here the things are getting complicated… We can run container with Swagger UI, and map all paths exposed by the ingress manually, but it is rather not a good solution…

In that case we can use Spring Cloud Kubernetes Ribbon one more time – this time together with Spring Cloud Netflix Zuul. Zuul will act as gateway only for serving Swagger API.

Here’s the list of dependencies used in my gateway-service project.

<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-netflix-zuul</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-kubernetes</artifactId>
    <version>0.3.0.BUILD-SNAPSHOT</version>
</dependency>
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-netflix-ribbon</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework.cloud</groupId>
    <artifactId>spring-cloud-starter-kubernetes-ribbon</artifactId>
    <version>0.3.0.BUILD-SNAPSHOT</version>
</dependency>
<dependency>
    <groupId>io.springfox</groupId>
    <artifactId>springfox-swagger-ui</artifactId>
    <version>2.9.2</version>
</dependency>
<dependency>
    <groupId>io.springfox</groupId>
    <artifactId>springfox-swagger2</artifactId>
    <version>2.9.2</version>
</dependency>

Kubernetes discovery client will detect all services exposed on cluster. We would like to display documentation only for our three microservices. That’s why I defined the following routes for Zuul.

zuul:
  routes:
    department:
      path: /department/**
    employee:
      path: /employee/**
    organization:
      path: /organization/**

Now we can use ZuulProperties bean to get routes addresses from Kubernetes discovery, and configure them as Swagger resources as shown below.

@Configuration
public class GatewayApi {

    @Autowired
    ZuulProperties properties;

    @Primary
    @Bean
    public SwaggerResourcesProvider swaggerResourcesProvider() {
        return () -> {
            List<SwaggerResource> resources = new ArrayList<>();
            // One Swagger resource per configured Zuul route
            properties.getRoutes().values().stream()
                .forEach(route -> resources.add(createResource(route.getId(), "2.0")));
            return resources;
        };
    }

    private SwaggerResource createResource(String location, String version) {
        SwaggerResource swaggerResource = new SwaggerResource();
        swaggerResource.setName(location);
        swaggerResource.setLocation("/" + location + "/v2/api-docs");
        swaggerResource.setSwaggerVersion(version);
        return swaggerResource;
    }

}

The gateway-service application should be deployed on the cluster in the same way as the other applications. You can see the list of running services by executing the command kubectl get svc. The Swagger documentation is available at the address http://192.168.99.100:31237/swagger-ui.html.

Conclusion

I’m actually rooting for the Spring Cloud Kubernetes project, which is still at the incubation stage. The popularity of Kubernetes as a platform has grown rapidly over the last few months, but it still has some weaknesses. One of them is inter-service communication. Kubernetes doesn’t give us many out-of-the-box mechanisms for configuring more advanced rules. This is one reason for the creation of service mesh frameworks for Kubernetes such as Istio or Linkerd. While these projects are still relatively new, Spring Cloud is a stable, opinionated framework. Why not use it to provide service discovery, inter-service communication or load balancing? Thanks to Spring Cloud Kubernetes it is possible.

Mastering Spring Cloud

Let me share with you the result of my last couple of months of work: the book published on 26th April by Packt. The book Mastering Spring Cloud is closely linked to the topics frequently covered on this blog. It describes how to build microservices using the Spring Cloud framework. I tried to write this book in the style familiar from this blog, where I focus on giving you practical samples of working code without unnecessary small talk and scribbles 🙂 If you like my style of writing, and you are also interested in the Spring Cloud framework and microservices, this book is just for you 🙂

The book consists of fifteen chapters, in which I guide you from the basic to the most advanced examples illustrating use cases for almost all projects that are a part of Spring Cloud. While writing blog posts I do not always have time to go into all the details related to Spring Cloud, since I try to describe many different, interesting trends and solutions in the area of Java development. The book describes many details related to the most important areas of Spring Cloud, such as service discovery, distributed configuration, inter-service communication, security, logging, testing and continuous delivery. It is available on the http://www.packtpub.com site: https://www.packtpub.com/application-development/mastering-spring-cloud. A detailed description of all the topics raised in the book is available on that site.

Personally, I particularly recommend reading the following more advanced subjects described in the book:

- Peer-to-peer replication between multiple instances of Eureka servers, and using zoning mechanism in inter-service communication

- Automatically reloading configuration after changes with Spring Cloud Config push notifications mechanism based on Spring Cloud Bus

- Advanced configuration of inter-service communication with Ribbon client-side load balancer and Feign client

- Enabling SSL secure communication between microservices and basic elements of microservices-based architecture like service discovery or configuration server

- Building messaging microservices based on the publish/subscribe communication model, including consumer grouping, partitioning and scaling with Spring Cloud Stream and message brokers (Apache Kafka, RabbitMQ)

- Setting up continuous delivery for Spring Cloud microservices with Jenkins and Docker

- Using Docker for running Spring Cloud microservices on Kubernetes platform simulated locally by Minikube

- Deploying Spring Cloud microservices on cloud platforms like Pivotal Web Services (Pivotal Cloud Foundry hosted cloud solution) and Heroku

Those examples and many others are available together with this book. At the end, a short description taken from packtpub.com site:

Developing, deploying, and operating cloud applications should be as easy as local applications. This should be the governing principle behind any cloud platform, library, or tool. Spring Cloud–an open-source library–makes it easy to develop JVM applications for the cloud. In this book, you will be introduced to Spring Cloud and will master its features from the application developer’s point of view.

Circuit Breaker, Fallback and Load Balancing with Apache Camel

Apache Camel has just released a new version of its framework, 2.19. In one of my previous articles on DZone I described the microservices support that was released in Camel 2.18. There are some new features in the ServiceCall EIP component, which is responsible for microservice calls. You can see example source code based on the sample from my article on DZone. It is available on GitHub under the new branch fallback.

In the code fragment below you can see a DSL route configuration with support for the Hystrix circuit breaker, the Ribbon load balancer, and Consul service discovery and registration. For service discovery in the route definition you can also use other solutions instead of Consul, such as etcd (etcdServiceDiscovery) or Kubernetes (kubernetesServiceDiscovery).

from("direct:account")
    .to("bean:customerService?method=findById(${header.id})")
    .log("Msg: ${body}").enrich("direct:acc", new AggregationStrategyImpl());

from("direct:acc").setBody().constant(null)
    .hystrix()
        .hystrixConfiguration()
            .executionTimeoutInMilliseconds(2000)
        .end()
        .serviceCall()
            .name("account//account")
            .component("netty4-http")
            .ribbonLoadBalancer("ribbon-1")
            .consulServiceDiscovery("http://192.168.99.100:8500")
        .end()
        .unmarshal(format)
    .endHystrix()
    .onFallback()
    .to("bean:accountFallback?method=getAccounts");

We can easily configure all Hystrix parameters just by calling the hystrixConfiguration method. In the sample above Hystrix waits a maximum of 2 seconds for the response from the remote service. In case of a timeout the fallback bean is called. The fallback bean implementation is really simple: it returns an empty list.

@Service
public class AccountFallback {

    public List<Account> getAccounts() {
        return new ArrayList<>();
    }

}
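The timeout-plus-fallback behaviour described above can be illustrated without Camel or Hystrix. The following is a minimal sketch using only the JDK: it runs a call on a separate thread, waits up to a given timeout, and returns the fallback value when the call is too slow or fails. The class `TimeoutFallbackSketch`, the method `callWithFallback` and the timings are assumptions made for this example; real Hystrix adds thread pools, metrics and circuit state on top of this idea.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.concurrent.Callable;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Supplier;

// Hypothetical illustration of "wait at most N ms, otherwise use the fallback".
class TimeoutFallbackSketch {

    public static <T> T callWithFallback(Supplier<T> call, Supplier<T> fallback, long timeoutMs) {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        Callable<T> task = call::get;
        Future<T> future = executor.submit(task);
        try {
            return future.get(timeoutMs, TimeUnit.MILLISECONDS);
        } catch (TimeoutException | ExecutionException e) {
            // Too slow or failed: give up on the remote call and use the fallback.
            future.cancel(true);
            return fallback.get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            return fallback.get();
        } finally {
            // Interrupts the worker if it is still running so the JVM can exit.
            executor.shutdownNow();
        }
    }

    public static void main(String[] args) {
        // Simulated remote service that answers after 500 ms.
        Supplier<List<String>> slowCall = () -> {
            try {
                Thread.sleep(500);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            return Arrays.asList("account-1", "account-2");
        };
        // With a 100 ms limit the fallback (an empty list) is returned.
        List<String> result = callWithFallback(slowCall, ArrayList::new, 100);
        System.out.println(result);
    }
}
```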

Alternatively, the configuration can be implemented using object declarations. Here is the service call configuration with Ribbon and Consul. Additionally, we can provide some parameters to Ribbon, such as the client read timeout or the maximum number of retry attempts. Unfortunately, it seems they don’t work in this version of Apache Camel 🙂 (you can try to test it yourself). I hope this will be corrected soon.

ServiceCallConfigurationDefinition def = new ServiceCallConfigurationDefinition();
ConsulConfiguration config = new ConsulConfiguration();
config.setUrl("http://192.168.99.100:8500");
config.setComponent("netty4-http");
ConsulServiceDiscovery discovery = new ConsulServiceDiscovery(config);

RibbonConfiguration c = new RibbonConfiguration();
c.addProperty("MaxAutoRetries", "0");
c.addProperty("MaxAutoRetriesNextServer", "1");
c.addProperty("ReadTimeout", "1000");
c.setClientName("ribbon-1");
RibbonServiceLoadBalancer lb = new RibbonServiceLoadBalancer(c);
lb.setServiceDiscovery(discovery);

def.setComponent("netty4-http");
def.setLoadBalancer(lb);
def.setServiceDiscovery(discovery);
context.setServiceCallConfiguration(def);

I described a similar case for Spring Cloud and Netflix OSS in one of my previous articles. Just like in the example presented there, I also set a delay inside the account service, which depends on the port on which the microservice was started.

@Value("${port}")
private int port;

public List<Account> findByCustomerId(Integer customerId) {
    List<Account> l = new ArrayList<>();
    l.add(new Account(1, "1234567890", 4321, customerId));
    l.add(new Account(2, "1234567891", 12346, customerId));
    // Instances started on an even port simulate a slow service
    if (port%2 == 0) {
        try {
            Thread.sleep(5000);
        } catch (InterruptedException e) {
            e.printStackTrace();
        }
    }
    return l;
}

The results for the Spring Cloud sample were much more satisfying. The configuration parameters introduced there, such as the read timeout for Ribbon, worked, and in addition Hystrix automatically routed a much smaller share of requests to the slow service: only about 2%, with the rest going to the instance that does not block its thread for 5 seconds. This shows that Apache Camel still has a few things to improve if it wants to compete with the Spring Cloud framework in microservices support.

Part 3: Creating Microservices: Circuit Breaker, Fallback and Load Balancing with Spring Cloud

Probably you read some articles about Hystrix and you know in what purpose it is used for. Today I would like to show you an example of exactly how to use it, which gives you the ability to combine with other tools from Netflix OSS stack like Feign and Ribbon. In this I assume that you have basic knowledge on topics such as microservices, load balancing, service discovery. If not I suggest you read some articles about it, for example my short introduction to microservices architecture available here: Part 1: Creating microservice using Spring Cloud, Eureka and Zuul. The code sample used in that article is also also used now. There is also sample source code available on GitHub. For the sample described now see hystrix branch, for basic sample master branch.

Let’s look at some scenarios for using fallback and circuit breaker. We have Customer Service, which calls an API method from Account Service. There are two running instances of Account Service. The requests to the Account Service instances are load balanced 50/50 by the Ribbon client.

Scenario 1

Hystrix is disabled for the Feign client (1), and the auto retries mechanism is disabled for the Ribbon client both on the local instance (2) and on other instances (3). The Ribbon read timeout is shorter than the maximum request processing time (4). This scenario also occurs with the default Spring Cloud configuration without Hystrix. When you call the customer test method you sometimes receive the full response and sometimes an HTTP 500 error code (50/50).

    ribbon:
      eureka:
        enabled: true
      MaxAutoRetries: 0 #(2)
      MaxAutoRetriesNextServer: 0 #(3)
      ReadTimeout: 1000 #(4)

    feign:
      hystrix:
        enabled: false #(1)

Scenario 2

Hystrix is still disabled for the Feign client (1), and the auto retries mechanism is disabled for the Ribbon client on the local instance (2) but enabled once on other instances (3). You always receive the full response. If your request is received by the instance with the delayed response, it times out after 1 second and then Ribbon calls another instance, which in this case is not delayed. You can always change MaxAutoRetries to a positive value, but that gives us nothing in this sample.

    ribbon:
      eureka:
        enabled: true
      MaxAutoRetries: 0 #(2)
      MaxAutoRetriesNextServer: 1 #(3)
      ReadTimeout: 1000 #(4)

    feign:
      hystrix:
        enabled: false #(1)
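The difference between Scenario 1 and Scenario 2 can be illustrated with a small plain-Java model. This is only a sketch under stated assumptions, not Ribbon's actual implementation: the class `RetryModel`, its `call` method and the 0 ms response time of the fast instance are hypothetical, and instances are simply tried in order starting from the one picked first.

```java
/** Toy model of a load-balanced call with a read timeout and retries (not Ribbon's actual code). */
class RetryModel {

    /** Outcome of one logical call: did it succeed, and how long did the caller wait? */
    static final class Outcome {
        final boolean success;
        final long waitedMs;

        Outcome(boolean success, long waitedMs) {
            this.success = success;
            this.waitedMs = waitedMs;
        }

        @Override
        public String toString() {
            return (success ? "full response" : "error") + " after " + waitedMs + " ms";
        }
    }

    /**
     * @param delaysMs                 simulated response time of each instance
     * @param first                    index of the instance the load balancer picked first
     * @param readTimeoutMs            models ReadTimeout
     * @param maxAutoRetries           models MaxAutoRetries (retries on the same instance)
     * @param maxAutoRetriesNextServer models MaxAutoRetriesNextServer (other instances to try)
     */
    static Outcome call(long[] delaysMs, int first, long readTimeoutMs,
                        int maxAutoRetries, int maxAutoRetriesNextServer) {
        long waited = 0;
        for (int server = 0; server <= maxAutoRetriesNextServer; server++) {
            int idx = (first + server) % delaysMs.length;
            for (int attempt = 0; attempt <= maxAutoRetries; attempt++) {
                if (delaysMs[idx] <= readTimeoutMs) {
                    // The instance answered before the read timeout expired
                    return new Outcome(true, waited + delaysMs[idx]);
                }
                // The attempt timed out; the caller has waited one full read timeout
                waited += readTimeoutMs;
            }
        }
        return new Outcome(false, waited);
    }

    public static void main(String[] args) {
        // Instance 0 is fast (assumed 0 ms), instance 1 sleeps for 5 seconds
        long[] delays = {0, 5000};
        System.out.println("Scenario 1: " + call(delays, 1, 1000, 0, 0));
        System.out.println("Scenario 2: " + call(delays, 1, 1000, 0, 1));
    }
}
```

In this model a request that first hits the delayed instance fails after 1000 ms with the Scenario 1 settings, while with the Scenario 2 settings it succeeds after waiting roughly one read timeout, because the second attempt goes to the other instance.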

Scenario 3

Here is a not very elegant solution to the problem. We set ReadTimeout to a value bigger than the delay inside the API method (5000 ms).

    ribbon:
      eureka:
        enabled: true
      MaxAutoRetries: 0
      MaxAutoRetriesNextServer: 0
      ReadTimeout: 10000

    feign:
      hystrix:
        enabled: false

Generally the configurations from Scenarios 2 and 3 are correct: you always get the full response. But in some cases you will wait more than 1 second (Scenario 2) or more than 5 seconds (Scenario 3), and the delayed instance still receives 50% of the requests from the Ribbon client. Fortunately there is Hystrix, a circuit breaker.

Scenario 4

Let’s enable Hystrix simply by removing the feign property. Auto retries are disabled for the Ribbon client (1) and its read timeout (2) is bigger than Hystrix’s timeout (3). 1000 ms is also the default value of the Hystrix timeoutInMilliseconds property. The Hystrix circuit breaker and fallback will work for the delayed instance of account service. For the first few requests you receive the fallback response from Hystrix. Then the delayed instance is cut off from requests, and most of them are directed to the instance that is not delayed.

    ribbon:
      eureka:
        enabled: true
      MaxAutoRetries: 0 #(1)
      MaxAutoRetriesNextServer: 0
      ReadTimeout: 2000 #(2)

    hystrix:
      command:
        default:
          execution:
            isolation:
              thread:
                timeoutInMilliseconds: 1000 #(3)
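To make the idea of "fallback first, then cutting off the failing call" more concrete, here is a minimal circuit breaker sketch in plain Java. It is deliberately simplified and is not how Hystrix is implemented: Hystrix trips on error statistics over a rolling window, while this sketch trips after a number of consecutive failures. The class `SimpleCircuitBreaker`, its parameters and the explicit `nowMillis` clock argument are all hypothetical names chosen for illustration.

```java
import java.util.function.Supplier;

/** Minimal consecutive-failure circuit breaker with a fallback (illustration only, not Hystrix). */
class SimpleCircuitBreaker<T> {
    private final int failureThreshold; // consecutive failures needed to open the circuit
    private final long openMillis;      // how long the circuit stays open
    private int consecutiveFailures = 0;
    private long openedAt = -1;         // -1 means the circuit is closed

    SimpleCircuitBreaker(int failureThreshold, long openMillis) {
        this.failureThreshold = failureThreshold;
        this.openMillis = openMillis;
    }

    boolean isOpen(long nowMillis) {
        return openedAt >= 0 && nowMillis - openedAt < openMillis;
    }

    /** Runs the primary call, or returns the fallback if it fails or the circuit is open. */
    T call(Supplier<T> primary, Supplier<T> fallback, long nowMillis) {
        if (isOpen(nowMillis)) {
            // Short-circuit: the primary call is not even attempted
            return fallback.get();
        }
        try {
            T result = primary.get();
            // A success closes the circuit and resets the failure counter
            consecutiveFailures = 0;
            openedAt = -1;
            return result;
        } catch (RuntimeException e) {
            consecutiveFailures++;
            if (consecutiveFailures >= failureThreshold) {
                openedAt = nowMillis;
            }
            return fallback.get();
        }
    }

    public static void main(String[] args) {
        SimpleCircuitBreaker<String> breaker = new SimpleCircuitBreaker<>(2, 5000);
        Supplier<String> slowCall = () -> { throw new IllegalStateException("timed out"); };
        Supplier<String> emptyAccounts = () -> "[]";
        for (long now = 0; now < 4; now++) {
            System.out.println(breaker.call(slowCall, emptyAccounts, now)
                    + " open=" + breaker.isOpen(now));
        }
    }
}
```

The time is passed in explicitly so the behaviour is easy to reason about: the first failing calls reach the primary supplier and return the fallback, and once the threshold is reached further calls skip the primary until the open period has elapsed.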

Scenario 5

This scenario builds on Scenario 4. Now the Ribbon timeout (2) is lower than the Hystrix timeout (3), and the auto retries mechanism is enabled for the local instance (1) and for other instances (4). The result is the same as for Scenarios 2 and 3: you receive the full response, but Hystrix is enabled and it cuts off the delayed instance from future requests.

    ribbon:
      eureka:
        enabled: true
      MaxAutoRetries: 3 #(1)
      MaxAutoRetriesNextServer: 1 #(4)
      ReadTimeout: 1000 #(2)

    hystrix:
      command:
        default:
          execution:
            isolation:
              thread:
                timeoutInMilliseconds: 10000 #(3)
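A rough way to check whether the Hystrix timeout leaves room for all Ribbon retries is to estimate the worst case, assuming every attempt runs into the full ReadTimeout and ignoring connect time. The helper below is a hypothetical back-of-the-envelope calculation, not part of any library.

```java
/** Back-of-the-envelope timeout budget for Ribbon retries versus the Hystrix timeout. */
class TimeoutBudget {

    /** Worst-case time spent in Ribbon if every attempt hits the read timeout. */
    static long worstCaseRibbonMillis(long readTimeoutMs, int maxAutoRetries,
                                      int maxAutoRetriesNextServer) {
        // (retries on the same instance + 1) attempts on each of (next servers + 1) instances
        return readTimeoutMs * (maxAutoRetries + 1L) * (maxAutoRetriesNextServer + 1L);
    }

    /** True if Hystrix would time out before Ribbon has exhausted all of its attempts. */
    static boolean hystrixFiresFirst(long hystrixTimeoutMs, long ribbonWorstCaseMs) {
        return hystrixTimeoutMs < ribbonWorstCaseMs;
    }

    public static void main(String[] args) {
        long scenario4 = worstCaseRibbonMillis(2000, 0, 0);
        long scenario5 = worstCaseRibbonMillis(1000, 3, 1);
        System.out.println("Scenario 4: " + scenario4 + " ms, Hystrix first: "
                + hystrixFiresFirst(1000, scenario4));
        System.out.println("Scenario 5: " + scenario5 + " ms, Hystrix first: "
                + hystrixFiresFirst(10000, scenario5));
    }
}
```

With the Scenario 5 values the estimate is 1000 × 4 × 2 = 8000 ms, which stays below the 10000 ms Hystrix timeout, so the retries can complete. With the Scenario 4 values the single 2000 ms attempt exceeds the 1000 ms Hystrix timeout, so Hystrix times out first and returns the fallback.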

I could imagine a few other scenarios. But the idea was just to show the differences in circuit breaker and fallback behaviour when modifying configuration properties for Feign, Ribbon and Hystrix in application.yml.

Hystrix

Let’s take a closer look at the standard Hystrix circuit breaker and its usage described in Scenario 4. To enable Hystrix in your Spring Boot application you have to add the following dependencies to pom.xml. The second step is to add the @EnableCircuitBreaker annotation to the main application class, and also @EnableHystrixDashboard if you would like to have the UI dashboard available.

    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-hystrix</artifactId>
    </dependency>
    <dependency>
        <groupId>org.springframework.cloud</groupId>
        <artifactId>spring-cloud-starter-hystrix-dashboard</artifactId>
    </dependency>

The Hystrix fallback is set on the Feign client inside customer service.

    @FeignClient(value = "account-service", fallback = AccountFallback.class)
    public interface AccountClient {

        @RequestMapping(method = RequestMethod.GET, value = "/accounts/customer/{customerId}")
        List<Account> getAccounts(@PathVariable("customerId") Integer customerId);

    }

The fallback implementation is really simple. In this case I just return an empty list instead of the customer’s account list received from account service.

    @Component
    public class AccountFallback implements AccountClient {

        @Override
        public List<Account> getAccounts(Integer customerId) {
            List<Account> acc = new ArrayList<Account>();
            return acc;
        }

    }

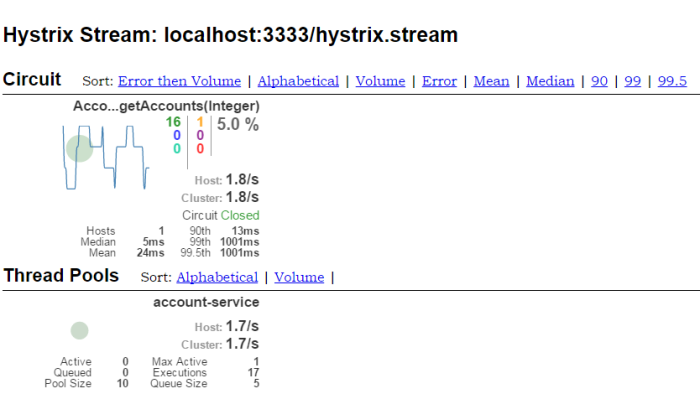

Now we can perform some tests. Let’s start the discovery service, two instances of account service on different ports (set with the -DPORT VM argument during startup) and customer service. The endpoint for tests is /customers/{id}. There is also a JUnit test class, pl.piomin.microservices.customer.ApiTest in the customer-service module, which sends multiple requests to this endpoint.

    @RequestMapping("/customers/{id}")
    public Customer findById(@PathVariable("id") Integer id) {
        logger.info(String.format("Customer.findById(%s)", id));
        Customer customer = customers.stream()
                .filter(it -> it.getId().intValue() == id.intValue())
                .findFirst()
                .get();
        List<Account> accounts = accountClient.getAccounts(id);
        customer.setAccounts(accounts);
        return customer;
    }

I enabled Hystrix Dashboard on the account-service main class. To access it, open http://localhost:2222/hystrix in your web browser and then type the address of the Hystrix stream from customer-service, http://localhost:3333/hystrix.stream. When I ran the test that sends 1000 requests to customer service, about 20 (2%) of them were forwarded to the delayed instance of account service, and the remaining ones to the instance that is not delayed. The Hystrix dashboard during that test is visible below. For more advanced Hystrix configuration refer to its documentation available here.