Since Spring 5 and Spring Boot 2 there has been full support for reactive REST APIs through the Spring WebFlux project. Spring Data has also been steadily adding support for reactive NoSQL databases and, more recently, for SQL databases too. Since Spring Data Moore we can take advantage of a reactive template and repository for Elasticsearch, which I have already described in one of my previous articles, Reactive Elasticsearch With Spring Boot.


Spring REST Docs versus SpringFox Swagger for API documentation

Recently, I have come across some articles and mentions of Spring REST Docs, where it has been presented as a better alternative to traditional Swagger docs. Until now, I have always used Swagger for building API documentation, so I decided to try Spring REST Docs. You may even read some references to Swagger on the main page of that Spring project (https://spring.io/projects/spring-restdocs), for example: “This approach frees you from the limitations of the documentation produced by tools like Swagger”. Are you interested in building API documentation using Spring REST Docs? Let’s take a closer look at that project!

The first difference in comparison to Swagger is a test-driven approach to generating API documentation. Because the documentation is produced from tests, Spring REST Docs ensures that it always accurately matches the actual behavior of the API. When using the SpringFox Swagger library you just need to enable it for the project and provide some configuration to make it work according to your expectations. I have already described how to use Swagger 2 to automatically build API documentation for a Spring Boot based application in two of my previous articles:

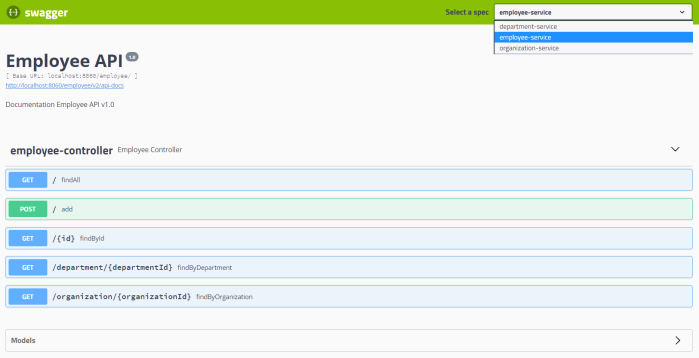

- Microservices API Documentation with Swagger2: it provides an example of building API documentation for many microservices hidden behind a single API gateway based on Spring Cloud Netflix Zuul

- Versioning REST API with Spring Boot and Swagger: it provides an example of building documentation for different versions of an API exposed by a single application

The articles mentioned above describe in detail how to use SpringFox Swagger in your Spring Boot application to automatically generate API documentation based on the source code. Here I’ll give you only a short introduction to that technology, so that you can easily see the differences between using Swagger2 and Spring REST Docs.

1. Using Swagger2 with Spring Boot

To enable the SpringFox library for your application you need to include the following dependencies in pom.xml.

    <dependency>
        <groupId>io.springfox</groupId>
        <artifactId>springfox-swagger2</artifactId>
        <version>2.9.2</version>
    </dependency>
    <dependency>
        <groupId>io.springfox</groupId>
        <artifactId>springfox-swagger-ui</artifactId>
        <version>2.9.2</version>
    </dependency>

Then you should annotate the main or configuration class with @EnableSwagger2. You can also customize the behaviour of the SpringFox library by declaring a Docket bean.

    @Bean
    public Docket swaggerEmployeeApi() {
        return new Docket(DocumentationType.SWAGGER_2)
            .select()
                .apis(RequestHandlerSelectors.basePackage("pl.piomin.services.employee.controller"))
                .paths(PathSelectors.any())
            .build()
            .apiInfo(new ApiInfoBuilder().version("1.0").title("Employee API").description("Documentation Employee API v1.0").build());
    }

Now, after running the application, the documentation is available under the context path /v2/api-docs. You can also display it in your web browser using Swagger UI, available at /swagger-ui.html.

Looks easy? Let’s see how to do this with Spring REST Docs.

2. Using Asciidoctor with Spring Boot

There are some other differences between Spring REST Docs and SpringFox Swagger. By default, Spring REST Docs uses Asciidoctor. Asciidoctor processes plain text and produces HTML, styled and laid out to suit your needs. If you prefer, Spring REST Docs can also be configured to use Markdown. This really distinguishes it from Swagger, which uses its own notation called the OpenAPI Specification.

Spring REST Docs makes use of snippets produced by tests written with Spring MVC’s test framework, Spring WebFlux’s WebTestClient or REST Assured 3. I’ll show you an example based on Spring MVC.

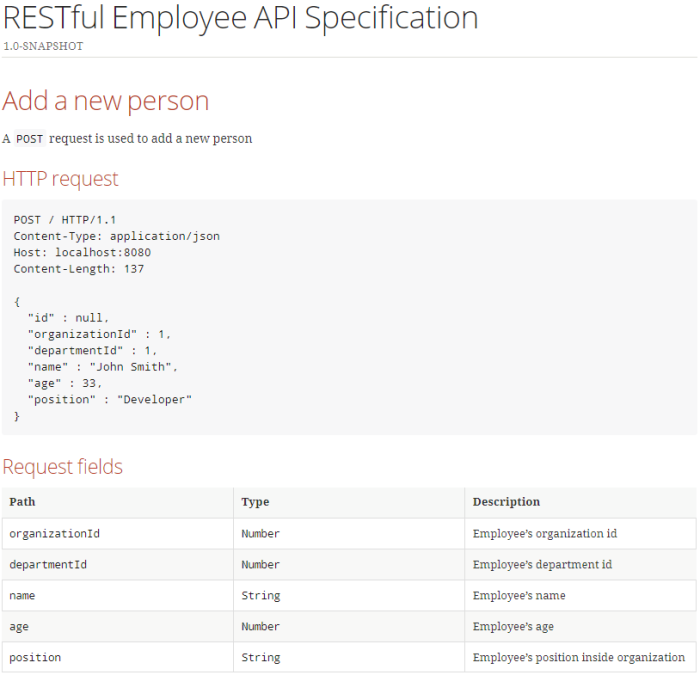

I suggest you begin by creating a base Asciidoc file. It should be placed in the src/main/asciidoc directory in your application source code. I don’t know if you are familiar with Asciidoctor notation, but it is really intuitive. The sample visible below shows two important things. First, we display the version of the project taken from pom.xml. Then we include the snippets generated during JUnit tests by declaring a macro called operation, which contains the document name and a list of snippets. We can choose between snippets such as curl-request, http-request, http-response, httpie-request, links, request-body, request-fields, response-body, response-fields or path-parameters. The document name is determined by the name of the test method in our JUnit test class.

    = RESTful Employee API Specification
    {project-version}
    :doctype: book

    == Add a new person

    A `POST` request is used to add a new person

    operation::add-person[snippets='http-request,request-fields,http-response']

    == Find a person by id

    A `GET` request is used to find a person by id

    operation::find-person-by-id[snippets='http-request,path-parameters,http-response,response-fields']

The source code fragment in Asciidoc notation is just a template. We would like to generate an HTML file that nicely displays all our automatically generated stuff. To achieve this we should enable the asciidoctor-maven-plugin in the project’s pom.xml. In order to display the Maven project version we need to pass it in the attributes section of the Asciidoc plugin configuration. We also need to add the spring-restdocs-asciidoctor dependency to that plugin.

    <plugin>
        <groupId>org.asciidoctor</groupId>
        <artifactId>asciidoctor-maven-plugin</artifactId>
        <version>1.5.6</version>
        <executions>
            <execution>
                <id>generate-docs</id>
                <phase>prepare-package</phase>
                <goals>
                    <goal>process-asciidoc</goal>
                </goals>
                <configuration>
                    <backend>html</backend>
                    <doctype>book</doctype>
                    <attributes>
                        <project-version>${project.version}</project-version>
                    </attributes>
                </configuration>
            </execution>
        </executions>
        <dependencies>
            <dependency>
                <groupId>org.springframework.restdocs</groupId>
                <artifactId>spring-restdocs-asciidoctor</artifactId>
                <version>2.0.0.RELEASE</version>
            </dependency>
        </dependencies>
    </plugin>

OK, the documentation is now generated automatically during the Maven build from our api.adoc file located inside the src/main/asciidoc directory. But we still need to develop JUnit API tests that automatically generate the required snippets. Let’s do that in the next step.

3. Generating snippets for Spring MVC

First, we should enable Spring REST Docs for our project. To achieve it we have to include the following dependency.

    <dependency>
        <groupId>org.springframework.restdocs</groupId>
        <artifactId>spring-restdocs-mockmvc</artifactId>
        <scope>test</scope>
    </dependency>

Now, all we need to do is to implement JUnit tests. Spring Boot provides an @AutoConfigureRestDocs annotation that allows you to leverage Spring REST Docs in your tests.

In fact, we need to prepare a standard Spring MVC test using the MockMvc bean. I also mocked some methods implemented by EmployeeRepository. Then, I used some static methods provided by Spring REST Docs that support generating documentation of request and response payloads. The first of those methods is document("{method-name}/",...), which is responsible for generating snippets under the directory target/generated-snippets/{method-name}, where method-name is the name of the test method formatted using kebab-case. I have described all the JSON fields in the requests and responses using the requestFields(...) and responseFields(...) methods.

@RunWith(SpringRunner.class)

@WebMvcTest(EmployeeController.class)

@AutoConfigureRestDocs

public class EmployeeControllerTest {

@MockBean

EmployeeRepository repository;

@Autowired

MockMvc mockMvc;

private ObjectMapper mapper = new ObjectMapper();

@Before

public void setUp() {

Employee e = new Employee(1L, 1L, "John Smith", 33, "Developer");

e.setId(1L);

when(repository.add(Mockito.any(Employee.class))).thenReturn(e);

when(repository.findById(1L)).thenReturn(e);

}

@Test

public void addPerson() throws JsonProcessingException, Exception {

Employee employee = new Employee(1L, 1L, "John Smith", 33, "Developer");

mockMvc.perform(post("/").contentType(MediaType.APPLICATION_JSON).content(mapper.writeValueAsString(employee)))

.andExpect(status().isOk())

.andDo(document("{method-name}/", requestFields(

fieldWithPath("id").description("Employee id").ignored(),

fieldWithPath("organizationId").description("Employee's organization id"),

fieldWithPath("departmentId").description("Employee's department id"),

fieldWithPath("name").description("Employee's name"),

fieldWithPath("age").description("Employee's age"),

fieldWithPath("position").description("Employee's position inside organization")

)));

}

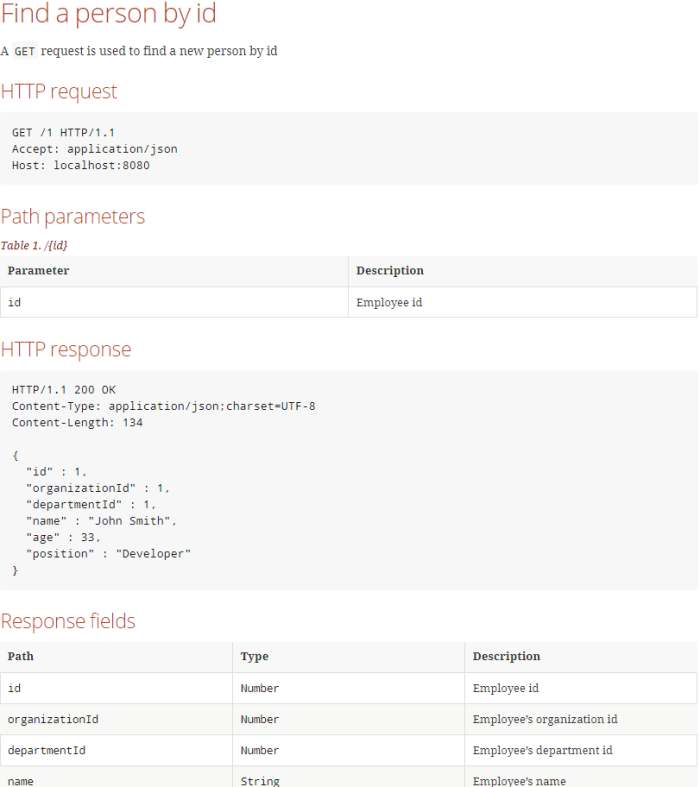

@Test

public void findPersonById() throws JsonProcessingException, Exception {

this.mockMvc.perform(get("/{id}", 1).accept(MediaType.APPLICATION_JSON))

.andExpect(status().isOk())

.andDo(document("{method-name}/", responseFields(

fieldWithPath("id").description("Employee id"),

fieldWithPath("organizationId").description("Employee's organization id"),

fieldWithPath("departmentId").description("Employee's department id"),

fieldWithPath("name").description("Employee's name"),

fieldWithPath("age").description("Employee's age"),

fieldWithPath("position").description("Employee's position inside organization")

), pathParameters(parameterWithName("id").description("Employee id"))));

}

}
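To make the naming rule concrete, here is a minimal sketch in plain Java of how a camelCase test method name maps to the kebab-case directory name used by the operation macro in the Asciidoc template. The class and method names are hypothetical and this is not the code Spring REST Docs uses internally; it only illustrates the conversion for simple names like the ones in this test class.

```java
// Illustrative only: mimics how the "{method-name}" placeholder turns
// a camelCase test method name into a kebab-case directory name.
final class SnippetNames {

    private SnippetNames() {
    }

    // Inserts a hyphen before every upper-case letter (except at index 0)
    // and lower-cases it, e.g. addPerson -> add-person.
    static String toKebabCase(String methodName) {
        StringBuilder sb = new StringBuilder();
        for (int i = 0; i < methodName.length(); i++) {
            char c = methodName.charAt(i);
            if (Character.isUpperCase(c)) {
                if (i > 0) {
                    sb.append('-');
                }
                sb.append(Character.toLowerCase(c));
            } else {
                sb.append(c);
            }
        }
        return sb.toString();
    }

    public static void main(String[] args) {
        System.out.println(toKebabCase("addPerson"));      // add-person
        System.out.println(toKebabCase("findPersonById")); // find-person-by-id
    }
}
```

So the snippets produced by addPerson() and findPersonById() end up in the add-person and find-person-by-id directories, which are exactly the document names referenced in api.adoc.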

If you would like to customize some settings of Spring REST Docs you should provide a @TestConfiguration class inside the JUnit test class. In the following code fragment you may see an example of such customization. I have overridden the default snippet output directory name, index, with a name specific to the test method, and forced the sample requests and responses to be generated with the prettyPrint option (each parameter on a separate line).

    @TestConfiguration
    static class CustomizationConfiguration implements RestDocsMockMvcConfigurationCustomizer {

        @Override
        public void customize(MockMvcRestDocumentationConfigurer configurer) {
            configurer.operationPreprocessors()
                .withRequestDefaults(prettyPrint())
                .withResponseDefaults(prettyPrint());
        }

        @Bean
        public RestDocumentationResultHandler restDocumentation() {
            return MockMvcRestDocumentation.document("{method-name}");
        }

    }

Now, if you execute mvn clean install on your project you should see the following structure inside your output directory.

4. Viewing and publishing API docs

Once we have successfully built our project, the documentation has been generated. We can open the HTML file available at target/generated-docs/api.html. It provides the full documentation of our API.

And the next part…

You may also want to publish it inside your application’s fat JAR file. If you configure maven-resources-plugin following the example visible below, it will be available under the /static/docs directory inside the JAR.

    <plugin>
        <artifactId>maven-resources-plugin</artifactId>
        <executions>
            <execution>
                <id>copy-resources</id>
                <phase>prepare-package</phase>
                <goals>
                    <goal>copy-resources</goal>
                </goals>
                <configuration>
                    <outputDirectory>${project.build.outputDirectory}/static/docs</outputDirectory>
                    <resources>
                        <resource>
                            <directory>${project.build.directory}/generated-docs</directory>
                        </resource>
                    </resources>
                </configuration>
            </execution>
        </executions>
    </plugin>

Conclusion

That’s all I wanted to show in this article. The sample service that generates documentation using Spring REST Docs is available on GitHub in the repository https://github.com/piomin/sample-spring-microservices-new/tree/rest-api-docs/employee-service. I’m not sure that Swagger and Spring REST Docs should be treated as competing solutions. I use Swagger for simple testing of an API on a running application, or for exposing a specification that can be used for automated generation of client code. Spring REST Docs is rather used for generating documentation that can be published somewhere, and “is accurate, concise, and well-structured. This documentation then allows your users to get the information they need with a minimum of fuss”. I think there is no obstacle to using Spring REST Docs and SpringFox Swagger together in your project in order to provide the most valuable documentation of the API exposed by the application.

Exporting metrics to InfluxDB and Prometheus using Spring Boot Actuator

Spring Boot Actuator is one of the projects that changed the most with the release of Spring Boot 2. It has gone through major improvements that aimed to simplify customization and to add some new features, such as support for other web technologies, for example the new reactive module, Spring WebFlux. It also adds out-of-the-box support for exporting metrics to InfluxDB, an open source time series database designed to handle high volumes of timestamped data. It is really a great simplification in comparison to the version used with Spring Boot 1.5. You can see for yourself how much by reading one of my previous articles, Custom metrics visualization with Grafana and InfluxDB. I described there how to export metrics generated by Spring Boot Actuator to InfluxDB using an @ExportMetricsWriter bean. The sample Spring Boot application for that article is available in the GitHub repository sample-spring-graphite (https://github.com/piomin/sample-spring-graphite.git) in the branch master. For the current article, I have created the branch spring2 (https://github.com/piomin/sample-spring-graphite/tree/spring2), which shows how to implement the same feature as before using version 2.0 of Spring Boot and Spring Boot Actuator.

Additionally, I’m going to show you how to export the same metrics to another popular monitoring system for efficiently storing time series data: Prometheus. There is one major difference between the models of exporting metrics to InfluxDB and to Prometheus. The first of them is a push-based system, while the second is a pull-based system. So, our sample application needs to actively send data to the InfluxDB monitoring system, while with Prometheus it only has to expose an endpoint that will be periodically fetched for data. Let’s begin with InfluxDB.
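The difference between the two models can be sketched in a few lines of plain Java. All names here (PushVsPull, push, scrape) are hypothetical and have nothing to do with the Micrometer API; the sketch only shows which side initiates the transfer.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicLong;
import java.util.function.Consumer;

// Hypothetical names throughout; this is not Micrometer's API.
final class PushVsPull {

    private final AtomicLong requests = new AtomicLong();

    // Called by the application whenever it handles a request.
    void recordRequest() {
        requests.incrementAndGet();
    }

    // Push model (InfluxDB style): the application decides when to send
    // and actively writes the current value to the monitoring system.
    void push(Consumer<String> monitoringSystem) {
        monitoringSystem.accept("requests value=" + requests.get());
    }

    // Pull model (Prometheus style): the application only exposes the
    // current value; the monitoring system calls this when it scrapes.
    String scrape() {
        return "requests " + requests.get();
    }

    public static void main(String[] args) {
        PushVsPull app = new PushVsPull();
        app.recordRequest();
        app.recordRequest();

        List<String> influx = new ArrayList<>();
        app.push(influx::add);            // initiated by the application
        System.out.println(influx);

        System.out.println(app.scrape()); // initiated by the monitor
    }
}
```

In the push case the application has to know the address of the monitoring system, while in the pull case the monitoring system has to know the address of the application. That is why the InfluxDB address is configured on the application side and the application address is configured on the Prometheus side later in this article.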

1. Running InfluxDB

In the previous article I didn’t write much about this database and its configuration, so now I will say a few words about it. The first step is typical for my examples: we will run a Docker container with InfluxDB. Here’s the simplest command that runs InfluxDB on your local machine and exposes its HTTP API on port 8086.

$ docker run -d --name influx -p 8086:8086 influxdb

Once that container is started, you will probably want to log in to it and execute some commands. Nothing simpler, just run the following command. After logging in you should see the version of InfluxDB running in the target Docker container.

    $ docker exec -it influx influx
    Connected to http://localhost:8086 version 1.5.2
    InfluxDB shell version: 1.5.2

The first step is to create a database. As you can probably guess, it can be achieved using the command create database. Then switch to the newly created database.

    > create database springboot
    > use springboot

Does that syntax look familiar to you? Yes, InfluxDB provides a query language very similar to SQL. It is called InfluxQL, and allows you to define SELECT statements, GROUP BY or INTO clauses, and many more. However, before executing such queries, we should have data stored inside the database, am I right? Now, let’s proceed to the next steps in order to generate some test metrics.

2. Integrating Spring Boot application with InfluxDB

If you include the artifact micrometer-registry-influx in the project’s dependencies, an export to InfluxDB will be enabled automatically. Of course, we also need to include the starter spring-boot-starter-actuator.

    <dependency>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-actuator</artifactId>
    </dependency>
    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-registry-influx</artifactId>
    </dependency>

The only thing you have to do is to override the default address of InfluxDB, because we are running the InfluxDB Docker container on a VM. By default, Spring Boot tries to connect to a database named mydb. However, I have already created the database springboot, so I should also override this default value. In version 2 of Spring Boot all the configuration properties related to Spring Boot Actuator endpoints have been moved to the management.* section.

    management:
      metrics:
        export:
          influx:
            db: springboot
            uri: http://192.168.99.100:8086

You may be a little surprised, after starting a Spring Boot application with actuator included on the classpath, that it exposes only two HTTP endpoints by default: /actuator/info and /actuator/health. That’s because in the newest version of Spring Boot all actuator endpoints other than /health and /info are not exposed over HTTP by default, for security purposes. To expose all the actuator endpoints, you have to set the property management.endpoints.web.exposure.include to '*'.

In the newest version of Spring Boot monitoring of HTTP metrics has been improved significantly. We can enable collecting all Spring MVC metrics by setting the property management.metrics.web.server.auto-time-requests to true. Alternatively, when it is set to false, you can enable metrics for the specific REST controller by annotating it with @Timed. You can also annotate a single method inside controller, to generate metrics only for specific endpoint.

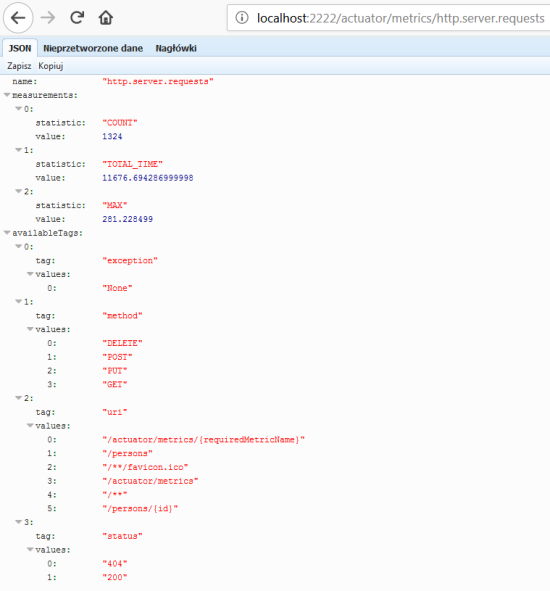

After the application boots you may check out the full list of generated metrics by calling the endpoint GET /actuator/metrics. By default, metrics for Spring MVC controllers are generated under the name http.server.requests. This name can be customized by setting the management.metrics.web.server.requests-metric-name property. If you run the sample application available in my GitHub repository, it is by default available under port 2222. Now, you can check out the list of statistics generated for a single metric by calling the endpoint GET /actuator/metrics/{requiredMetricName}, as shown in the following picture.

3. Building Spring Boot application

The sample Spring Boot application used for generating metrics consists of a single controller that implements basic CRUD operations for manipulating Person entity, repository bean and entity class. The application connects to MySQL database using Spring Data JPA repository providing CRUD implementation. Here’s the controller class.

    @RestController
    @Timed
    public class PersonController {

        protected Logger logger = Logger.getLogger(PersonController.class.getName());

        @Autowired
        PersonRepository repository;

        @GetMapping("/persons/pesel/{pesel}")
        public List<Person> findByPesel(@PathVariable("pesel") String pesel) {
            logger.info(String.format("Person.findByPesel(%s)", pesel));
            return repository.findByPesel(pesel);
        }

        @GetMapping("/persons/{id}")
        public Person findById(@PathVariable("id") Integer id) {
            logger.info(String.format("Person.findById(%d)", id));
            return repository.findById(id).get();
        }

        @GetMapping("/persons")
        public List<Person> findAll() {
            logger.info("Person.findAll()");
            return (List<Person>) repository.findAll();
        }

        @PostMapping("/persons")
        public Person add(@RequestBody Person person) {
            logger.info(String.format("Person.add(%s)", person));
            return repository.save(person);
        }

        @PutMapping("/persons")
        public Person update(@RequestBody Person person) {
            logger.info(String.format("Person.update(%s)", person));
            return repository.save(person);
        }

        @DeleteMapping("/persons/{id}")
        public void remove(@PathVariable("id") Integer id) {
            logger.info(String.format("Person.remove(%d)", id));
            repository.deleteById(id);
        }

    }

Before running the application we have to set up a MySQL database. The most convenient way to achieve this is through the MySQL Docker image. Here’s the command that runs a container with the database grafana, defines a user and password, and exposes MySQL 5 on port 33306.

docker run -d --name mysql -e MYSQL_DATABASE=grafana -e MYSQL_USER=grafana -e MYSQL_PASSWORD=grafana -e MYSQL_ALLOW_EMPTY_PASSWORD=yes -p 33306:3306 mysql:5

Then we need to set some database configuration properties on the application side. All the required tables will be created on application’s boot thanks to setting property spring.jpa.properties.hibernate.hbm2ddl.auto to update.

    spring:
      datasource:
        url: jdbc:mysql://192.168.99.100:33306/grafana?useSSL=false
        username: grafana
        password: grafana
        driverClassName: com.mysql.jdbc.Driver
      jpa:
        properties:
          hibernate:
            dialect: org.hibernate.dialect.MySQL5Dialect
            hbm2ddl.auto: update

4. Generating metrics

After starting the application and the required Docker containers, the only thing that needs to be done is to generate some test statistics. I created a JUnit test class that generates some test data and calls the endpoints exposed by the application in a loop. Here’s a fragment of that test method.

    int ix = new Random().nextInt(100000);
    Person p = new Person();
    p.setFirstName("Jan" + ix);
    p.setLastName("Testowy" + ix);
    p.setPesel(new DecimalFormat("0000000").format(ix) + new DecimalFormat("000").format(ix%100));
    p.setAge(ix%100);
    p = template.postForObject("http://localhost:2222/persons", p, Person.class);
    LOGGER.info("New person: {}", p);
    p = template.getForObject("http://localhost:2222/persons/{id}", Person.class, p.getId());
    p.setAge(ix%100);
    template.put("http://localhost:2222/persons", p);
    LOGGER.info("Person updated: {} with age={}", p, ix%100);
    template.delete("http://localhost:2222/persons/{id}", p.getId());

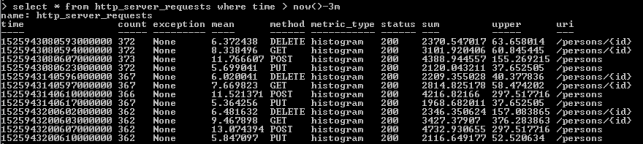

Now, let’s move back to step 1. As you probably remember, I have shown you how to run the influx client in the InfluxDB Docker container. After running for a few minutes, the test should have called the exposed endpoints many times. We can check out the values of the metric http_server_requests stored in InfluxDB. The following query returns the list of measurements collected during the last 3 minutes.
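Such a query can also be sent from Java over the InfluxDB 1.x HTTP API, which accepts GET requests on /query with the db and q parameters. The sketch below only builds the request URL. The InfluxQL text and the class name InfluxQueryUrl are my assumptions for illustration, and the address and database name are the ones configured earlier in this article.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

// Hypothetical helper, not part of any InfluxDB client library.
final class InfluxQueryUrl {

    private InfluxQueryUrl() {
    }

    // Builds a GET URL for the InfluxDB 1.x /query endpoint.
    static URI build(String baseUrl, String database, String influxQl) {
        String db = URLEncoder.encode(database, StandardCharsets.UTF_8);
        String q = URLEncoder.encode(influxQl, StandardCharsets.UTF_8);
        return URI.create(baseUrl + "/query?db=" + db + "&q=" + q);
    }

    public static void main(String[] args) {
        // Assumed query: every point written during the last 3 minutes.
        String influxQl =
            "SELECT * FROM http_server_requests WHERE time > now() - 3m";
        System.out.println(
            build("http://192.168.99.100:8086", "springboot", influxQl));
    }
}
```

The same InfluxQL statement can be typed directly into the influx shell after switching to the springboot database.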

As you can see, all the metrics generated by Spring Boot Actuator are tagged with the following information: method, uri, status and exception. Thanks to those tags we may easily group metrics per single endpoint, including the percentage of failures and successes. Let’s see how to configure and view it in Grafana.
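To show what grouping by tags gives us, here is a small, self-contained Java sketch that computes the success percentage per endpoint from a list of samples. The Sample class, the method name and the rule that a status below 400 counts as a success are my assumptions for this illustration; in practice the same grouping is done by the InfluxDB query behind a Grafana panel.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical types; they only mirror the method, uri and status tags.
final class EndpointStats {

    private EndpointStats() {
    }

    // One recorded request, reduced to the tags used for grouping.
    static final class Sample {
        final String method;
        final String uri;
        final int status;

        Sample(String method, String uri, int status) {
            this.method = method;
            this.uri = uri;
            this.status = status;
        }
    }

    // Returns the percentage of successful requests per "METHOD uri" key.
    // Assumption: an HTTP status below 400 counts as a success.
    static Map<String, Double> successPercentage(List<Sample> samples) {
        Map<String, int[]> counts = new TreeMap<>(); // value: [ok, total]
        for (Sample s : samples) {
            int[] c = counts.computeIfAbsent(
                s.method + " " + s.uri, k -> new int[2]);
            if (s.status < 400) {
                c[0]++;
            }
            c[1]++;
        }
        Map<String, Double> result = new TreeMap<>();
        counts.forEach((key, c) -> result.put(key, 100.0 * c[0] / c[1]));
        return result;
    }
}
```

For example, three successful calls and one failed call to GET /persons/{id} give 75.0 for the key "GET /persons/{id}".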

5. Metrics visualization using Grafana

Once we have successfully exported metrics to InfluxDB, it is time to visualize them using Grafana. First, let’s run a Docker container with Grafana.

$ docker run -d --name grafana -p 3000:3000 grafana/grafana

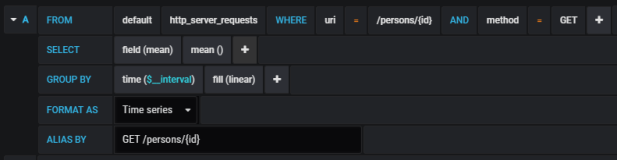

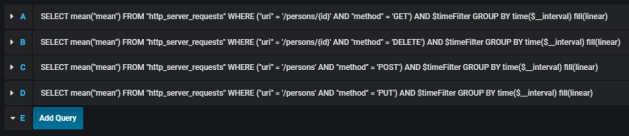

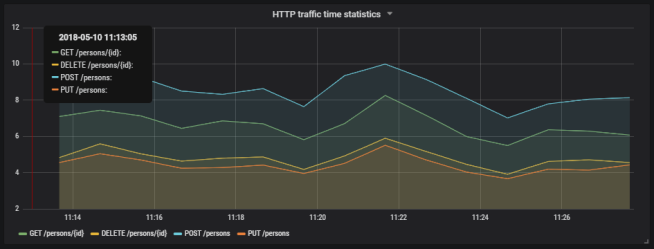

Grafana provides a user-friendly interface for creating Influx queries. We define a graph that visualizes the request processing time for each of the called endpoints, and the total number of requests received by the application. If we filter the statistics stored in the table http_server_requests by method type and uri, we collect all the metrics generated for a single endpoint.

A similar definition should be created for the other endpoints. We will illustrate them all on a single graph.

Here’s the final result.

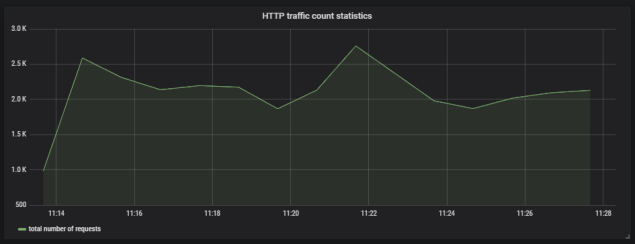

Here’s the graph that visualizes total number of requests sent to the application.

6. Running Prometheus

The most suitable way to run Prometheus locally is obviously through a Docker container. The API is exposed on port 9090. We should also pass the initial configuration file and the name of a Docker network. Why? You will find all the answers in the rest of this step.

docker run -d --name prometheus -p 9090:9090 -v /tmp/prometheus.yml:/etc/prometheus/prometheus.yml --network springboot prom/prometheus

In contrast to InfluxDB, Prometheus pulls metrics from an application. Therefore, we need to enable the actuator endpoint that exposes metrics for Prometheus, which is disabled by default. To enable it, set the property management.endpoint.prometheus.enabled to true, as shown in the configuration fragment below.

    management:
      endpoint:
        prometheus:
          enabled: true

Then we should set the address of the actuator endpoint exposed by the application in the Prometheus configuration file. The scrape_configs section is responsible for specifying a set of targets and parameters describing how to connect to them. By default, Prometheus tries to collect data from the defined target endpoint once a minute.

    scrape_configs:
      - job_name: 'springboot'
        metrics_path: '/actuator/prometheus'
        static_configs:
        - targets: ['person-service:2222']

Similarly to the integration with InfluxDB, we need to include the following artifact in the project’s dependencies.

    <dependency>
        <groupId>io.micrometer</groupId>
        <artifactId>micrometer-registry-prometheus</artifactId>
    </dependency>

In my case, Docker is running on a VM and is available under the IP 192.168.99.100. If I want Prometheus, which is launched as a Docker container, to be able to connect to my application, I should also launch the application as a Docker container. The most convenient way to link two independent containers is through a Docker network. If both containers are assigned to the same network, they are able to connect to each other using the container’s name as the target address. The Dockerfile is available in the root directory of the sample application’s source code. The second command visible below (docker build) is not required, because the required image piomin/person-service is available in my Docker Hub repository.

    $ docker network create springboot
    $ docker build -t piomin/person-service .
    $ docker run -d --name person-service -p 2222:2222 --network springboot piomin/person-service

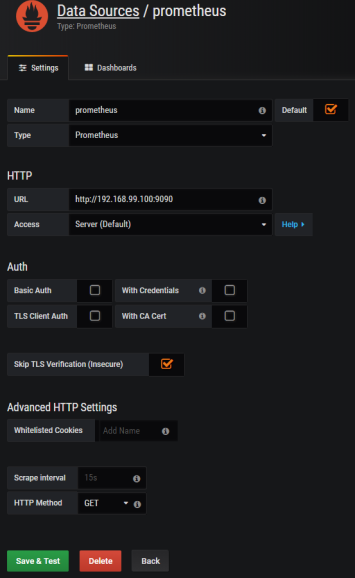

7. Integrate Prometheus with Grafana

Prometheus exposes a web console under the address 192.168.99.100:9090, where you can specify a query and display a graph with metrics. However, we can integrate it with Grafana to take advantage of the nicer visualization offered by this tool. First, you should create a Prometheus data source.

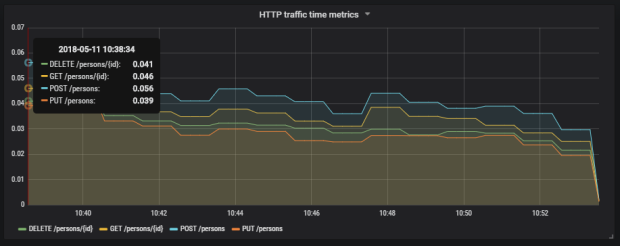

Then we should define queries for collecting metrics from the Prometheus API. Spring Boot Actuator exposes three different metrics related to HTTP traffic: http_server_requests_seconds_count, http_server_requests_seconds_sum and http_server_requests_seconds_max. For example, we may use the rate() function to calculate the per-second average rate of increase of the http_server_requests_seconds_sum time series, which holds the total number of seconds spent on processing requests. The values can be filtered by method and uri using an expression inside {}. The following picture illustrates the configuration of the rate() function for each endpoint.
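The arithmetic behind rate() can be sketched in plain Java for two scrapes of the same counter. This is a simplified illustration under my own assumptions: the real Prometheus function works on all samples in the selected time range and also handles counter resets and extrapolation, which this sketch ignores. The class and method names are hypothetical.

```java
// Simplified illustration of what rate() computes; not Prometheus code.
final class PromRate {

    private PromRate() {
    }

    // Per-second increase of a counter between two scrapes.
    static double rate(double earlier, double later, double secondsBetween) {
        return (later - earlier) / secondsBetween;
    }

    // Average seconds spent per request in the window:
    // rate(..._seconds_sum) divided by rate(..._seconds_count).
    static double averageLatency(double sumEarlier, double sumLater,
                                 double countEarlier, double countLater,
                                 double secondsBetween) {
        return rate(sumEarlier, sumLater, secondsBetween)
            / rate(countEarlier, countLater, secondsBetween);
    }

    public static void main(String[] args) {
        // Example numbers (made up): between two scrapes 60 seconds apart
        // the sum grew from 12 s to 18 s and the count from 100 to 160.
        System.out.println(rate(12.0, 18.0, 60.0));
        System.out.println(rate(100.0, 160.0, 60.0));
        System.out.println(averageLatency(12.0, 18.0, 100.0, 160.0, 60.0));
    }
}
```

With these made-up numbers the application spent about 0.1 seconds of processing time per second, handled one request per second, and so needed about 0.1 seconds per request on average. Dividing the rate of the _sum series by the rate of the _count series is a common way to graph average latency per endpoint.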

Here’s the graph.

Summary

The improvement in metrics generation between versions 1.5 and 2.0 of Spring Boot is significant. Exporting data to popular monitoring systems such as InfluxDB or Prometheus is now much easier than before, and does not require any additional development. The metrics relating to HTTP traffic are more detailed and may be easily associated with a specific endpoint, thanks to tags indicating the uri, method and status of the HTTP request. I think that the modifications in Spring Boot Actuator in relation to the previous version of Spring Boot could be one of the main motivations to migrate your applications to the newest version.