Hazelcast is a leading in-memory data grid (IMDG) solution. The main idea behind an IMDG is to distribute data across many nodes inside a cluster. That makes it a good fit for a cloud platform like Kubernetes, where you can easily scale the number of running instances up or down. Since Hazelcast is written in Java, you can easily integrate it with your Java application using standard libraries. Spring Boot can also simplify getting started with Hazelcast. You may also use an unofficial library that implements the Spring Data repository pattern for Hazelcast: Spring Data Hazelcast.


Hazelcast Hot Cache with Striim

I have previously published two articles about Hazelcast, an open source in-memory data grid. In the first, JPA caching with Hazelcast, Hibernate and Spring Boot, I described how to set up a JPA second-level cache with Hazelcast and MySQL. In the second, In memory data grid with Hazelcast, I showed a more advanced sample that uses Hazelcast distributed queries to speed up data access for a Spring Boot application. Using Hazelcast as a cache layer between your application and a relational database is generally a very good solution under one condition: all changes go through your application. If the data source is modified by another application that does not use your caching solution, your application ends up serving outdated data. Have you encountered this problem in your organization? In my organization we still use relational databases in almost all our systems. Sometimes this causes performance problems, because even optimized queries are too slow for real-time applications. The relational database is still required, so solutions like Hazelcast can help us.

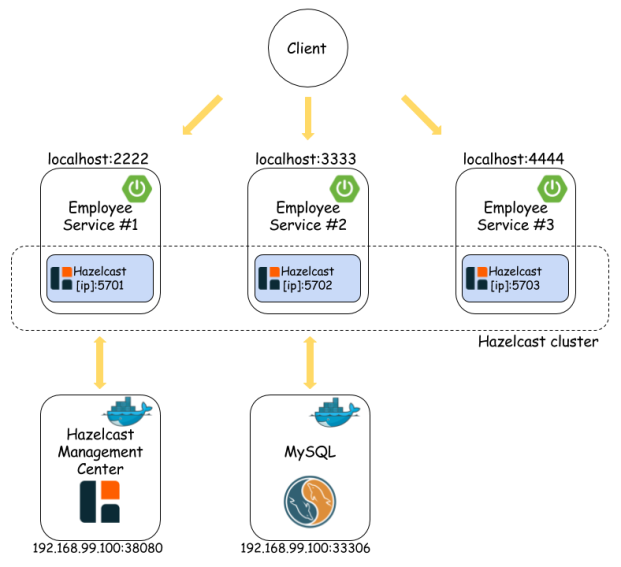

Let’s return to the problem of an outdated cache. This is where Striim, a real-time data integration and streaming analytics platform, comes in. The architecture of the presented solution is shown in the accompanying figure. We have two applications: the first one, employee-service, uses Hazelcast as a cache, while the second one, employee-app, writes changes directly to the database. Without a solution like Striim, data changed by employee-app is not visible to employee-service. Striim enables real-time data integration without modifying or slowing down the data source. It uses CDC (Change Data Capture) mechanisms to detect changes made to the data source by analyzing its binary logs. It supports the most popular transactional databases, such as Oracle, Microsoft SQL Server and MySQL. Striim has many interesting features, but also one serious drawback: it is not open source. An alternative to the presented solution, especially when using an Oracle database, can be Oracle In-Memory Data Grid with GoldenGate HotCache.
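To make the stale-cache problem and the CDC-based fix more concrete, here is a minimal plain-Java sketch. It does not use any Striim or Hazelcast API: the `ChangeEvent` class, the `applyToCache` method and the two in-memory maps are hypothetical stand-ins for the binary-log events, the cache writer and the real stores.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for one row-level change read from the database binary log.
class ChangeEvent {
    enum Type { INSERT, UPDATE, DELETE }

    final Type type;
    final int id;          // primary key of the changed row
    final String company;  // new column value (ignored for DELETE)

    ChangeEvent(Type type, int id, String company) {
        this.type = type;
        this.id = id;
        this.company = company;
    }
}

class CdcCacheSketch {
    // Stand-ins for the MySQL table and the Hazelcast map, both keyed by primary key.
    final Map<Integer, String> database = new HashMap<>();
    final Map<Integer, String> cache = new HashMap<>();

    // Cache-aside read, as done by employee-service: cache first, database on a miss.
    String read(int id) {
        String cached = cache.get(id);
        if (cached != null) {
            return cached;
        }
        String fromDb = database.get(id);
        if (fromDb != null) {
            cache.put(id, fromDb);
        }
        return fromDb;
    }

    // Direct write, as done by employee-app: the cache is bypassed entirely.
    ChangeEvent writeDirectly(int id, String company) {
        ChangeEvent.Type type = database.containsKey(id)
                ? ChangeEvent.Type.UPDATE : ChangeEvent.Type.INSERT;
        database.put(id, company);
        return new ChangeEvent(type, id, company);
    }

    // What a CDC pipeline does conceptually: replay each captured change onto the cache.
    void applyToCache(ChangeEvent event) {
        if (event.type == ChangeEvent.Type.DELETE) {
            cache.remove(event.id);
        } else {
            cache.put(event.id, event.company);
        }
    }
}
```

In this sketch, a read that follows `writeDirectly` keeps returning the old cached value until the corresponding event has been passed to `applyToCache`. That is the gap a CDC tool is meant to close.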

I prepared a sample application for this article; as usual, it is available on GitHub, on the striim branch. The employee-service application is based on Spring Boot and has an embedded Hazelcast client, which connects to the cluster and to Hazelcast Management Center. If data is not available in the cache, the application reads it from the MySQL database.

1. Starting MySQL and enabling binary log

Let’s start a MySQL database using Docker.

docker run -d --name mysql -e MYSQL_DATABASE=hz -e MYSQL_USER=hz -e MYSQL_PASSWORD=hz123 -e MYSQL_ALLOW_EMPTY_PASSWORD=yes -p 33306:3306 mysql

In MySQL versions before 8.0, the binary log is disabled by default. We have to enable it by adding the following lines to mysqld.cnf. Inside the Docker container, the configuration file is located at /etc/mysql/mysql.conf.d/mysqld.cnf.

log_bin = /var/log/mysql/binary.log

expire-logs-days = 14

max-binlog-size = 500M

server-id = 1

If you are running MySQL on Docker you should restart your container using docker restart mysql.

2. Starting Hazelcast Dashboard and Striim

As with MySQL, I used Docker to run both of them.

docker run -d --name striim -p 39080:9080 striim/evalversion

docker run -d --name hazelcast-mgmt -p 38080:8080 hazelcast/management-center:3.7.7

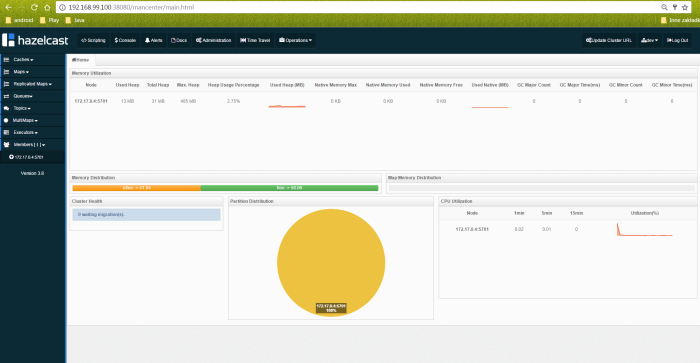

I selected version 3.7.7 of Hazelcast Management Center because it matches the Hazelcast version that the Spring Boot release used in the sample application includes by default. You should now be able to log in to the Hazelcast dashboard at http://192.168.99.100:38080/mancenter/ and to the Striim dashboard at http://192.168.99.100:39080/ (admin/admin).

3. Starting sample application

Build the sample application with mvn clean install and start it using java -jar employee-service-1.0-SNAPSHOT.jar. You can test it by calling one of the following endpoints:

/employees/person/{id}

/employees/company/{company}

/employees/{id}

Before testing, create the employee table in MySQL and insert some test data (you can run my test class pl.piomin.services.datagrid.employee.data.AddEmployeeRepositoryTest).

4. Configure entity mapping in Striim

Before creating our first application in Striim, we have to provide the mapping configuration. The first step is to copy your entity ORM mapping file into the Docker container filesystem. You can do this from the Striim dashboard or with the docker cp command. Here’s my orm.xml file, which the Striim HazelcastWriter uses when putting data into the cache.

<entity-mappings xmlns="http://www.eclipse.org/eclipselink/xsds/persistence/orm" version="2.4">
  <entity name="employee" class="pl.piomin.services.datagrid.employee.model.Employee">
    <table name="hz.employee" />
    <attributes>
      <id name="id" attribute-type="Integer">
        <column nullable="false" name="id" />
        <generated-value strategy="AUTO" />
      </id>
      <basic name="personId" attribute-type="Integer">
        <column nullable="false" name="person_id" />
      </basic>
      <basic name="company" attribute-type="String">
        <column name="company" />
      </basic>
    </attributes>
  </entity>
</entity-mappings>

We also have to provide a jar with the entity class. It should be placed in the /opt/Striim/lib directory in the Striim Docker container. Importantly, the fields must be public: do not make them private with setters, because that won’t work with HazelcastWriter. After all these changes, restart your container and proceed to the next steps. For the sample application, just build the employee-model module and upload the resulting jar to Striim.

public class Employee implements Serializable {

private static final long serialVersionUID = 3214253910554454648L;

public Integer id;

public Integer personId;

public String company;

public Employee() {

}

@Override

public String toString() {

return "Employee [id=" + id + ", personId=" + personId + ", company=" + company + "]";

}

}

5. Configuring MySQL CDC connection on Striim

Once all the previous steps are completed, we can finally create our application in Striim. When creating a new app, select Start with Template, and then MySQL CDC to Hazelcast. Enter your MySQL connection data and security credentials, then proceed. In addition to validating the connection, Striim also checks whether the binary log is enabled.

Then select tables for synchronization with cache.

6. Configuring Hazelcast on Striim

After starting the employee-service application you should see the following fragment in the log file.

Members [1] {

Member [192.168.8.205]:5701 - d568a38a-7531-453a-b7f8-db2be4715132 this

}

This address should be provided as the Hazelcast Cluster URL. We should also provide the ORM mapping file location and the cluster credentials (by default these are dev/dev-pass).

On the next screen you will see the ORM mapping visualization and the input selection. Your input is the MySQL server you defined in step 5.

7. Deploy application on Striim

After finishing the previous steps you will see the flow diagram. I suggest adding a log file target where all input events are stored as JSON. My diagram is shown in the figure below. Once your configuration is finished, deploy and start the application. At this stage I had some problems. For example, whenever I deployed the application after a Striim restart, I had to change something and save it first; otherwise an exception occurred during deployment. However, after a long struggle with Striim, I finally succeeded in running the application, so we can start testing.

8. Checking out

I created a JUnit test to illustrate the cache refresh performed by Striim. In this test I invoke the employees/company/{company} REST API method and collect the returned entities. Then I modify those entities with EmployeeRepository, which commits changes directly to the database, bypassing the Hazelcast cache. I invoke the REST API again and compare the results with the entities collected by the previous call. The personId field should differ from the value in the previously returned entity. You can also test it manually by calling the REST API endpoint and changing something in the database with a client such as MySQL Workbench.

@SpringBootTest(webEnvironment = WebEnvironment.DEFINED_PORT)

@RunWith(SpringRunner.class)

public class CacheRefreshEmployeeTest {

protected Logger logger = Logger.getLogger(CacheRefreshEmployeeTest.class.getName());

@Autowired

EmployeeRepository repository;

TestRestTemplate template = new TestRestTemplate();

@Test

public void testModifyAndRefresh() {

Employee[] e = template.getForObject("http://localhost:3333/employees/company/{company}", Employee[].class, "Test001");

for (int i = 0; i < e.length; i++) {

Employee eMod = repository.findOne(e[i].getId());

eMod.setPersonId(eMod.getPersonId()+1);

repository.save(eMod);

}

Employee[] e2 = template.getForObject("http://localhost:3333/employees/company/{company}", Employee[].class, "Test001");

for (int i = 0; i < e2.length; i++) {

Assert.assertNotEquals(e[i].getPersonId(), e2[i].getPersonId());

}

}

}

Here’s the picture of the Striim dashboard monitor. We can check how many events were processed, the current memory and CPU usage, and so on.

Final Thoughts

I have no definite opinion about Striim. On the one hand, it is an interesting solution with many integration possibilities and a nice dashboard for configuration and monitoring. On the other hand, it is not free from errors and bugs. My application crashed when an exception was thrown because Hazelcast’s cache had no matching serializer for the entity. This stopped the processing of any further events. It may be deliberate, but in my opinion subsequent events should still be processed, as they may affect other tables. Managing the application through the web dashboard is not very comfortable. Every time I restarted the container, I had to change something in the configuration, because the application threw an unintuitive exception on startup. From an application that is responsible for keeping the data in Hazelcast up to date, I would expect reliability above all. However, despite some drawbacks, Striim is worth a closer look.

In memory data grid with Hazelcast

In my previous article, JPA caching with Hazelcast, Hibernate and Spring Boot, I described an example that uses Hazelcast as a Hibernate second-level cache. One big disadvantage of that example was that entities could be cached only by primary key. Caching JPA queries by other attributes helped somewhat, but it did not solve the problem completely, because a query could not use entities that were already cached, even if they matched its criteria. In this article I’m going to show you a smart solution to that problem based on Hazelcast distributed queries.

Spring Boot has built-in auto-configuration for Hazelcast, which is activated if the library is available on the application classpath and a Config @Bean is declared.

@Bean

Config config() {

Config c = new Config();

c.setInstanceName("cache-1");

c.getGroupConfig().setName("dev").setPassword("dev-pass");

ManagementCenterConfig mcc = new ManagementCenterConfig().setUrl("http://192.168.99.100:38080/mancenter").setEnabled(true);

c.setManagementCenterConfig(mcc);

SerializerConfig sc = new SerializerConfig().setTypeClass(Employee.class).setClass(EmployeeSerializer.class);

c.getSerializationConfig().addSerializerConfig(sc);

return c;

}

In the code fragment above we declared the cluster name and password, the connection parameters for Hazelcast Management Center and the entity serialization configuration. The entity is pretty simple: it has an @Id and two fields used for searching, personId and company.

@Entity

public class Employee implements Serializable {

private static final long serialVersionUID = 3214253910554454648L;

@Id

@GeneratedValue

private Integer id;

private Integer personId;

private String company;

public Integer getId() {

return id;

}

public void setId(Integer id) {

this.id = id;

}

public Integer getPersonId() {

return personId;

}

public void setPersonId(Integer personId) {

this.personId = personId;

}

public String getCompany() {

return company;

}

public void setCompany(String company) {

this.company = company;

}

}

Every entity needs a serializer if it is to be put into and read from the cache. There are some default serializers available in the Hazelcast library, but I implemented a custom one for our sample. It is based on StreamSerializer and uses ObjectDataOutput and ObjectDataInput.

public class EmployeeSerializer implements StreamSerializer<Employee> {

@Override

public int getTypeId() {

return 1;

}

@Override

public void write(ObjectDataOutput out, Employee employee) throws IOException {

out.writeInt(employee.getId());

out.writeInt(employee.getPersonId());

out.writeUTF(employee.getCompany());

}

@Override

public Employee read(ObjectDataInput in) throws IOException {

Employee e = new Employee();

e.setId(in.readInt());

e.setPersonId(in.readInt());

e.setCompany(in.readUTF());

return e;

}

@Override

public void destroy() {

}

}
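The key property of such a serializer is that `read` consumes the fields in exactly the order in which `write` produced them. The sketch below illustrates that round trip with the standard `java.io` data streams instead of Hazelcast classes (Hazelcast's `ObjectDataOutput` and `ObjectDataInput` extend the `java.io.DataOutput` and `DataInput` interfaces). The class name `SerializerRoundTripSketch` and its methods are made up for this illustration and are not part of the sample project.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;

class SerializerRoundTripSketch {

    // Writes the three fields in the same order as EmployeeSerializer.write.
    static byte[] write(int id, int personId, String company) {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(bytes)) {
            out.writeInt(id);
            out.writeInt(personId);
            out.writeUTF(company);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Reads the fields back; the order must mirror write() exactly.
    static String read(byte[] data) {
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(data))) {
            int id = in.readInt();
            int personId = in.readInt();
            String company = in.readUTF();
            return "Employee [id=" + id + ", personId=" + personId + ", company=" + company + "]";
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        // prints "Employee [id=1, personId=100, company=Test001]"
        System.out.println(read(write(1, 100, "Test001")));
    }
}
```

One thing to keep in mind with the serializer above: `out.writeInt(employee.getId())` unboxes an `Integer`, so it assumes that `id` and `personId` are never null for entities placed in the cache.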

There is also a DAO interface for interacting with the database. It has two search methods and extends Spring Data CrudRepository.

public interface EmployeeRepository extends CrudRepository<Employee, Integer> {

public Employee findByPersonId(Integer personId);

public List<Employee> findByCompany(String company);

}

In this sample the Hazelcast instance is embedded in the application. When starting the Spring Boot application we have to provide the VM argument -DPORT, which sets the port used for exposing the service REST API. Hazelcast automatically detects other running member instances, and its own port is incremented out of the box. Here’s the @RestController class with the exposed API.

@RestController

public class EmployeeController {

private Logger logger = Logger.getLogger(EmployeeController.class.getName());

@Autowired

EmployeeService service;

@GetMapping("/employees/person/{id}")

public Employee findByPersonId(@PathVariable("id") Integer personId) {

logger.info(String.format("findByPersonId(%d)", personId));

return service.findByPersonId(personId);

}

@GetMapping("/employees/company/{company}")

public List<Employee> findByCompany(@PathVariable("company") String company) {

logger.info(String.format("findByCompany(%s)", company));

return service.findByCompany(company);

}

@GetMapping("/employees/{id}")

public Employee findById(@PathVariable("id") Integer id) {

logger.info(String.format("findById(%d)", id));

return service.findById(id);

}

@PostMapping("/employees")

public Employee add(@RequestBody Employee emp) {

logger.info(String.format("add(%s)", emp));

return service.add(emp);

}

}

An EmployeeService @Service is injected into the EmployeeController. EmployeeService contains a simple implementation of switching between the Hazelcast cache and the Spring Data @Repository. In every find method we first try to find the data in the cache; if it is not there, we look it up in the database and then put the found entity into the cache.

@Service

public class EmployeeService {

private Logger logger = Logger.getLogger(EmployeeService.class.getName());

@Autowired

EmployeeRepository repository;

@Autowired

HazelcastInstance instance;

IMap<Integer, Employee> map;

@PostConstruct

public void init() {

map = instance.getMap("employee");

map.addIndex("company", true);

logger.info("Employees cache: " + map.size());

}

@SuppressWarnings("rawtypes")

public Employee findByPersonId(Integer personId) {

Predicate predicate = Predicates.equal("personId", personId);

logger.info("Employee cache find");

Collection<Employee> ps = map.values(predicate);

logger.info("Employee cached: " + ps);

Optional<Employee> e = ps.stream().findFirst();

if (e.isPresent())

return e.get();

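logger.info("Employee find");

Employee emp = repository.findByPersonId(personId);

logger.info("Employee: " + emp);

// Cache only entities that exist; calling emp.getId() on a missing row would throw a NullPointerException

if (emp != null)

map.put(emp.getId(), emp);

return emp;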

}

@SuppressWarnings("rawtypes")

public List<Employee> findByCompany(String company) {

Predicate predicate = Predicates.equal("company", company);

logger.info("Employees cache find");

Collection<Employee> ps = map.values(predicate);

logger.info("Employees cache size: " + ps.size());

if (ps.size() > 0) {

return ps.stream().collect(Collectors.toList());

}

logger.info("Employees find");

List<Employee> e = repository.findByCompany(company);

logger.info("Employees size: " + e.size());

e.parallelStream().forEach(it -> {

map.putIfAbsent(it.getId(), it);

});

return e;

}

public Employee findById(Integer id) {

Employee e = map.get(id);

if (e != null)

return e;

e = repository.findOne(id);

// IMap does not accept null values, so cache only entities that were found

if (e != null)

map.put(id, e);

return e;

}

public Employee add(Employee e) {

e = repository.save(e);

map.put(e.getId(), e);

return e;

}

}
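The service above relies on `map.addIndex("company", true)` and on predicate queries over map values. As a rough, plain-Java analogy (not Hazelcast's actual implementation), the sketch below contrasts a full scan over all entries with a lookup through a secondary index keyed by the attribute value. The class `CompanyIndexSketch` and its methods are hypothetical; the sorted `TreeMap` only loosely mirrors the "ordered" flag of the index.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class CompanyIndexSketch {
    // Primary storage: employee id -> company (other fields omitted for brevity).
    private final Map<Integer, String> entries = new HashMap<>();
    // Secondary index: company -> ids of employees working there.
    private final TreeMap<String, List<Integer>> byCompany = new TreeMap<>();

    void put(int id, String company) {
        String previous = entries.put(id, company);
        if (previous != null) {
            // Keep the index consistent when an existing entry changes company.
            byCompany.get(previous).remove(Integer.valueOf(id));
        }
        byCompany.computeIfAbsent(company, c -> new ArrayList<>()).add(id);
    }

    // Without an index: every entry has to be inspected.
    List<Integer> scan(String company) {
        List<Integer> ids = new ArrayList<>();
        for (Map.Entry<Integer, String> e : entries.entrySet()) {
            if (e.getValue().equals(company)) {
                ids.add(e.getKey());
            }
        }
        return ids;
    }

    // With an index: a single lookup by attribute value.
    List<Integer> lookup(String company) {
        return new ArrayList<>(byCompany.getOrDefault(company, new ArrayList<>()));
    }
}
```

Both methods return the same ids; the difference is only in how much data has to be visited, which is why an index on a frequently queried attribute such as company is worth declaring.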

If you are interested in running the sample application, you can clone my repository on GitHub. The person-service module contains the example for my previous article about the Hibernate second-level cache with Hazelcast; in employee-module there is the example for this article.

Testing

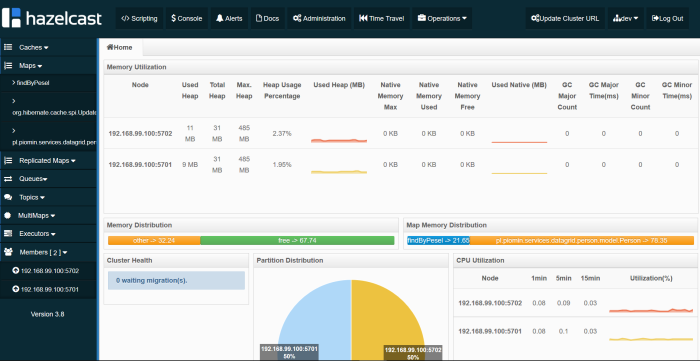

Let’s start three instances of the employee service on different ports using the VM argument -DPORT. In the first figure, shown at the beginning of the article, these ports are 2222, 3333 and 4444. When starting the third and last service instance, you should see the fragment below in the application logs. It means that a Hazelcast cluster of three members has been set up.

2017-05-09 23:01:48.127 INFO 16432 --- [ration.thread-0] c.h.internal.cluster.ClusterService : [192.168.1.101]:5703 [dev] [3.7.7]

Members [3] {

Member [192.168.1.101]:5701 - 7a8dbf3d-a488-4813-a312-569f0b9dc2ca

Member [192.168.1.101]:5702 - 494fd1ac-341b-451c-b585-1ad58a280fac

Member [192.168.1.101]:5703 - 9750bd3c-9cf8-48b8-a01f-b14c915937c3 this

}

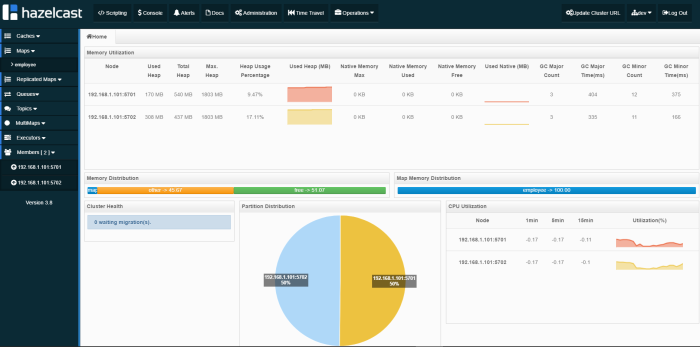

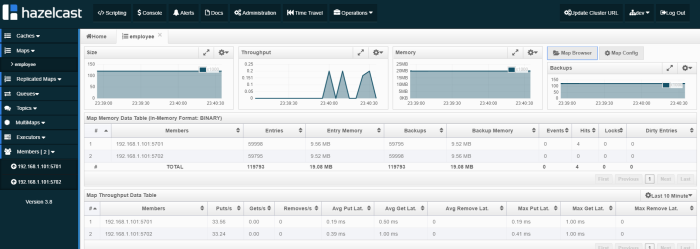

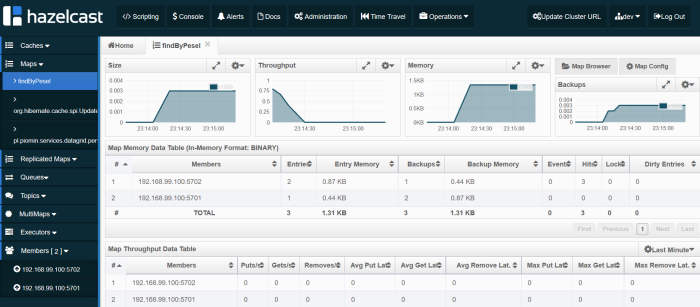

Here is a picture from Hazelcast Management Center for two running members (the free version of Hazelcast Management Center supports only two members).

Then run Docker containers with MySQL and Hazelcast Management Center.

docker run -d --name mysql -p 33306:3306 mysql

docker run -d --name hazelcast-mgmt -p 38080:8080 hazelcast/management-center:latest

Now you can try calling the endpoint http://localhost:/employees/company/{company} on each of your services. You should see that data is cached in the cluster: even if you call the endpoint on a different service, it finds the entities put into the cache by another service. After several attempts my service instances had put about 100k entities into the cache. The distribution between the two Hazelcast members is 50% to 50%.
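The even split follows from how a data grid assigns entries to members: each key is hashed to one of a fixed number of partitions, and the partitions are spread across the members. The sketch below is a deliberately simplified model of that idea, not Hazelcast's real algorithm. Only the default partition count of 271 is taken from Hazelcast; the use of `Integer.hashCode` (Hazelcast hashes the serialized key) and the round-robin assignment of partitions to members are assumptions made for illustration.

```java
class PartitionSketch {
    // Hazelcast's default partition count; everything else here is a simplification.
    static final int PARTITION_COUNT = 271;

    // Counts how many of the keys 0..entries-1 each member would own.
    static int[] distribute(int entries, int members) {
        int[] perMember = new int[members];
        for (int key = 0; key < entries; key++) {
            // Stand-in hash function for the key.
            int partitionId = Math.floorMod(Integer.hashCode(key), PARTITION_COUNT);
            // Stand-in assignment: partitions are spread round-robin over members.
            int owner = partitionId % members;
            perMember[owner]++;
        }
        return perMember;
    }

    public static void main(String[] args) {
        int[] counts = distribute(100_000, 2);
        System.out.println("member 0: " + counts[0] + ", member 1: " + counts[1]);
    }
}
```

With 100k keys and two members, this model also ends up very close to a 50/50 split, because the number of keys is much larger than the number of partitions.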

Final Words

We could probably implement a smarter solution for the problem described in this article, but I just wanted to show you the idea. I tried to use Spring Data Hazelcast for that, but I had a problem running it in a Spring Boot application. It provides the HazelcastRepository interface, which is similar to Spring Data CrudRepository but operates on entities cached in the Hazelcast grid and uses the Spring Data KeyValue module. The project is not well documented and, as I said, it didn’t work with Spring Boot, so I decided to implement my own simple solution 🙂

In my local environment, visualized at the beginning of the article, queries on the cache were about 10 times faster than similar queries on the database. I inserted 2M records into the employee table. A Hazelcast data grid can be not only a second-level cache but even a middleware layer between your application and the database. If your priority is the performance of queries on large amounts of data and you have a lot of RAM, an in-memory data grid is the right solution for you 🙂

JPA caching with Hazelcast, Hibernate and Spring Boot

Preface

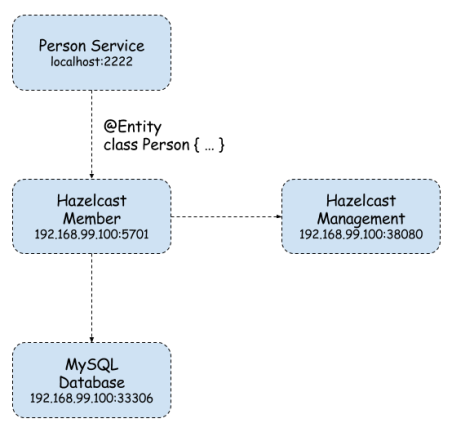

An in-memory data grid is an in-memory distributed key-value store that enables caching data using distributed clusters. Do not confuse it with an in-memory or NoSQL database. In most cases it is used for performance reasons: all data is stored in RAM, not on disk as in traditional databases. I first came into contact with an in-memory data grid when we were considering a move to Oracle Coherence in one of the organizations I worked for. The solution really made me curious. Oracle Coherence is obviously a paid solution, but there are also open source alternatives, among which the most interesting seem to be Apache Ignite and Hazelcast. Today I’m going to show you how to use Hazelcast for caching data stored in a MySQL database accessed through Spring Data DAO objects. The figure illustrates the architecture of the presented solution.

Implementation

Starting Docker containers

We use three Docker containers: the first with the MySQL database, the second with a Hazelcast instance and the third with Hazelcast Management Center, a UI dashboard for monitoring Hazelcast cluster instances.

docker run -d --name mysql -p 33306:3306 mysql

docker run -d --name hazelcast -p 5701:5701 hazelcast/hazelcast

docker run -d --name hazelcast-mgmt -p 38080:8080 hazelcast/management-center:latest

If we would like the Hazelcast instance to connect to Hazelcast Management Center, we need to place a custom hazelcast.xml in the /opt/hazelcast directory inside the Docker container. This can be done in two ways: by extending the hazelcast base image, or by copying the file into an existing hazelcast container and restarting it.

docker run -d --name hazelcast -p 5701:5701 hazelcast/hazelcast

docker stop hazelcast

docker start hazelcast

Here’s the most important fragment of the Hazelcast configuration file.

<hazelcast xmlns="http://www.hazelcast.com/schema/config" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.hazelcast.com/schema/config http://www.hazelcast.com/schema/config/hazelcast-config-3.8.xsd">

<group>

<name>dev</name>

<password>dev-pass</password>

</group>

<management-center enabled="true" update-interval="3">http://192.168.99.100:38080/mancenter</management-center>

...

</hazelcast>

The Hazelcast dashboard is available at http://192.168.99.100:38080/mancenter. There we can monitor all running cluster members, maps and some other parameters.

Maven configuration

The project is based on Spring Boot 1.5.3.RELEASE. We also need to add the Spring Web and MySQL Java connector dependencies. Here’s the root project pom.xml.

<parent>
  <groupId>org.springframework.boot</groupId>
  <artifactId>spring-boot-starter-parent</artifactId>
  <version>1.5.3.RELEASE</version>
</parent>
...
<dependencies>
  <dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
  </dependency>
  <dependency>
    <groupId>mysql</groupId>
    <artifactId>mysql-connector-java</artifactId>
    <scope>runtime</scope>
  </dependency>
  ...
</dependencies>

Inside the person-service module we declared additional dependencies on the Hazelcast artifacts and Spring Data JPA. I had to override the hibernate-core version managed by Spring Boot 1.5.3.RELEASE, because Hazelcast didn’t work properly with 5.0.12.Final. Hazelcast needs hibernate-core version 5.0.9.Final; otherwise, an exception occurs when starting the application.

<dependencies>
  <dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-data-jpa</artifactId>
  </dependency>
  <dependency>
    <groupId>com.hazelcast</groupId>
    <artifactId>hazelcast</artifactId>
  </dependency>
  <dependency>
    <groupId>com.hazelcast</groupId>
    <artifactId>hazelcast-client</artifactId>
  </dependency>
  <dependency>
    <groupId>com.hazelcast</groupId>
    <artifactId>hazelcast-hibernate5</artifactId>
  </dependency>
  <dependency>
    <groupId>org.hibernate</groupId>
    <artifactId>hibernate-core</artifactId>
    <version>5.0.9.Final</version>
  </dependency>
</dependencies>

Hibernate Cache configuration

You can probably configure it in several different ways, but for me the most suitable place was application.yml. Here’s the relevant fragment of the YAML configuration file. I enabled the Hibernate L2 cache and set the Hazelcast native client address, the credentials and the cache region factory class HazelcastCacheRegionFactory. We could also use HazelcastLocalCacheRegionFactory. The difference between them is in performance: the local factory is faster because its operations are handled locally rather than as distributed calls. On the other hand, if you use HazelcastCacheRegionFactory, you can see your maps in Management Center.

spring:
  application:
    name: person-service
  datasource:
    url: jdbc:mysql://192.168.99.100:33306/datagrid?useSSL=false
    username: datagrid
    password: datagrid
  jpa:
    properties:
      hibernate:
        show_sql: true
        cache:
          use_query_cache: true
          use_second_level_cache: true
          hazelcast:
            use_native_client: true
            native_client_address: 192.168.99.100:5701
            native_client_group: dev
            native_client_password: dev-pass
          region:
            factory_class: com.hazelcast.hibernate.HazelcastCacheRegionFactory

Application code

First, we need to enable caching for Person @Entity.

@Cache(usage = CacheConcurrencyStrategy.READ_WRITE)

@Entity

public class Person implements Serializable {

private static final long serialVersionUID = 3214253910554454648L;

@Id

@GeneratedValue

private Integer id;

private String firstName;

private String lastName;

private String pesel;

private int age;

public Integer getId() {

return id;

}

public void setId(Integer id) {

this.id = id;

}

public String getFirstName() {

return firstName;

}

public void setFirstName(String firstName) {

this.firstName = firstName;

}

public String getLastName() {

return lastName;

}

public void setLastName(String lastName) {

this.lastName = lastName;

}

public String getPesel() {

return pesel;

}

public void setPesel(String pesel) {

this.pesel = pesel;

}

public int getAge() {

return age;

}

public void setAge(int age) {

this.age = age;

}

@Override

public String toString() {

return "Person [id=" + id + ", firstName=" + firstName + ", lastName=" + lastName + ", pesel=" + pesel + "]";

}

}

The DAO is implemented using Spring Data CrudRepository. The sample application source code is available on GitHub.

public interface PersonRepository extends CrudRepository<Person, Integer> {

public List<Person> findByPesel(String pesel);

}

Testing

Let’s insert some more data into the table. You can use my AddPersonRepositoryTest for that; it inserts 1M rows into the person table. Finally, we can call the endpoint http://localhost:2222/persons/{id} twice with the same id. For me, it looks as shown below: 22 ms for the first call and 3 ms for the next call, which is read from the L2 cache. An entity can be cached only by primary key. If you call http://localhost:2222/persons/pesel/{pesel}, the entity is always looked up in the database, bypassing the L2 cache.

2017-05-05 17:07:27.360 DEBUG 9164 --- [nio-2222-exec-9] org.hibernate.SQL : select person0_.id as id1_0_0_, person0_.age as age2_0_0_, person0_.first_name as first_na3_0_0_, person0_.last_name as last_nam4_0_0_, person0_.pesel as pesel5_0_0_ from person person0_ where person0_.id=?

Hibernate: select person0_.id as id1_0_0_, person0_.age as age2_0_0_, person0_.first_name as first_na3_0_0_, person0_.last_name as last_nam4_0_0_, person0_.pesel as pesel5_0_0_ from person person0_ where person0_.id=?

2017-05-05 17:07:27.362 DEBUG 9164 --- [nio-2222-exec-9] o.h.l.p.e.p.i.ResultSetProcessorImpl : Starting ResultSet row #0

2017-05-05 17:07:27.362 DEBUG 9164 --- [nio-2222-exec-9] l.p.e.p.i.EntityReferenceInitializerImpl : On call to EntityIdentifierReaderImpl#resolve, EntityKey was already known; should only happen on root returns with an optional identifier specified

2017-05-05 17:07:27.363 DEBUG 9164 --- [nio-2222-exec-9] o.h.engine.internal.TwoPhaseLoad : Resolving associations for [pl.piomin.services.datagrid.person.model.Person#444]

2017-05-05 17:07:27.364 DEBUG 9164 --- [nio-2222-exec-9] o.h.engine.internal.TwoPhaseLoad : Adding entity to second-level cache: [pl.piomin.services.datagrid.person.model.Person#444]

2017-05-05 17:07:27.373 DEBUG 9164 --- [nio-2222-exec-9] o.h.engine.internal.TwoPhaseLoad : Done materializing entity [pl.piomin.services.datagrid.person.model.Person#444]

2017-05-05 17:07:27.373 DEBUG 9164 --- [nio-2222-exec-9] o.h.r.j.i.ResourceRegistryStandardImpl : HHH000387: ResultSet's statement was not registered

2017-05-05 17:07:27.374 DEBUG 9164 --- [nio-2222-exec-9] .l.e.p.AbstractLoadPlanBasedEntityLoader : Done entity load : pl.piomin.services.datagrid.person.model.Person#444

2017-05-05 17:07:27.374 DEBUG 9164 --- [nio-2222-exec-9] o.h.e.t.internal.TransactionImpl : committing

2017-05-05 17:07:30.168 DEBUG 9164 --- [nio-2222-exec-6] o.h.e.t.internal.TransactionImpl : begin

2017-05-05 17:07:30.171 DEBUG 9164 --- [nio-2222-exec-6] o.h.e.t.internal.TransactionImpl : committing

Query Cache

We can enable caching of JPA query results by marking the repository method with Spring’s @Cacheable annotation and adding @EnableCaching to the main class definition.

public interface PersonRepository extends CrudRepository<Person, Integer> {

@Cacheable("findByPesel")

public List<Person> findByPesel(String pesel);

}

In addition to the @EnableCaching annotation, we should declare HazelcastInstance and CacheManager beans. HazelcastCacheManager from the hazelcast-spring library is used as the cache manager.

@SpringBootApplication

@EnableCaching

public class PersonApplication {

public static void main(String[] args) {

SpringApplication.run(PersonApplication.class, args);

}

@Bean

HazelcastInstance hazelcastInstance() {

ClientConfig config = new ClientConfig();

config.getGroupConfig().setName("dev").setPassword("dev-pass");

config.getNetworkConfig().addAddress("192.168.99.100");

config.setInstanceName("cache-1");

HazelcastInstance instance = HazelcastClient.newHazelcastClient(config);

return instance;

}

@Bean

CacheManager cacheManager() {

return new HazelcastCacheManager(hazelcastInstance());

}

}

Now we can try to find a person by PESEL number by calling the endpoint http://localhost:2222/persons/pesel/{pesel}. The cached query is stored as a map, as you can see in the picture below.
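Conceptually, a method-level cache like this is a map from the method argument to the method result. With Spring's default key generation, a single non-null argument is used directly as the cache key. The plain-Java sketch below models that behavior without Spring or Hazelcast; the class `QueryCacheSketch`, its `databaseCalls` counter and the `repository` function are hypothetical and exist only for illustration.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.function.Function;

class QueryCacheSketch {
    // Plays the role of the "findByPesel" cache: query argument -> query result.
    private final Map<String, List<String>> cache = new ConcurrentHashMap<>();
    // Stand-in for the real repository method that would run SQL.
    private final Function<String, List<String>> repository;
    // Counts how often the repository (the "database") is actually called.
    int databaseCalls = 0;

    QueryCacheSketch(Function<String, List<String>> repository) {
        this.repository = repository;
    }

    List<String> findByPesel(String pesel) {
        // Only a cache miss reaches the repository; later calls with the
        // same argument are answered from the map.
        return cache.computeIfAbsent(pesel, key -> {
            databaseCalls++;
            return repository.apply(key);
        });
    }
}
```

Note that the key is the query argument, not the entity id. This is why an entity already cached by primary key does not help a query by PESEL: the two caches are independent maps.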

Clustering

Before the final words, let me say a little about clustering, which is the key functionality of the Hazelcast in-memory data grid. In the previous sections we relied on a single Hazelcast instance. Let’s begin by running a second Hazelcast container exposed on a different port.

docker run -d --name hazelcast2 -p 5702:5701 hazelcast/hazelcast

Now we should make one change in the hazelcast.xml configuration file. Because the data grid runs inside a Docker container, the public address has to be set. For the first container it is 192.168.99.100:5701, and for the second it is 192.168.99.100:5702, because it is exposed on port 5702.

<network>

...

<public-address>192.168.99.100:5701</public-address>

...

</network>

When starting the person-service application you should see log output similar to the fragment below, showing a connection to two cluster members.

Members [2] {

Member [192.168.99.100]:5702 - 04f790bc-6c2d-4c21-ba8f-7761a4a7422c

Member [192.168.99.100]:5701 - 2ca6e30d-a8a7-46f7-b1fa-37921aaa0e6b

}

All Hazelcast running instances are visible in Management Center.

Conclusion

Caching and clustering with Hazelcast are simple and fast. We can cache JPA entities and queries. Monitoring is done via the Hazelcast Management Center dashboard. One problem for me is that I’m able to cache entities only by primary key. If I want to find an entity by another attribute, such as the PESEL number, I have to cache the findByPesel query. Even if the entity was cached before by id, the query will not find it in the cache but will run SQL against the database; only the next call of the same query is served from the cache. I’ll show you a smart solution to that problem in my next article on this subject, In memory data grid with Hazelcast.